Steps:

- Create a private feed in Azure DevOps Artifacts

- Create Personal Access Token (PAT) in Azure DevOps with

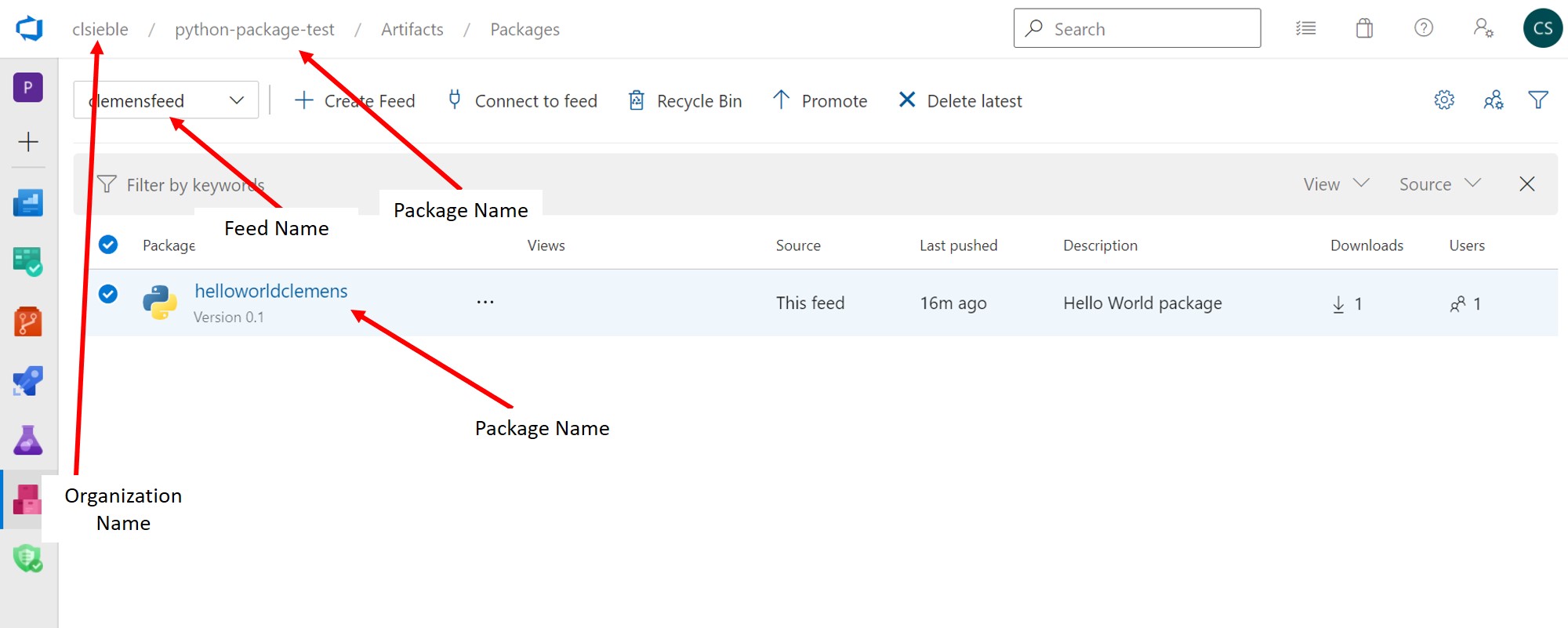

Feed Readpermission (details) - Navigate to the Azure DevOps Artifacts Feed page where you can see the details for the next steps (you'll need feed name, project name, organization name and later also package name):
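Once you have the organization, project and feed names, you can derive the feed's package-source URLs. The package type is not specified at this point, so the Python sketch below assumes a project-scoped feed and shows the usual URL patterns for NuGet, PyPI and npm, plus the HTTP Basic `Authorization` header that carries the PAT. The names `my-org`, `my-project` and `my-feed`, and the helpers `feed_urls` and `basic_auth_header`, are hypothetical.

```python
import base64
from urllib.parse import quote

# Hypothetical placeholder names: replace them with the values shown on your feed page.
ORGANIZATION = "my-org"
PROJECT = "my-project"
FEED = "my-feed"


def feed_urls(organization: str, project: str, feed: str) -> dict[str, str]:
    """Return package-source URLs for a project-scoped Azure Artifacts feed."""
    # quote() percent-encodes characters such as spaces in project names.
    base = (
        f"https://pkgs.dev.azure.com/{quote(organization)}/{quote(project)}"
        f"/_packaging/{quote(feed)}"
    )
    return {
        "nuget": f"{base}/nuget/v3/index.json",
        "pypi": f"{base}/pypi/simple/",
        "npm": f"{base}/npm/registry/",
    }


def basic_auth_header(pat: str) -> str:
    """Build an HTTP Basic Authorization header that uses the PAT as the password.

    Azure DevOps accepts an empty user name with a PAT, so the user part is left empty.
    """
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    urls = feed_urls(ORGANIZATION, PROJECT, FEED)
    print(urls["pypi"])
    # https://pkgs.dev.azure.com/my-org/my-project/_packaging/my-feed/pypi/simple/
```

For an organization-scoped feed, the project segment is omitted from the URL. Keep the PAT out of source control and pass it in through a secret variable or environment variable instead.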

- Create a build pipeline in Azure DevOps that builds the package and pushes it to the private feed:

trigger: