Applying Back Pressure When Overloaded

[...]

Let’s assume we have asynchronous transaction services fronted by an input and output queues, or similar FIFO structures. If we want the system to meet a response time quality-of-service (QOS) guarantee, then we need to consider the three following variables:

- The time taken for individual transactions on a thread

- The number of threads in a pool that can execute transactions in parallel

- The length of the input queue to set the maximum acceptable latency

max latency = (transaction time / number of threads) * queue length

queue length = max latency / (transaction time / number of threads)By allowing the queue to be unbounded the latency will continue to increase. So if we want to set a maximum response time then we need to limit the queue length.

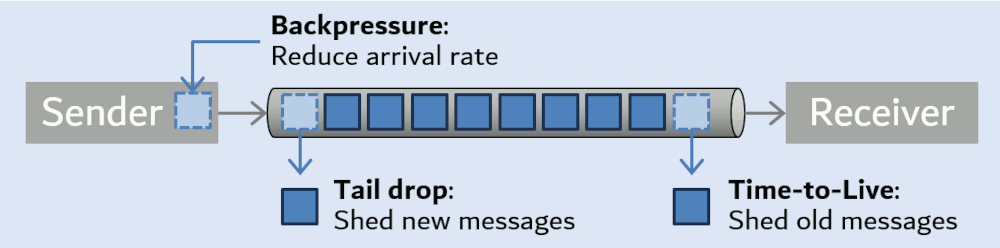

By bounding the input queue we block the thread receiving network packets which will apply back pressure up stream. If the network protocol is TCP, similar back pressure is applied via the filling of network buffers, on the sender. This process can repeat all the way back via the gateway to the customer. For each service we need to configure the queues so that they do their part in achieving the required quality-of-service for the end-to-end customer experience.

One of the biggest wins I often find is to improve the time taken to process individual transaction latency. This helps in the best and worst case scenarios.

[...]

ScyllaDB's talk: How to Maximize Database Concurrency

Q: No system supports unlimited concurrency. Otherwise, no matter how fast your database is, you’ll eventually end up reaching the retrograde region. Do you have any recommendations for workloads that are unbound and/or spiky by nature?

A: Yeah, in my career, all workloads are spiky so you always get to solve this problem. You need to think about what happens when the wheels fall off. When work bunches up, what are you going to do? You have two choices. You can queue and wait or you can load shed. You need to make that choice consistently for your system. Your chosen scale chooses a throughput you can handle within SLA. Your SLA tells you how often you’re allowed to violate your latency target so make sure the reality you’ve observed violates your design throughput well less than the limit your SLA requires. And then when reality drives you outside that window, you either queue and wait and blow up your latency or you load shed and effectively force the user to implement the queue themselves. Load shedding is not magic. It’s just choosing to force the user to implement the queue.