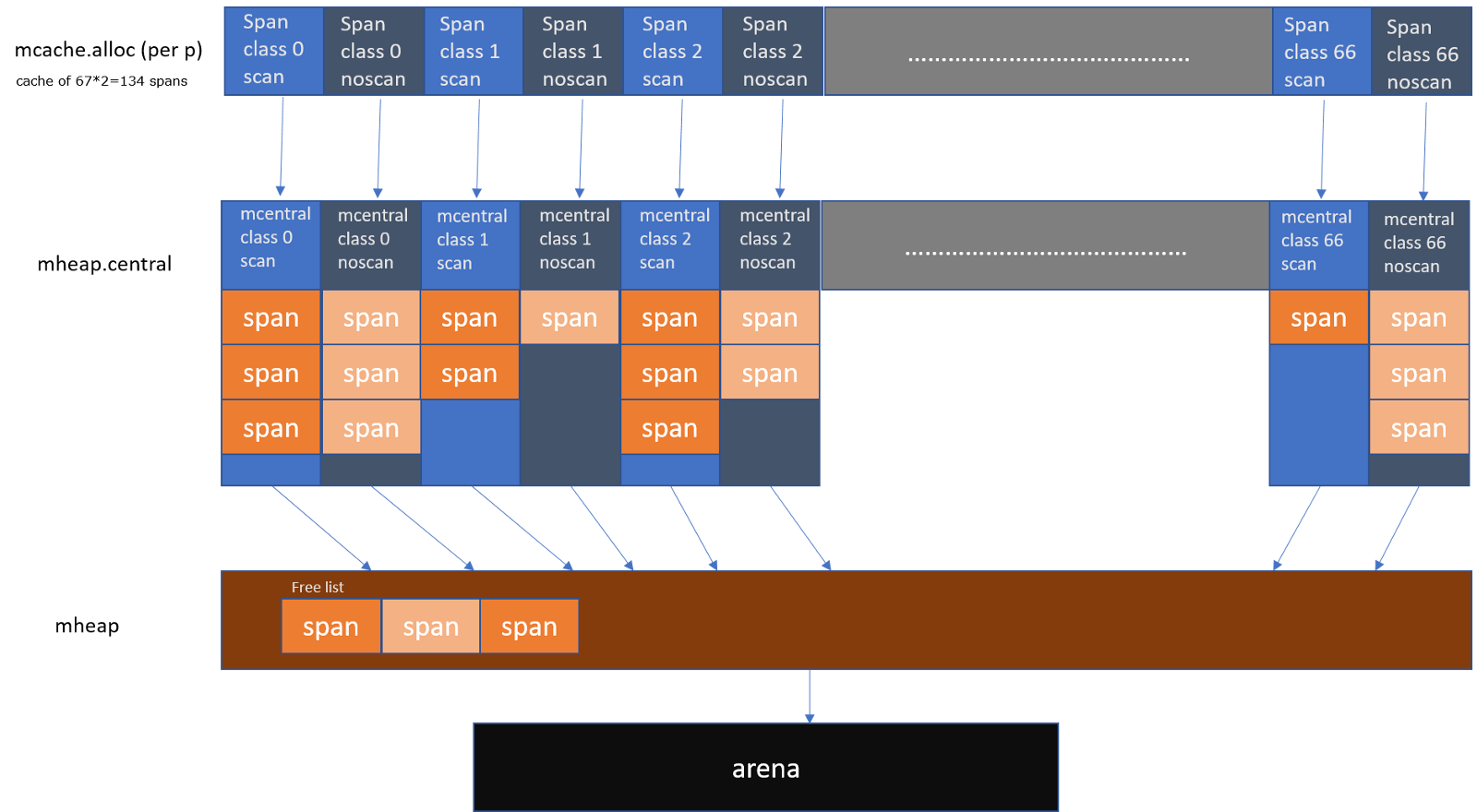

size class 67 types class 0 is for large object

// sizeclasses.go

// class bytes/obj bytes/span objects tail waste max waste

// 1 8 8192 1024 0 87.50%

// 2 16 8192 512 0 43.75%

// 3 32 8192 256 0 46.88%

// 4 48 8192 170 32 31.52%

// ...

// 64 27264 81920 3 128 10.00%

// 65 28672 57344 2 0 4.91%

// 66 32768 32768 1 0 12.50%

_NumSizeClasses = 67

// Code generated by mksizeclasses.go; DO NOT EDIT.

// go:generate go run mksizeclasses.go

var class_to_size = [_NumSizeClasses]uint16{0, 8, 16, 32, 48, ..., 28672, 32768}

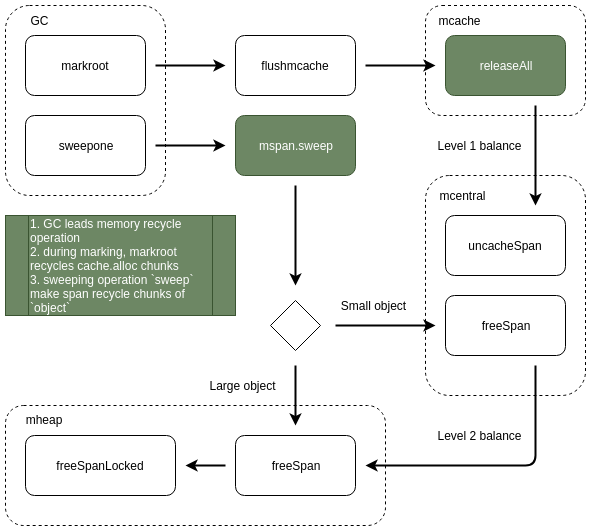

var class_to_allocnpages = [_NumSizeClasses]uint8{0, 1, 1, 1, 1, ..., 7, 4}cache -> central -> heap

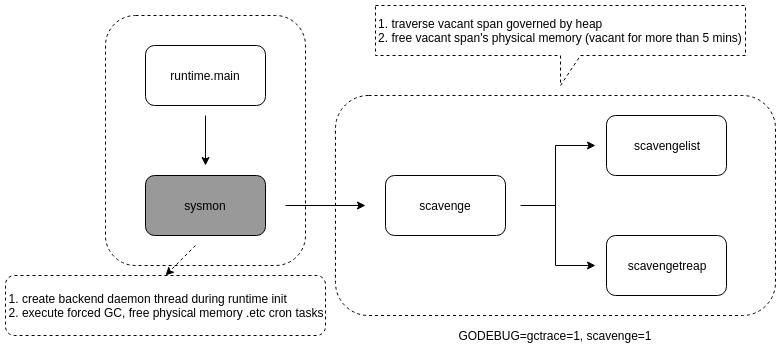

img reference

img reference

zero garbage: avoid allocating on heap to reduce GC pressure

memory allocation in stack or heap is determined by compiler, not code itself.

go tool compile -h, go tool link -h

Pacer: Go's default pacer will try to trigger a GC cycle every time the heap size doubles. (2X -> GOGC)

virtual memory, swap in/out, page fault

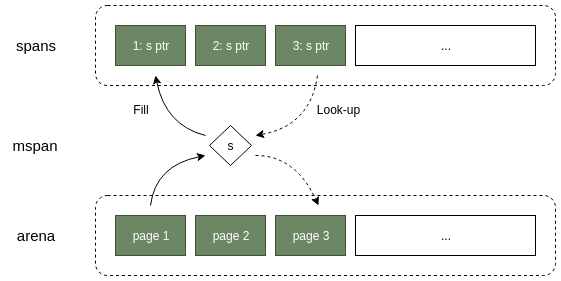

spans (look-up table: page -> span) : bitmaps (GC mark bitmap) : arena (user object allocation region)

note: runtime managed object is not allocated in arena due to its different lifetime strategy

Linux mmap, Windows VirtualAlloc

index = (object.address - arena.start) / step

// mheap.go

type mheap struct {

spans []*mspan

bitmap uintptr // Points to one byte past the end of the bitmap

bitmap_mapped uintptr

arena_start uintptr

arena_used uintptr // Set with setArenaUsed.

arena_alloc uintptr

arena_end uintptr

}arena size is set statically, the upper limit is 512GB, if beyond will raise OOM error.

according to size class rules, min_object.size = 8 bytes

status bitmap bitmaps offers 2 bits for every object: bitmap.size = arena.size / (min_object.size * 8) * 2 -> 16GB

look-up table spans is based on page: spans.size = arena.size / page.size * pointer.size -> 512MB ? (this table stores pointers, page.size = 4KB, pointer.size = 64B on 64-bit system, 32B on 32-bit system)

PROT_NONE: Pages may not be accessed. Accessing PROT_NONE memory will result in a segfault, but the memory region will be reserved and not used for any other purpose.

MEM_RESERVE: Reserves a range of the process's virtual address space without allocating any actual physical storage in memory or in the paging file on disk.

A visual guide to Go Memory Allocator from scratch

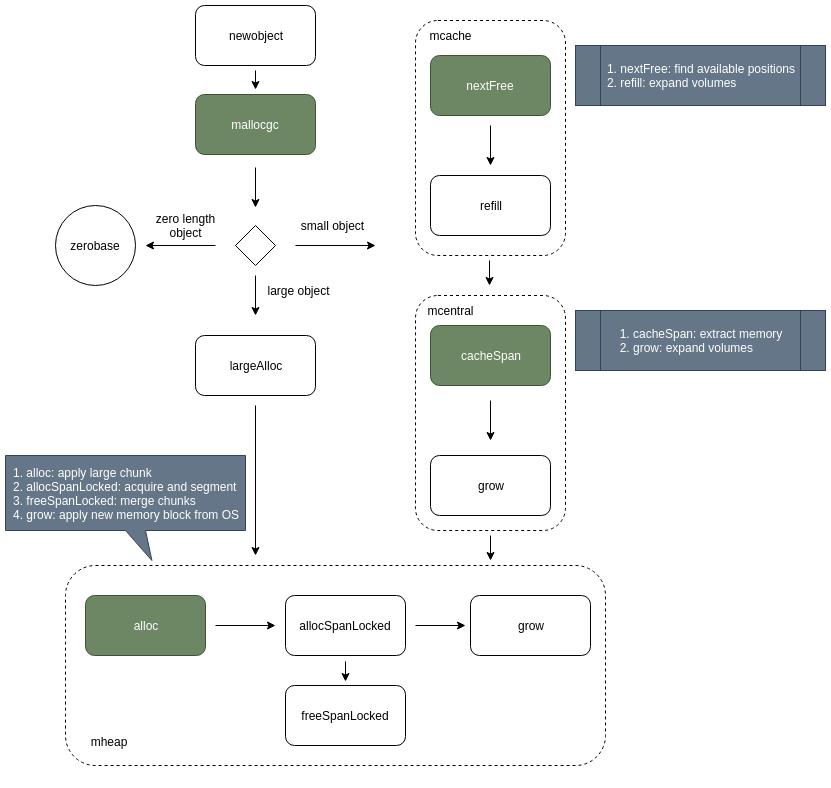

memory allocation strategy is based on length, it has 3 types: zero length, small, large objects. And small objects can be divided into tiny and small objects. It aims to reducing waste of memory.

// malloc.go

// Small objects are allocated from the per-P cache's free lists.

// Large objects (> 32 kB) are allocated straight from the heap.

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

if size == 0 {

// zero length object ...

}

if size <= maxSmallSize {

if noscan && size < maxTinySize {

// tiny object ...

} else {

// small object ...

}

} else {

// large object ...

}

}size 0 is different from nil

size 0: valid object but no real readable/writable content nil: a staff without valid allocation

since zero size object can NOT be accessed, so different types can point to the same position. But due to their memory address may be the same, one should pay extra attention when use zero size object as key.

# forbid linking

➜ mem git:(master) ✗ go build -o test -gcflags "-N -l -m" zero_size_object.go

# command-line-arguments

./zero_size_object.go:7:9: &a escapes to heap

./zero_size_object.go:4:6: moved to heap: a

./zero_size_object.go:7:13: &b escapes to heap

./zero_size_object.go:5:6: moved to heap: b

➜ mem git:(master) ✗ ./test

0x4dce30 0x4dce30

# permit linking

➜ mem git:(master) ✗ go build -o test -gcflags "-N -m" zero_size_object.go

# command-line-arguments

./zero_size_object.go:3:6: can inline test

./zero_size_object.go:10:6: can inline main

./zero_size_object.go:11:16: inlining call to test

/tmp/go-build328061627/b001/_gomod_.go:6:6: can inline init.0

./zero_size_object.go:7:9: &a escapes to heap

./zero_size_object.go:4:6: moved to heap: a

./zero_size_object.go:7:13: &b escapes to heap

./zero_size_object.go:5:6: moved to heap: b

./zero_size_object.go:11:16: main &a does not escape

./zero_size_object.go:11:16: main &b does not escape

➜ mem git:(master) ✗ ./test

0xc000048748 0xc000048748➜ mem git:(master) ✗ gdb ./test

(gdb) l

1 package main

2

3 func test() (*[0]int, *struct{}) {

4 var a [0]int

5 var b struct{}

6

7 return &a, &b

8 }

9

10 func main() {

(gdb) l

11 pa, pb := test()

12 println(pa, pb)

13 }

(gdb) b 12

Breakpoint 1 at 0x44f4f7: file /home/harold/go_tests/source_code_analysis/mem/zero_size_object.go, line 12.

(gdb) info b

Num Type Disp Enb Address What

1 breakpoint keep y 0x000000000044f4f7 in main.main

at /home/harold/go_tests/source_code_analysis/mem/zero_size_object.go:12

(gdb) r

Starting program: /home/harold/go_tests/source_code_analysis/mem/test

[New LWP 21754]

[New LWP 21755]

[New LWP 21756]

[New LWP 21757]

[New LWP 21758]

Thread 1 "test" hit Breakpoint 1, main.main ()

at /home/harold/go_tests/source_code_analysis/mem/zero_size_object.go:12

12 println(pa, pb)

(gdb) p pa

$1 = ([0]int *) 0xc000048748

(gdb) info frame

Stack level 0, frame at 0xc000048798:

rip = 0x44f4f7 in main.main

(/home/harold/go_tests/source_code_analysis/mem/zero_size_object.go:12);

saved rip = 0x424bbc

source language unknown.

Arglist at 0xc000048738, args:

Locals at 0xc000048738, Previous frame's sp is 0xc000048798

Saved registers:

rip at 0xc000048790// malloc.go

// base address for all 0-byte allocations

var zerobase uintptr

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

if size == 0 {

return unsafe.Pointer(&zerobase)

}

}large object (> 32KB) is allocated directly from mheap. these large request comes at an expenses of central lock, so only one P’s request can be served at any given point of time.

// malloc.go

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

// larger than 32KB -> large object

if size <= maxSmallSize {

} else {

systemstack(func() {

s = largeAlloc(size, needzero, noscan)

})

}

}

func largeAlloc(size uintptr, needzero bool, noscan bool) *mspan {

// large block of memory unit is in pages

npages := size >> _PageShift

if size & _PageMask != 0 {

npages++

}

// obtain memory blocks from heap

s := mheap_.alloc(npages, makeSpanClass(0, noscan), true, needzero)

return s

}free: free and available busy: used

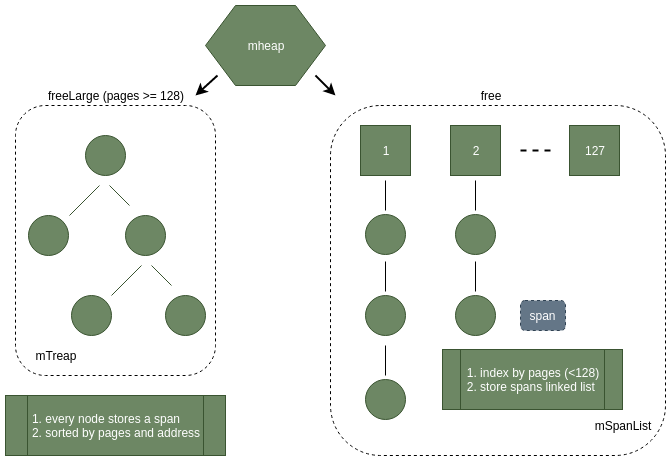

mSpanList (linked list): memory block < 128 pages mTreap (binary search tree): memory block >= 128pages

// mheap.go

type mheap struct {

free [_MaxMHeapList]mSpanList // free lists of given length up to _MaxMHeapList

freelarge mTreap // free treap of length >= _MaxMHeapList

busy [_MaxMHeapList]mSpanList // busy lists of large spans of given length

busylarge mSpanList // busy lists of large spans length >= _MaxMHeapList

}_MaxMHeapList = 1 << (20 - _PageShift) // 128, malloc.go

// mheap.go

type mSpanList struct {

first *mspan // first span in list, or nil if none

last *mspan // last span in list, or nil if none

}

type mspan struct {

next *mspan // next span in list, or nil if none

prev *mspan // previous span in list, or nil if none

}// mgclarge.go

// Each treapNode holds a single span. The treap is sorted by page size

// and for spans of the same size a secondary sort based on start address

// is done.

// Spans are returned based on a best fit algorithm and for spans of the same

// size the one at the lowest address is selected.traverse free list instead of directly access by pages index: the element with fitted condition may be empty, no matter in init or runtime, the corresponding linked list has the possibility to have no valid memory block. the best choice is keeping trying to find linked list with more pages instead of applying new memory block from OS.

if no valid in free linked list, it will keep searching in freelarge, and finally applying from OS. it will return a memory block with approximate volume in existing memory blocks.

func (h *mheap) allocSpanLocked(npage uintptr, stat *uint64) *mspan {

// traverse free linked list from specified page

for i := int(npage); i < len(h.free); i++ {

// get memory block from linked list header

list = &h.free[i]

if !list.isEmpty() {

s = list.first

list.remove(s)

goto HaveSpan

}

}

// check in freelarge tree

s = h.allocLarge(npage)

if s == nil {

// expand, apply new memory block from OS, (at least 1 MB, 128 pages)

if !h.grow(npage) {

return nil

}

// put newly applied memory block into freelarge and extract

s = h.allocLarge(npage)

}

HaveSpan:

// ... trim vacant memory ...

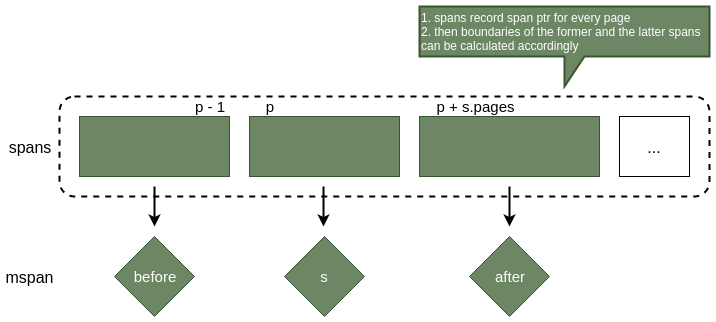

if s.npages > npage {

// create new mspan to govern trimmed memory blocke

t := (*mspan)(h.spanalloc.alloc())

t.init(s.base()+npage<<_PageShift, s.npages-npage)

s.npages = npage

// compute trimmed memory block index and modify heap.spans look-up table

p := (t.base() - h.arena_start) >> _PageShift

if p > 0 {

h.spans[p-1] = s // s_spans tail boundary

}

h.spans[p] = t // t_spans header boundary

h.spans[p+t.npages-1] = t // t_spans tail boundary

// put trimmed memory block into heap, it will cause memory block merge operation

h.freeSpanLocked(t, false, false, s.unusedsince)

}

// compute index and use span pointers to fill heap.spans look-up table

p := (s.base() - h.arena_start) >> _PageShift

for n := uintptr(0); n < npage; n++ {

h.spans[p+n] = s

}

return s

}heap.spans stores mspan (memory block governance object) pointers

neighbouring spans can be accessed through spans look-up table, if they are in free state, they will be merged. Then bigger free space can be achieved and reduce the memory fragmentation.

Go will once apply at least 1MB memory from OS, and it always is 64KB's multiples. Since this memory is not used now, OS will NOT allocate physical memory immediately.

In the former context, mspan represents memory governance object itself and span represents memory block

Allocate

Recycle

Free

UNIX-like system uses

madviseto suggest kernel to release physical memory mapping. the virtual memory is remained but physical memory is released. if reuse these virtual memory, kernel will auto replenish required physical memory.

madviseis only suggestion, it will not be really executed or executed immediately. if the physical memory is enough, some kernels will ignore this suggestion or delay executing it.Windows does NOT support similar mechanism, so need to use system API to release and re-allocate memory.

Former memory is mostly referred to user object storage.

Complied program consisted with two parts including user code and runtime.

Runtime operations require allocating memory as well.

But these runtime objects are not allocated in reserved region and have different lifetime and re-use strategy.

So they need specialized FixAlloc fixed allocator.

// mheap.go

type mheap struct {

spanalloc fixalloc // allocator for span*

cachealloc fixalloc // allocator for mcache*

treapalloc fixalloc // allocator for treapNodes* used by large objects

specialfinalizeralloc fixalloc // allocator for specialfinalizer*

specialprofilealloc fixalloc // allocator for specialprofile*

}

func (h *mheap) init(spansStart, spansBytes uintptr) {

h.treapalloc.init(unsafe.Sizeof(treapNode{}), nil, ...)

h.spanalloc.init(unsafe.Sizeof(mspan{}), recordspan, ...)

h.cachealloc.init(unsafe.Sizeof(mcache{}), nil, ...)

h.specialfinalizeralloc.init(unsafe.Sizeof(specialfinalizer{}), nil, ...)

h.specialprofilealloc.init(unsafe.Sizeof(specialprofile{}), nil, ...)

}// mfixalloc.go

// FixAlloc is a simple free-list allocator for fixed size objects.

// Malloc uses a FixAlloc wrapped around sysAlloc to manages its

// MCache and MSpan objects.

type fixalloc struct {

size uintptr

first func(arg, p unsafe.Pointer)

arg unsafe.Pointer

list *mlink // FixAlloc use early memory allocator design pattern,

// which use linked list to govern and recycle reusable memory block,

// and hold another divisible memory chunk as backup resources.

chunk uintptr // backup memory (similar with mheap in former 3-layers structure)

nchunk uint32 // backup memory divisible amount

}

func (f *fixalloc) init(size uintptr, first func(arg, p unsafe.Pointer), ...) {

f.size = size

f.first = first // linked function,

// only called when dividing new memory chunk,

// it is good for some logging job.

f.arg = arg

f.list = nil

...

}Recycle operation only put memory chunk into reusable linked list and no free operation.

Since the amount of these governance objects which use FixAlloc will not be too large, not releasing their physical memory does NOT affect too much.

// mfixalloc.go

func (f *fixalloc) free(p unsafe.Pointer) {

v := (*mlink)(p)

v.next = f.list

f.list = v

}Backup memory not only works for FixAlloc, but also frequently shows up in other runtime components.

Its data structure is simple, only stores memory address and allocation offset.

// malloc.go

type persistentAlloc struct {

base *notInHeap

off uintptr

}notInHeap is a empty struct and includes a method to calculate offset address.

type notInHeap struct{}

func (p *notInHeap) add(bytes uintptr) *notInHeap {

return (*notInHeap)(unsafe.Pointer(uintptr(unsafe.Pointer(p)) + bytes))

}P has individual backup memory and has global variable as supplement.

Every working thread (M) is binding a P.

// runtime2.go

type p struct {

palloc persistentAlloc

}

// malloc.go

var globalAlloc struct {

mutex

persistentAlloc

}So P will extract memory from its persistentAlloc first and it will apply from OS if memory is not enough.

// malloc.go

func persistentalloc1(size, align uintptr, sysStat *uint64) *notInHeap {

const (

chunk = 256 << 10 // 256KB

maxBlock = 64 << 10 // 64KB

)

// if required chunck size > 64 KB, directly apply from OS

if size >= maxBlock {

return (*notInHeap)(sysAlloc(size, sysStat))

}

// make sure backup memory location (local or global)

if mp != nil && mp.p != 0 {

persistent = &mp.p.ptr().palloc

} else {

lock(&globalAlloc.mutex)

persistent = &globalAlloc.persistentAlloc

}

// if memory is not enough, apply from OS (256 KB)

if persistent.off+size > chunk || persistent.base == nil {

persistent.base = (*notInHeap)(sysAlloc(chunk, &memstats.other_sys))

persistent.off = 0

}

// divide from backup memory

p := persistent.base.add(persistent.off)

persistent.off += size

return p

}directly use sysAlloc when apply memory from OS, it will avoid the reserved region where memory allocator use.

// mem_linux.go

func sysAlloc(n uintptr, sysStat *uint64) unsafe.Pointer {

p, err := mmap(nil, n, _PROT_READ|_PROT_WRITE, _MAP_ANON|_MAP_PRIVATE, -1, 0)

}apart from heap.sysAlloc assign arena_alloc as start address, backup memory sysAlloc function has nil as its address argument, it will be determined by kernel.

// malloc.go

func (h *mheap) sysAlloc(n uintptr) unsafe.Pointer {

p := h.arena_alloc

sysMap(unsafe.Pointer(p), n, h.arena_reserved, &memstats.heap_sys)

}when create (no re-use) new mspan instance, store it into heap.allspans list.

this list is used to traverse allocated chunk in mgcmark and heapdump.

type mheap struct {

allspans []*mspan // all spans out there

}

// recordspan adds a newly allocated span to h.allspans.

//

// This only happens the first time a span is allocated from

// mheap.spanalloc (it is not called when a span is reused).

func recordspan(vh unsafe.Pointer, p unsafe.Pointer) {

h := (*mheap)(vh)

s := (*mspan)(p)

// if allspans is full, expand its capacity

if len(h.allspans) >= cap(h.allspans) {

// ...

}

// add mspan into allspans

h.allspans = h.allspans[:len(h.allspans)+1]

h.allspans[len(h.allspans)-1] = s

}