Last active June 20, 2022 07:47

Save TyeolRik/8163a43d38213a811e72da7d41961a54 to your computer and use it in GitHub Desktop.

| #!/bin/bash | |

| NC='\033[0m' # No Color | |

| YELLOW='\033[1;33m' | |

| sudo dnf update -y &&\ | |

| sudo dnf install -y kernel-devel kernel-headers make gcc elfutils-libelf-devel | |

| printf "${YELLOW}Installing Docker${NC}\n" | |

| sudo yum install -y yum-utils telnet &&\ | |

| sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo &&\ | |

| sudo yum install -y --allowerasing git docker-ce docker-ce-cli containerd.io docker-compose-plugin &&\ | |

| sudo systemctl enable docker.service &&\ | |

| sudo systemctl start docker.service &&\ | |

| printf "${YELLOW}Docker install complete${NC}\n" | |

| # https://docs.docker.com/engine/install/linux-postinstall/ | |

| printf "${YELLOW}Get Permissions as recommended\n${NC}" | |

| sudo usermod -aG docker test | |

| sudo chmod 666 /var/run/docker.sock # Workaround for a permission problem: the 'test' account could not use its docker group membership | |

| printf "${YELLOW}Open Port${NC}\n" | |

| sudo firewall-cmd --zone=public --permanent --add-port 443/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 6443/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 2379/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 2380/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 8080/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 10250/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 10259/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 10257/tcp | |

| sudo firewall-cmd --zone=public --permanent --add-port 179/tcp # Calico networking (BGP) | |

| sudo firewall-cmd --zone=public --permanent --add-port 3300/tcp # CEPH | |

| sudo firewall-cmd --zone=public --permanent --add-port 6789/tcp # CEPH | |

| sudo firewall-cmd --reload | |

| sudo firewall-cmd --state | |

| sudo systemctl stop firewalld | |

| sudo systemctl status firewalld | |

| sudo systemctl disable firewalld | |

| sudo systemctl status firewalld | |

| sudo systemctl mask --now firewalld | |

| printf "${YELLOW}Check br_netfilter${NC}\n" | |

| printf "br_netfilter\n" | sudo tee /etc/modules-load.d/k8s.conf | |

| printf "net.bridge.bridge-nf-call-ip6tables = 1\nnet.bridge.bridge-nf-call-iptables = 1\n" | sudo tee /etc/sysctl.d/k8s.conf | |

| sudo sysctl --system | |

| printf "${YELLOW}SELinux permissive${NC}\n" | |

| sudo setenforce 0 | |

| sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config | |

| printf "${YELLOW}Swap off${NC}\n" | |

| sudo swapon && sudo cat /etc/fstab | |

| sudo swapoff -a && sudo sed -i '/swap/s/^/#/' /etc/fstab | |

| printf "${YELLOW}Install Kubernetes${NC}\n" | |

| sudo cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo | |

| [kubernetes] | |

| name=Kubernetes | |

| baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64 | |

| enabled=1 | |

| gpgcheck=1 | |

| repo_gpgcheck=1 | |

| gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg | |

| EOF | |

| sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes | |

| sudo systemctl enable --now kubelet && sudo systemctl restart kubelet | |

| printf "${YELLOW}Configure cgroup driver${NC}\n" | |

| sudo mkdir -p /etc/docker | |

| sudo cat <<EOF | sudo tee /etc/docker/daemon.json | |

| { | |

| "exec-opts": ["native.cgroupdriver=systemd"], | |

| "log-driver": "json-file", | |

| "log-opts": { | |

| "max-size": "100m" | |

| }, | |

| "storage-driver": "overlay2" | |

| } | |

| EOF | |

| sudo systemctl enable docker | |

| sudo systemctl daemon-reload | |

| sudo systemctl restart docker | |

| # Due to Error: "getting status of runtime: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService" | |

| sudo rm -f /etc/containerd/config.toml | |

| sudo systemctl restart containerd | |

| sudo kubeadm init | |

| mkdir -p $HOME/.kube | |

| sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config | |

| sudo chown $(id -u):$(id -g) $HOME/.kube/config | |

| sudo chmod 777 /etc/kubernetes/admin.conf | |

| export KUBECONFIG=/etc/kubernetes/admin.conf | |

| kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')" # CNI - Weave | |

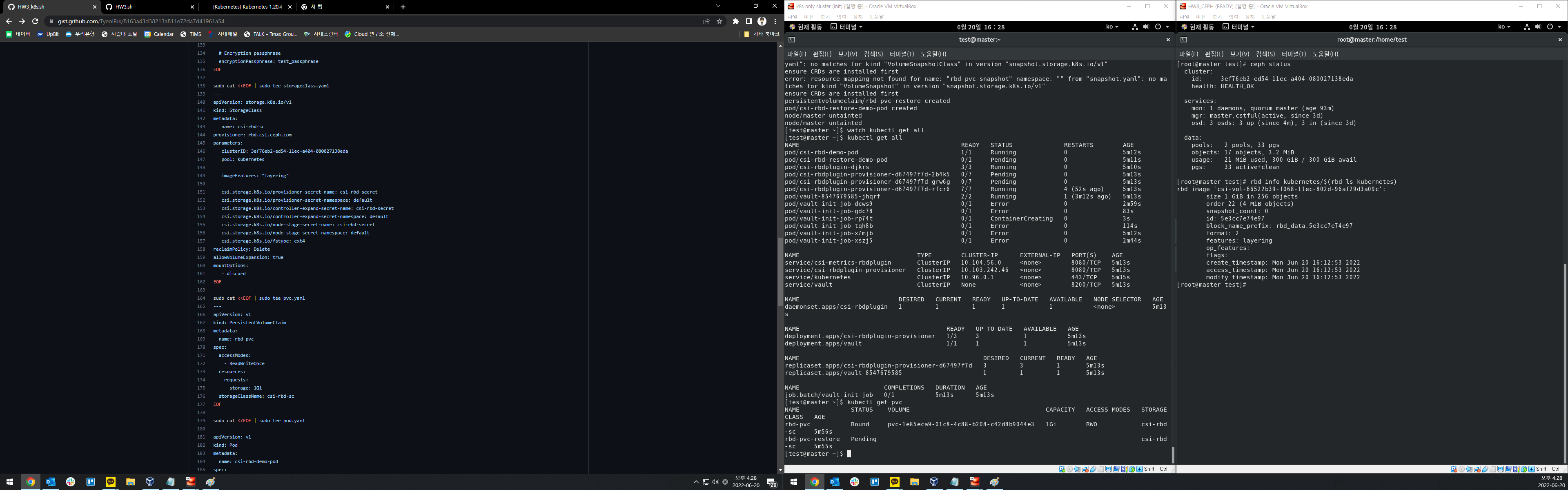

| # New Trial | |

| git clone https://github.com/ceph/ceph-csi.git | |

| kubectl apply -f ./ceph-csi/examples/ceph-conf.yaml | |

| cd ceph-csi/deploy/rbd/kubernetes/ | |

| sudo cat <<EOF | sudo tee csi-config-map.yaml | |

| --- | |

| apiVersion: v1 | |

| kind: ConfigMap | |

| data: | |

|   config.json: |- | |

|     [ | |

|       { | |

|         "clusterID": "3ef76eb2-ed54-11ec-a404-080027138eda", | |

|         "monitors": [ | |

|           "10.0.2.12:6789", | |

|           "10.0.2.12:3300" | |

|         ] | |

|       } | |

|     ] | |

| metadata: | |

|   name: ceph-csi-config | |

| EOF | |

| sudo cat <<EOF | sudo tee secret.yaml | |

| --- | |

| apiVersion: v1 | |

| kind: Secret | |

| metadata: | |

|   name: csi-rbd-secret | |

|   namespace: default | |

| stringData: | |

|   # Key values correspond to a user name and its key, as defined in the | |

|   # ceph cluster. User ID should have required access to the 'pool' | |

|   # specified in the storage class | |

|   userID: kubernetes | |

|   userKey: AQAh1qtiEGb6AxAAM6DyB6sMYnJlAvK8LtX1yA== | |

|   # Encryption passphrase | |

|   encryptionPassphrase: test_passphrase | |

| EOF | |

| sudo cat <<EOF | sudo tee storageclass.yaml | |

| --- | |

| apiVersion: storage.k8s.io/v1 | |

| kind: StorageClass | |

| metadata: | |

|   name: csi-rbd-sc | |

| provisioner: rbd.csi.ceph.com | |

| parameters: | |

|   clusterID: 3ef76eb2-ed54-11ec-a404-080027138eda | |

|   pool: kubernetes | |

|   imageFeatures: "layering" | |

|   csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret | |

|   csi.storage.k8s.io/provisioner-secret-namespace: default | |

|   csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret | |

|   csi.storage.k8s.io/controller-expand-secret-namespace: default | |

|   csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret | |

|   csi.storage.k8s.io/node-stage-secret-namespace: default | |

|   csi.storage.k8s.io/fstype: ext4 | |

| reclaimPolicy: Delete | |

| allowVolumeExpansion: true | |

| mountOptions: | |

|   - discard | |

| EOF | |

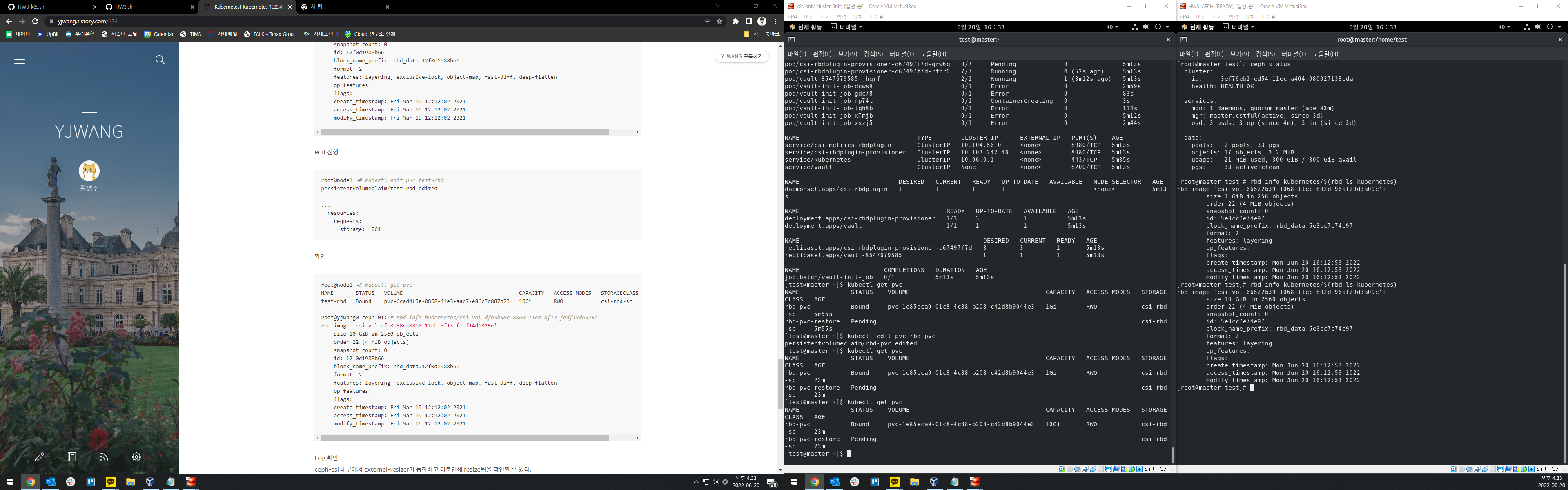

| sudo cat <<EOF | sudo tee pvc.yaml | |

| --- | |

| apiVersion: v1 | |

| kind: PersistentVolumeClaim | |

| metadata: | |

|   name: rbd-pvc | |

| spec: | |

|   accessModes: | |

|     - ReadWriteOnce | |

|   resources: | |

|     requests: | |

|       storage: 1Gi | |

|   storageClassName: csi-rbd-sc | |

| EOF | |

| sudo cat <<EOF | sudo tee pod.yaml | |

| --- | |

| apiVersion: v1 | |

| kind: Pod | |

| metadata: | |

|   name: csi-rbd-demo-pod | |

| spec: | |

|   containers: | |

|     - name: web-server | |

|       image: docker.io/library/nginx:latest | |

|       volumeMounts: | |

|         - name: mypvc | |

|           mountPath: /var/lib/www/html | |

|   volumes: | |

|     - name: mypvc | |

|       persistentVolumeClaim: | |

|         claimName: rbd-pvc | |

|         readOnly: false | |

| EOF | |

| sudo cat <<EOF | sudo tee snapshotclass.yaml | |

| --- | |

| apiVersion: snapshot.storage.k8s.io/v1 | |

| kind: VolumeSnapshotClass | |

| metadata: | |

|   name: csi-rbdplugin-snapclass | |

| driver: rbd.csi.ceph.com | |

| parameters: | |

|   clusterID: 3ef76eb2-ed54-11ec-a404-080027138eda | |

|   csi.storage.k8s.io/snapshotter-secret-name: csi-rbd-secret | |

|   csi.storage.k8s.io/snapshotter-secret-namespace: default | |

| deletionPolicy: Delete | |

| EOF | |

| sudo cat <<EOF | sudo tee snapshot.yaml | |

| --- | |

| apiVersion: snapshot.storage.k8s.io/v1 | |

| kind: VolumeSnapshot | |

| metadata: | |

|   name: rbd-pvc-snapshot | |

| spec: | |

|   volumeSnapshotClassName: csi-rbdplugin-snapclass | |

|   source: | |

|     persistentVolumeClaimName: rbd-pvc | |

| EOF | |

| sudo cat <<EOF | sudo tee pvc-restore.yaml | |

| --- | |

| apiVersion: v1 | |

| kind: PersistentVolumeClaim | |

| metadata: | |

|   name: rbd-pvc-restore | |

| spec: | |

|   storageClassName: csi-rbd-sc | |

|   dataSource: | |

|     name: rbd-pvc-snapshot | |

|     kind: VolumeSnapshot | |

|     apiGroup: snapshot.storage.k8s.io | |

|   accessModes: | |

|     - ReadWriteOnce | |

|   resources: | |

|     requests: | |

|       storage: 1Gi | |

| EOF | |

| sudo cat <<EOF | sudo tee pod-restore.yaml | |

| --- | |

| apiVersion: v1 | |

| kind: Pod | |

| metadata: | |

|   name: csi-rbd-restore-demo-pod | |

| spec: | |

|   containers: | |

|     - name: web-server | |

|       image: docker.io/library/nginx:latest | |

|       volumeMounts: | |

|         - name: mypvc | |

|           mountPath: /var/lib/www/html | |

|   volumes: | |

|     - name: mypvc | |

|       persistentVolumeClaim: | |

|         claimName: rbd-pvc-restore | |

|         readOnly: false | |

| EOF | |

| cd $HOME/ceph-csi/examples/rbd/ | |

| sh plugin-deploy.sh | |

| cd $HOME/ceph-csi/deploy/rbd/kubernetes/ | |

| kubectl create -f secret.yaml | |

| kubectl create -f storageclass.yaml | |

| kubectl create -f pvc.yaml | |

| kubectl create -f pod.yaml | |

| kubectl create -f snapshotclass.yaml | |

| kubectl create -f snapshot.yaml | |

| kubectl create -f pvc-restore.yaml | |

| kubectl create -f pod-restore.yaml | |

| # Reference: https://yjwang.tistory.com/124 | |

| kubectl taint nodes --all node-role.kubernetes.io/master- | |

| kubectl taint nodes --all node-role.kubernetes.io/control-plane- | |

| watch kubectl get all |
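
The script turns swap off before installing Kubernetes, and the kubelet by default refuses to start while swap is enabled. Below is a minimal sketch of a check that could run just before `sudo kubeadm init`. `swap_is_off` is a hypothetical helper name, not part of the original script, and it assumes the usual `/proc/swaps` layout: one header line, then one line per active swap area.

```shell
#!/bin/bash
# swap_is_off: hypothetical helper, not part of the original script.
# Succeeds (exit status 0) when the given swaps file lists no active swap area.
# Assumes the /proc/swaps layout: one header line, then one line per area.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    local active
    # Skip the header line and count the remaining non-empty lines.
    active="$(tail -n +2 "$swaps_file" | grep -c .)"
    [ "$active" -eq 0 ]
}
```

It could be called as `swap_is_off || exit 1`, so the script stops early instead of failing later inside `kubeadm init`.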

Author

ERROR: Permission denied on lines 43 and 44.

However, the script still works correctly.
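
A "permission denied" error of this kind is typical for commands of the form `sudo printf ... > /etc/some-file`: the calling shell performs the redirection before `sudo` runs, so the file is opened without root privileges. Below is a minimal sketch of the usual workaround, piping into `tee` under `sudo`, which is the idiom the script already uses for its heredocs. `write_privileged` and the `ELEVATE` variable are hypothetical names introduced here for illustration.

```shell
#!/bin/bash
# write_privileged: hypothetical helper, not part of the original script.
# Writes one line of text to a file through an elevating command.
# ELEVATE is an assumed variable; when unset it defaults to "sudo".
# Setting it to an empty string runs tee without elevation (useful for testing).
write_privileged() {
    local path="$1" content="$2"
    # tee opens the target file itself, so tee (run via $ELEVATE) is the
    # process that needs write permission, not the calling shell.
    printf '%s\n' "$content" | ${ELEVATE-sudo} tee "$path" > /dev/null
}

# Example call (commented out because it needs root):
# write_privileged /etc/modules-load.d/k8s.conf "br_netfilter"
```

Only the discarded copy that `tee` echoes to standard output is redirected by the unprivileged shell; the write to the target file happens inside the elevated `tee` process.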