Below you have some articles related about prompting and some papers

GPT can [tell bad jokes](https://zapier.com/blog/can-chatgpt-be-funny) and write accidentally hilarious poems about your life, but it can also help you do your job better. The catch: you need to help it do _its_ job better, too.At its most basic level, OpenAI's GPT-3 and GPT-4 predict text based on an input called a prompt. But to get the best results, you need to write a clear prompt with ample context. After tinkering with it for more hours than I'd like to admit, these are my tips for writing an effective GPT-3 or GPT-4 prompt.

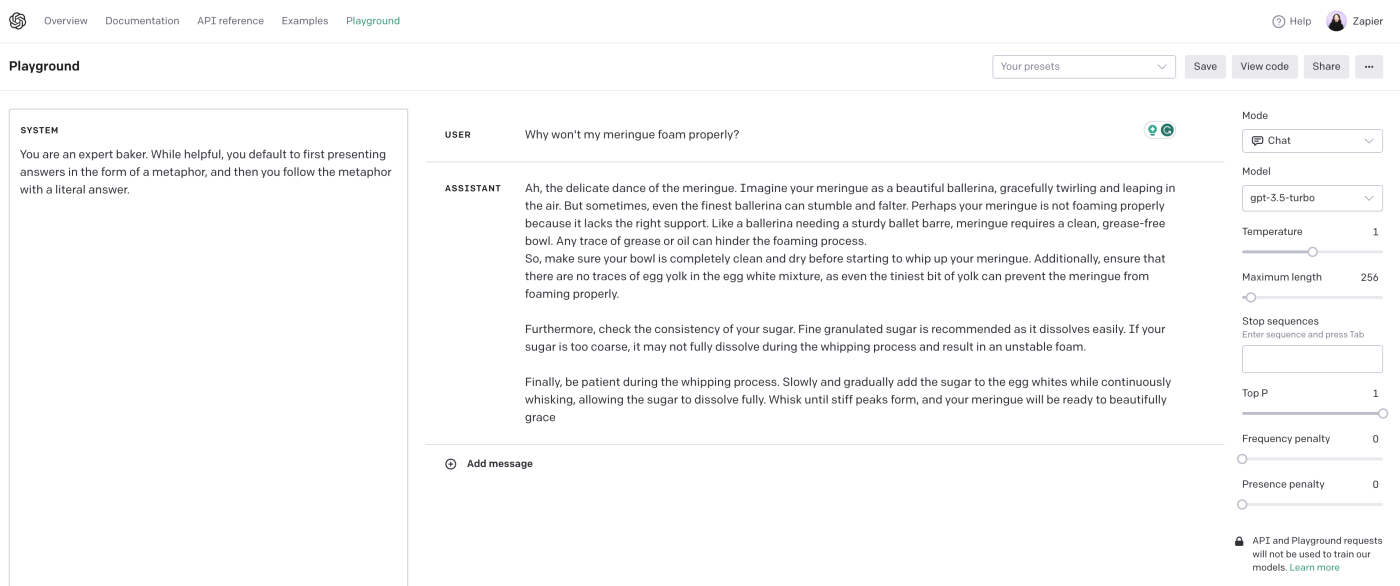

There's very little chance that the first time you put your AI prompt in, it'll spit out exactly what you're looking for. You need to write, test, refine, test, and so on, until you consistently get an outcome you're happy with. I recommend testing your prompt in the OpenAI playground or with Zapier's OpenAI integration.

GPT vs. ChatGPT. GPT-3 and GPT-4 aren't the same as ChatGPT. ChatGPT, the conversation bot that you've been hanging out with on Friday nights, has more instructions built in from OpenAI. GPT-3 and GPT-4, on the other hand, are a more raw AI that can take instructions more openly from users. The tips here are for GPT-3 and GPT-4—but they can apply to your ChatGPT prompts, too.

As you're testing, you'll see a bunch of variables—things like model, temperature, maximum length, stop sequences, and more. It can be a lot to get the hang of, so to get started, I suggest playing with just two of them.

-

Temperature allows you to control how creative you want the AI to be (on a scale of 0 to 1). A lower score makes the bot less creative and more likely to say the same thing given the same prompt. A higher score gives the bot more flexibility and will cause it to write different responses each time you try the same prompt. The default of 0.7 is pretty good for most use cases.

-

Maximum length is a control of how long the combined prompt and response can be. If you notice the AI is stopping its response mid-sentence, it's likely because you've hit your max length, so increase it a bit and test again.

Help the bot help you. If you do each of the things listed below—and continue to refine your prompt—you should be able to get the output you want.

Just like humans, AI does better with context. Think about exactly what you want the AI to generate, and provide a prompt that's tailored specifically to that.

Here are a few examples of ways you can improve a prompt by adding more context:

Basic prompt: "Write about productivity."

Better prompt: "Write a blog post about the importance of productivity for small businesses."

By including the type of content ("blog") as well as some details on what specifically to cover in the blog post, the bot will be a lot more helpful.

Here's another example, this time with different types of details.

Basic prompt: "Write about how to house train a dog."

Better prompt: "As a professional dog trainer, write an email to a client who has a new 3-month-old Corgi about the activities they should do to house train their puppy."

In the better prompt, we ask the AI to take on a specific role ("dog trainer"), and we offer specific context around the age and type of dog. We also, like in the previous example, tell them what type of content we want ("email").

The AI can change the writing style of its output, too, so be sure to include context about that if it matters for your use case.

Basic prompt: "Write a poem about leaves falling."

Better prompt: "Write a poem in the style of Edgar Allan Poe about leaves falling."

This can be adapted to all sorts of business tasks (think: sales emails), too—e.g., "write a professional but friendly email" or "write a formal executive summary."

Let's say you want to write a speaker's introduction for yourself: how is the AI supposed to know about you? It's not that smart (yet). But you can give it the information it needs, so it can reference it directly. For example, you could copy your resume or LinkedIn profile and paste it at the top of your prompt like this:

Reid's resume: [paste full resume here]

Given the above information, write a witty speaker bio about Reid.

Another common use case is getting the AI to summarize an article for you. Here's an example of how you'd get OpenAI's GPT-3 to do that effectively.

[Paste the full text of the article here]

Summarize the content from the above article with 5 bullet points.

Remember that GPT-3 and GPT-4 only have access to things published prior to 2021, and they have no internet access. This means you shouldn't expect it to be up to date with recent events, and you can't give it a URL to read from. While it might appear to work sometimes, it's actually just using the text within the URL itself (as well as its memory of what's typically on that domain) to generate a response.

(The exception here is if you're using ChatGPT Plus and have turned on access to its built-in Bing web browser.)

Providing examples in the prompt can help the AI understand the type of response you're looking for (and gives it even more context).

For example, if you want the AI to reply to a user's question in a chat-based format, you might include a previous example conversation between the user and the agent. You'll want to end your prompt with "Agent:" to indicate where you want the AI to start typing. You can do so by using something like this:

You are an expert baker answering users' questions. Reply as agent.

Example conversation:

User: Hey can you help me with something

Agent: Sure! What do you need help with?

User: I want to bake a cake but don't know what temperature to set the oven to.

Agent: For most cakes, the oven should be preheated to 350°F (177°C).

Current conversation:

User: [Insert user's question]

Agent:

Examples can also be helpful for math, coding, parsing, and anything else where the specifics matter a lot. If you want to use OpenAI to format a piece of data for you, it'll be especially important to give it an example. Like this:

Example:

Input: 2020-08-01T15:30:00Z

Add 3 days and convert the following time stamp into MMM/DD/YYYY HH:MM:SS format

Output: Aug/04/2020 15:30:00

Input: 2020-07-11T12:18:03.934Z

Output:

Providing positive examples (those that you like) can help guide the AI to deliver similar results. But you can also tell it what to avoid by showing it negative examples—or even previous results it generated for you that you didn't like.

Basic prompt: Write an informal customer email for a non-tech audience about how to use Zapier Interfaces.

Better prompt: Write a customer email for a non-tech audience about how to use Zapier Interfaces. It should not be overly formal. This is a "bad" example of the type of copy you should avoid: [Insert bad example].

When crafting your GPT prompts, It's helpful to provide a word count for the response, so you don't get a 500-word answer when you're looking for a sentence (or vice versa). You might even use a range of acceptable lengths.

For example, if you want a 500-word response, you could provide a prompt like "Write a 500-750-word summary of this article." This gives the AI the flexibility to generate a response that's within the specified range. You can also use less precise terms like "short" or "long."

Basic prompt: "Summarize this article."

Better prompt: "Write a 500-word summary of this article."

GPT can output various code languages like Python and HTML as well as visual styles like charts and CSVs. Telling it the format of both your input and your desired output will help you get exactly what you need. For example:

Product Name,Quantity

Apple,1

Orange,2

Banana,1

Kiwi,1

Pineapple,2

Jackfruit,1

Apple,2

Orange,1

Banana,1

Using the above CSV, output a chart of the frequency each product appears in the text above.

It's easy to forget to define the input format (in this case, CSV), so be sure to double-check that you've done that.

Another example: perhaps you want to add the transcript of your latest podcast interview to your website but need it converted to HTML. The AI is great at doing this, but you need to tell it exactly what you need.

[Insert full text of an interview transcript]

Output the above interview in HTML.

Another effective strategy for creating powerful prompts is to have the AI do it for you. That's not a joke: you can ask GPT to craft the ideal prompt based on your specific needs—and then reuse it on itself.

The idea here is to use the AI model as a brainstorming tool, employing its knowledge base and pattern recognition capabilities to generate prompt ideas that you might not have considered.

To do this, you just need to frame your request as clearly and specifically as possible—and detail the parameters of your needs. For example, say you want GPT to help you understand error messages when something goes wrong on your computer.

Basic prompt: I'm looking to create a prompt that explains an error message.

Better prompt: I'm looking to create a prompt for error messages. I have a few needs: I need to understand the error, I need the main components of the error broken down, and I need to know what's happened sequentially leading up to the error, its possible root causes, and recommended next steps—and I need all this info formatted in bullet points.

GPT will take those requirements into consideration and return a prompt that you can then use on it—it's the circle of (artificial) life.

Sometimes it's just about finding the exact phrase that OpenAI will respond to. Here are a few phrases that folks have found work well with OpenAI to achieve certain outcomes.

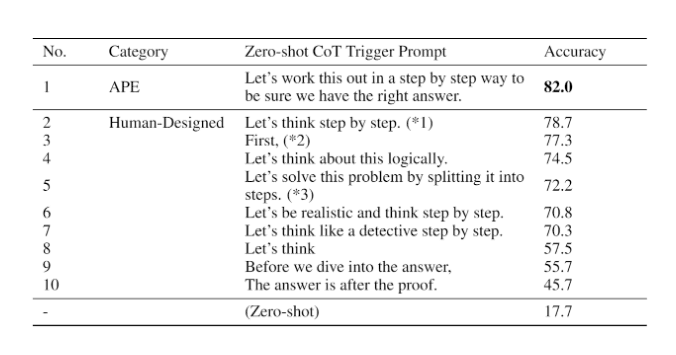

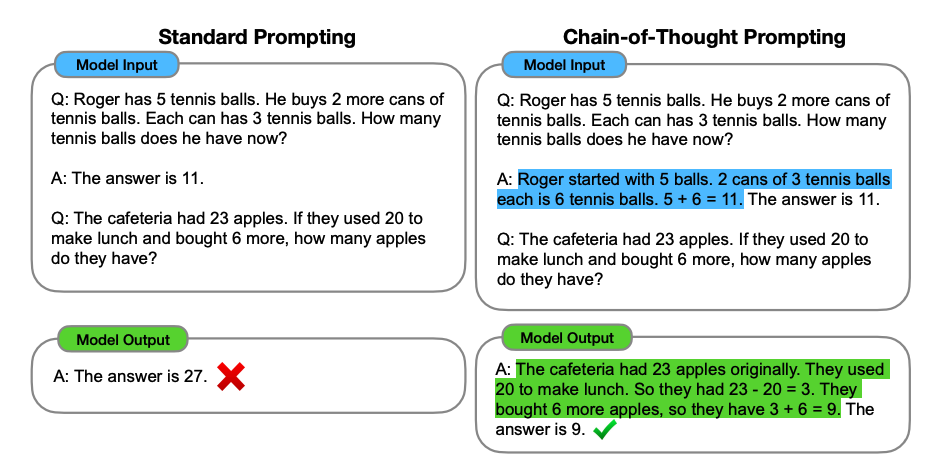

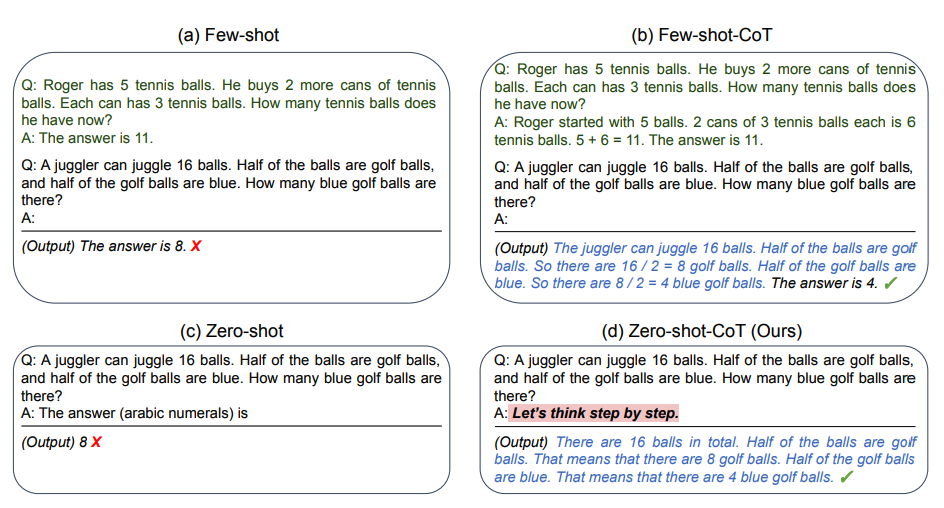

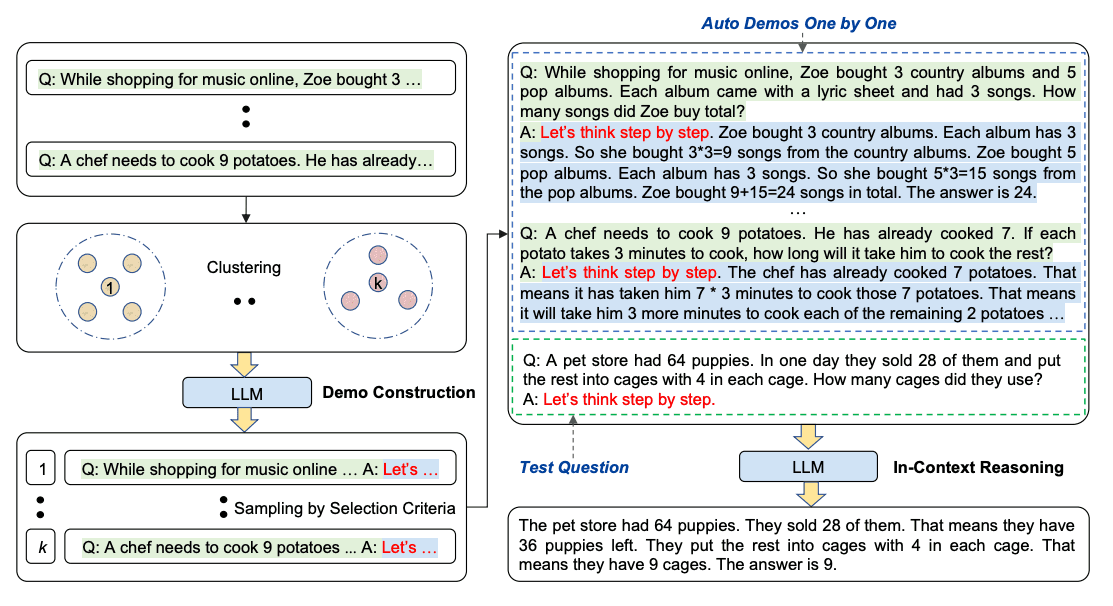

"Let's think step by step"

This makes the AI think logically and can be specifically helpful with math problems.

"Thinking backwards"

This can help if the AI keeps arriving at inaccurate conclusions.

"In the style of [famous person]"

This will help match styles really well.

"As a [insert profession/role]"

This helps frame the bot's knowledge, so it knows what it knows—and what it doesn't.

"Explain this topic for [insert specific age group]"

Defining your audience and their level of understanding of a certain topic will help the bot respond in a way that's suitable for the target audience.

"For the [insert company/brand publication]"

This helps GPT understand which company you're writing or generating a response for, and can help it adjust its voice and tone accordingly.

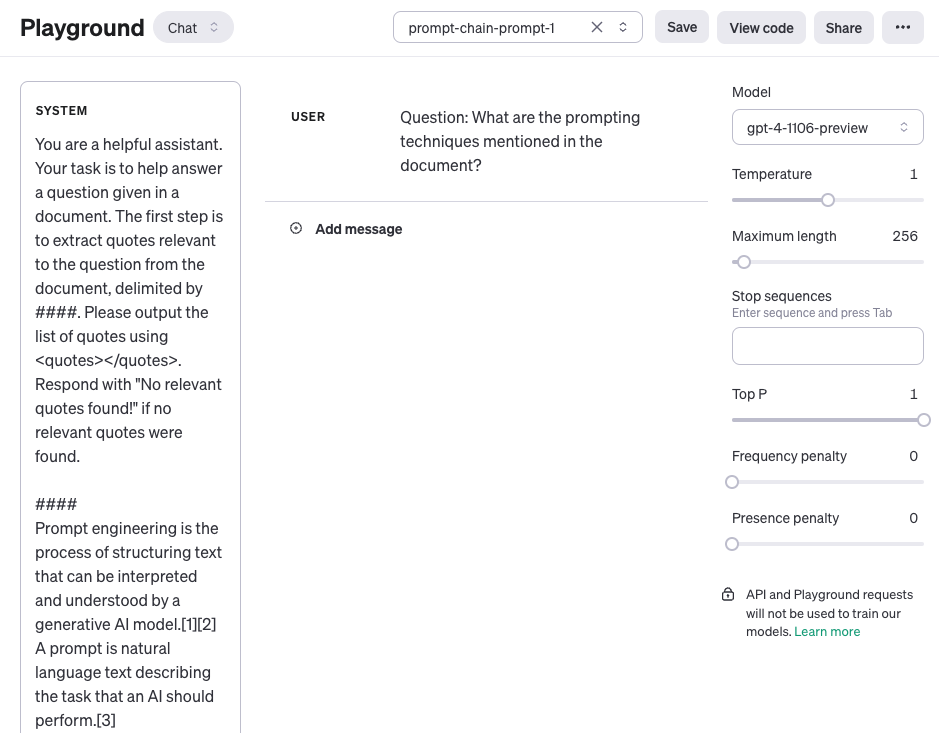

GPT can respond from a designated point of view (e.g., market researcher or expert in solar technologies) or in a specific coding language without you having to repeat these instructions every time you interact with it.

To do this, in the OpenAI playground, modify the System prompt from its default "You are a helpful assistant" to whatever you want GPT to be. For example:

You are an expert baker. While helpful, you default to first presenting answers in the form of a metaphor, and then you follow the metaphor with a literal answer.

By giving GPT a role, you're automatically adding persistent context to any future prompts you input.

If you're using ChatGPT, you can do something similar with custom instructions. Simply tell ChatGPT the reference material or instructions you want it to consider every time it generates a response, and you're set.

Now that you know how to write an effective prompt, it's time to put that skill to use in your workflows. With Zapier's OpenAI and ChatGPT integrations, you can automate your prompts, so they run whenever things happen in the apps you use most. That way, you can do things like automatically draft email responses, brainstorm content ideas, or create task lists. Here are a few pre-made workflows to get you started.

Slack + ChatGPT

More details

Ready to bring the power of ChatGPT to your Slack workspace? Use this Zap to create a reply bot that lets you have a conversation with ChatGPT right inside Slack when a prompt is posted in a particular channel. Now, your team can ask questions and get responses without having to leave Slack.

Dropbox + PDF.co + ChatGPT

More details

Whenever a new file is added to a Dropbox folder, PDF.co will convert it to a PDF automatically. Afterwards get the document summary with the help of ChatGPT.

Slack + ChatGPT + Notion

More details

This Zapier template automates the process of task creation in Notion's kanban board using ChatGPT and Slack reactions. When a user adds a specific reaction to a message in Slack, ChatGPT generates a task from the message and adds it to Notion. The template then adds the task to the designated kanban board, making it easy to track and manage. This template streamlines task management and saves valuable time for teams.

Gmail + OpenAI (GPT-3, DALL-E, Whisper)

More details

Whenever you receive a new customer email, create different email responses with OpenAI and save them automatically as Gmail drafts. This Zap lets you send your prospects and clients better-worded, faster email responses without any added clicks or keystrokes.

Zapier Chrome extension + OpenAI (GPT-3, DALL-E, Whisper) + Web Parser by Zapier

More details

Take control of your reading list with this AI automation. With this Zap, you can select an article right from your browser and automatically get a summary of the content from OpenAI. Catch up on industry news or create content for an email newsletter with just a few clicks.

Or you can use Zapier and GPT to build your own AI chatbot. Zapier's free AI chatbot builder uses the power of GPT to help you customize your own chatbot—no coding required.

Zapier is the leader in workflow automation—integrating with 6,000+ apps from partners like Google, Salesforce, and Microsoft. Use interfaces, data tables, and logic to build secure, automated systems for your business-critical workflows across your organization's technology stack. Learn more.

And here are some deeper dives into how you can automate your GPT-3 and GPT-4 prompts:

Want to build GPT-powered apps for your clients or coworkers? Zapier's new Interfaces product makes it easy to create dynamic web pages that trigger automated workflows.

This article was originally published in January 2023 and has also had contributions from Elena Alston and Jessica Lau. The most recent update was in August 2023.

Generative AI is finally coming into its own as a tool for research, learning, creativity and interaction. The key to a modern generative AI interface is the prompt: the request that users compose to query an AI system in an attempt to elicit a desirable response.But AI has its limits, and getting the right answer means asking the right question. AI systems lack the insight and intuition to actually understand users' needs and wants. Prompts require careful wording, proper formatting and clear details. Often, prompts should avoid much of the slang, metaphors and social nuance that humans take for granted in everyday conversation.

Getting the most from a generative AI system requires expertise in creating and manipulating prompts. Here are some ideas that can help users construct better prompts for AI interactions.

A prompt is a request made by a human to a generative AI system, often a large language model.

Many prompts are simply plain-language questions, such as "What was George Washington's middle name?" or "What time is sunset today in the city of Boston?" In other cases, a prompt can result in tangible outcomes, such as the command "Set the thermostat in the house to 72 degrees Fahrenheit" for a smart home system.

But prompts can also involve far more complicated and detailed requests. For example, a user might request a 2,000-word explanation of marketing strategies for new computer games, a report for a work project, or pieces of artwork and music on a specific topic.

Prompts can grow to dozens or even hundreds of words that detail the user's request, set formats and limits, and include guiding examples, among other parameters. The AI system's front-end interface parses the prompt into actionable tasks and parameters, then uses those extracted elements to access data and perform tasks that meet the user's request within the limits of the system's underlying models and data set.

At a glance, the idea of a prompt is easy to understand. For example, many users interact with online chatbots and other AI entities by asking and answering questions to resolve problems, place orders, request services or perform other simple business transactions.

However, complex prompts can easily become large, highly structured and exquisitely detailed requests that elicit very specific responses from the model. This high level of detail and precision often requires the extensive expertise provided by a type of AI professional called a prompt engineer.

A successful prompt engineer has the following characteristics:

- A working knowledge of the AI system's underlying model architecture.

- An understanding of the contents and limitations of the training data set.

- The ability to extend the data set and use new data to fine-tune the AI system.

- Detailed knowledge of the AI system's prompt interface mechanics, including formatting and parsing methodologies.

Ultimately, a prompt engineer is well versed in creating and refining effective prompts for complex and detailed AI requests. But regardless of your level of prompt engineering experience and knowledge, the following 10 tips can help you improve the quality and results of your AI prompts.

<iframe id="ytplayer-0" src="https://www.youtube.com/embed/Bq-ncjOGeVU?autoplay=0&modestbranding=1&rel=0&widget_referrer=https://www.techtarget.com/searchenterpriseai/tip/Prompt-engineering-tips-and-best-practices&enablejsapi=1&origin=https://www.techtarget.com" type="text/html" height="360" width="640" frameborder="0" data-gtm-yt-inspected-45516328_67="true" title="What is Prompt Engineering? (in about a minute)" data-gtm-yt-inspected-83="true" data-gtm-yt-inspected-88="true"></iframe>Any user can prompt an AI tool, but creating good prompts takes some knowledge and skill. The user must have a clear perspective of the answer or result they seek and possess a thorough understanding of the AI system, including the nuances of its interface and its limitations.

This is a broader challenge than it might initially seem. Keep the following guidelines in mind when creating prompts for generative AI tools.

Successful prompt engineering is largely a matter of knowing what questions to ask and how to ask them effectively. But this means nothing if the user doesn't know what they want in the first place.

Before a user ever interacts with an AI tool, it's important to define the goals for the interaction and develop a clear outline of the anticipated results beforehand. Plan it out: Decide what to achieve, what the audience should know and any associated actions that the system must perform.

AI systems can work with simple, direct requests using casual, plain-language sentences. But complex requests will benefit from detailed, carefully structured queries that adhere to a form or format that is consistent with the system's internal design.

This kind of knowledge is essential for prompt engineers. Form and format can differ for each model, and some tools, such as art generators, might have a preferred structure that involves using keywords in predictable locations. For example, the company Kajabi recommends a prompt format similar to the following for its conversational AI assistant, Ama:

"Act like" + "write a" + "define an objective" + "define your ideal format"

A sample prompt for a text project, such as a story or report, might resemble the following:

Act like a history professor who is writing an essay for a college class to provide a detailed background on the Spanish-American War using the style of Mark Twain.

AI is neither psychic nor telepathic. It's not your best buddy and it has not known you since elementary school; the system can only act based on what it can interpret from a given prompt.

Form clear, explicit and actionable requests. Understand the desired outcome, then work to describe the task that needs to be performed or articulate the question that needs to be answered.

For example, a simple question such as "What time is high tide?" is an ineffective prompt because it lacks essential detail. Tides vary by day and location, so the model would not have nearly enough information to provide a correct answer. A much clearer and more specific query would be "What times are high tides in Gloucester Harbor, Massachusetts, on August 31, 2023?"

Prompts might be subject to minimum and maximum character counts. Many AI interfaces don't impose a hard limit, but extremely long prompts can be difficult for AI systems to handle.

AI tools often struggle to parse long prompts due to the complexity involved in organizing and prioritizing the essential elements of a lengthy request. Recognize any word count limitations for a given AI and make the prompt only as long as it needs to be to convey all the required parameters.

Like any computer system, AI tools can be excruciatingly precise in their use of commands and language, including not knowing how to respond to unrecognized commands or language.

The most effective prompts use clear and direct wording. Avoid ambiguity, colorful language, metaphors and slang, all of which can produce unexpected and undesirable results.

However, ambiguity and other discouraged language can sometimes be employed with the deliberate goal of provoking unexpected or unpredictable results from a model. This can produce some interesting outputs, as the complexity of many AI systems renders their decision-making processes opaque to the user.

Generative AI is designed to create -- that's its purpose. Simple yes-or-no questions are limiting and will likely yield short and uninteresting output.

Posing open questions, in contrast, gives room for much more flexibility in output. For example, a simple prompt such as "Was the American Civil War about states' rights?" will likely lead to a similarly simple, brief response. However, a more open-ended prompt, such as "Describe the social, economic and political factors that led to the outbreak of the American Civil War," is far more likely to provoke a comprehensive and detailed answer.

A generative AI tool can frame its output to meet a wide array of goals and expectations, from short, generalized summaries to long, detailed explorations. To make use of this versatility, well-crafted prompts often include context that helps the AI system tailor its output to the user's intended audience.

For example, if a user simply asks an LLM to explain the three laws of thermodynamics, it's impossible to predict the length and detail of the output. But adding context can help ensure the output is suitable for the target reader. A prompt such as "Explain the three laws of thermodynamics for third-grade students" will produce a dramatically different level of length and detail compared with "Explain the three laws of thermodynamics for Ph.D.-level physicists."

Although generative AI is intended to be creative, it's often wise to include guardrails on factors such as output length. Context elements in prompts might include requesting a simplified and concise versus lengthy and detailed response, for example.

Keep in mind, however, that generative AI tools generally can't adhere to precise word or character limits. This is because natural language processing models such as GPT-3 are trained to predict words based on language patterns, not count. Thus, LLMs can usually follow approximate guidance such as "Provide a two- or three-sentence response," but they struggle to precisely quantify characters or words.

Long and complex prompts sometimes include ambiguous or contradictory terms. For example, a prompt that includes both the words detailed and summary might give the model conflicting information about expected level of detail and length of output. Prompt engineers take care to review the prompt formation and ensure all terms are consistent.

The most effective prompts use positive language and avoid negative language. A good rule of thumb is "Do say 'do,' and don't say 'don't.'" The logic here is simple: AI models are trained to perform specific tasks, so asking an AI system not to do something is meaningless unless there is a compelling reason to include an exception to a parameter.

Just as humans rely on punctuation to help parse text, AI prompts can also benefit from the judicious use of commas, quotation marks and line breaks to help the system parse and operate on a complex prompt.

Consider the simple elementary school grammar example of "Let's eat Grandma" versus "Let's eat, Grandma." Prompt engineers are thoroughly familiar with the formation and formatting of the AI systems they use, which often includes specific recommendations for punctuation.

The 10 tips covered above are primarily associated with LLMs, such as ChatGPT. However, a growing assortment of generative AI image platforms is emerging that can employ additional prompt elements or parameters in requests.

When working specifically with image generators such as Midjourney and Stable Diffusion, keep the following seven tips in mind:

- Describe the image. Offer some details about the scene -- perhaps a cityscape, field or forest -- as well as specific information about the subject. When describing people as subjects, be explicit about any relevant physical features, such as race, age and gender.

- Describe the mood. Include descriptions of actions, expressions and environments -- for example, "An old woman stands in the rain and cries by a wooded graveside."

- Describe the aesthetic. Define the overall style desired for the resulting image, such as watercolor, sculpture, digital art or oil painting. You can even describe techniques or artistic styles, such as impressionist, or an example artist, such as Monet.

- Describe the framing. Define how the scene and subject should be framed: dramatic, wide-angle, close-up and so on.

- Describe the lighting. Describe how the scene should be lit using terms such as morning, daylight, evening, darkness, firelight and flashlight. All these factors can affect light and shadow.

- Describe the coloring. Denote how the scene should use color with descriptors such as saturated or muted.

- Describe the level of realism. AI art renderings can range from abstract to cartoonish to photorealistic. Be sure to denote the desired level of realism for the resulting image.

Prompt formation and prompt engineering can be more of an art than a science. Subtle differences in prompt format, structure and content can have profound effects on AI responses. Even nuances in how AI models are trained can result in different outputs.

Along with tips to improve prompts, there are several common prompt engineering mistakes to avoid:

- Don't be afraid to test and revise. Prompts are never one-and-done efforts. AI systems such as art generators can require enormous attention to detail. Be prepared to make adjustments and take multiple attempts to build the ideal prompt.

- Don't look for short answers. Generative AI is designed to be creative, so form prompts that make the best use of the AI system's capabilities. Avoid prompts that look for short or one-word answers; generative AI is far more useful when prompts are open-ended.

- Don't stick to the default temperature. Many generative AI tools incorporate a "temperature" setting that, in simple terms, controls the AI's creativity. Based on your specific query, try adjusting the temperature parameters: higher to be more random and diverse, or lower to be narrower and more focused.

- Don't use the same sequence in each prompt. Prompts can be complex queries with many different elements. The order in which instructions and information are assembled into a prompt can affect the output by changing how the AI parses and interprets the prompt. Try switching up the structure of your prompts to elicit different responses.

- Don't take the same approach for every AI system. Because different models have different purposes and areas of expertise, posing the same prompt to different AI tools can produce significantly different results. Tailor your prompts to the unique strengths of the system you're working with. In some cases, additional training, such as introducing new data or more focused feedback, might be needed to refine the AI's responses.

- Don't forget that AI can be wrong. The classic IT axiom "garbage in, garbage out" applies perfectly to AI outputs. Model responses can be wrong, incomplete or simply made-up, a phenomenon often termed hallucination. Always fact-check AI output for inaccurate, misleading, biased, or plausible yet incorrect information.

Creating a detailed guide for crafting prompts, especially for AI applications or creative endeavors, is indeed a valuable resource. Let’s break down how we can expand on our initial outline and transform it into a comprehensive guide that can be shared widely, such as on a blog or GitHub Pages.

Step 1: Define the Scope and Objective

Before diving into the details, clearly define the scope of your guide. Are you focusing on prompts for AI text generation, image creation, or another specific application? Once the scope is clear, outline the objective of your guide. What will the reader learn or be able to do after reading it?

Step 2: Identify Your Audience

Understand who your guide is for. Is it aimed at beginners, intermediate users, or experts? Knowing your audience will help you tailor the content’s depth and complexity. For instance, beginners may need more background information and definitions, while experts might appreciate advanced tips and tricks.

Step 3: Create a Detailed Outline

Expand your initial outline into a more detailed structure. Here’s an example of what this could look like:

- Introduction to Prompts

- Definition and importance

- Types of prompts (text, image, code, etc.)

- Basics of Crafting Prompts

- Understanding the AI’s capabilities and limitations

- The role of specificity and clarity

- Examples of simple prompts and their outcomes

- Advanced Techniques

- Using prompts to guide AI creativity

- Adjusting tone and style

- Combining prompts for complex tasks

- Examples of advanced prompts and their outcomes

- Practical Applications

- Case studies or scenarios where prompts have been effectively used

- How to iterate on prompts based on outcomes

- Resources and Tools

- Software or platforms for testing prompts

- Communities and forums for feedback and ideas

- Conclusion and Next Steps

- Summary of key points

- Encouragement to practice and experiment

- How to share feedback and results with the community

Step 4: Write Detailed Content for Each Section

For each section in your outline, write content that is informative, engaging, and easy to follow. Include examples, screenshots, or diagrams where applicable to illustrate your points more clearly. When discussing advanced techniques or specific scenarios, provide real-world examples to demonstrate how your advice can be applied.

Step 5: Publish and Share Your Guide

Once your guide is written, choose a platform for sharing. For a text-based guide, a blog platform like WordPress or Medium can be ideal. If your guide includes code snippets or interactive elements, consider GitHub Pages. Ensure your guide is well-formatted, with a clear structure and navigation.

Step 6: Engage with Your Audience

After publishing your guide, share the link in communities where potential readers might be. This could include forums, social media groups, or Reddit. Be open to feedback and questions, and engage with your audience to refine and improve your guide based on their experiences.

See you later :ok_hand

[](https://medium.com/@2twitme?source=post_page-----24d2ff4f6bca--------------------------------)[

Better prompts is all you need for better responses

The notion of a prompt is not new. This idea of offering a prompt permeates many disciplines: arts (to spur a writer or to speak spontaneously); science (to commence an experiment); criminal investigation (to offer or follow initial clues); computer programming (to take an initial step, given a specific context). All these are deliberative prompts as requests to elicit a desired respective response.

Interacting with large language models (LLMs) is no different. You engineer the best prompt to generate the best response. To get an LLM to generate a desired response has borne a novel discipline: prompt engineering–as “the process [and practice] of structuring text that can be interpreted and understood by a generative AI model.” [1]

In this article, we explore what is prompt engineering, what constitutes best techniques to engineer a well-structured prompt, and what prompt types steer an LLM to generate the desired response; contrastingly, what prompt types deter an LLM to generate a desired response.

Prompt engineering is not strictly an engineering discipline or precise science, supported by mathematical and scientific foundations; rather it’s a practice with a set of guidelines to craft precise, concise, creative wording of text to instruct an LLM to carry out a task. Another way to put it is that “prompt engineering is the art of communicating with a generative large language model.” [2]

To communicate with LLM with precise and task-specific instructions prompts, Bsharat et.al [3] present a comprehensive principled instructions and guidelines to improve the quality of prompts for LLMs. The study suggests that because LLMs exhibit an impressive ability and quality in understanding natural language and in carrying out tasks in various domains such as answering questions, mathematical reasoning, code generation, translation and summarization of tasks, etc., a principled approach to curate various prompt types and techniques immensely improves a generated response.

Take this simple example of a prompt before and after applying one of the principles of conciseness and precision in the prompt. The difference in the response suggests both readability and simplicity–and the desired target audience for the response.

Figure 1: An Illustration showing a general prompt on the left and a precise and specific prompte on the right.[4]

As you might infer from the above example that the more concise and precise your prompt, the better LLM comprehends the task at hand, and, hence, the better response it formulates.

Let’s examine some of the principles, techniques and types of prompt that offer better insight in how to carry out a task in various domains of natural language processing.

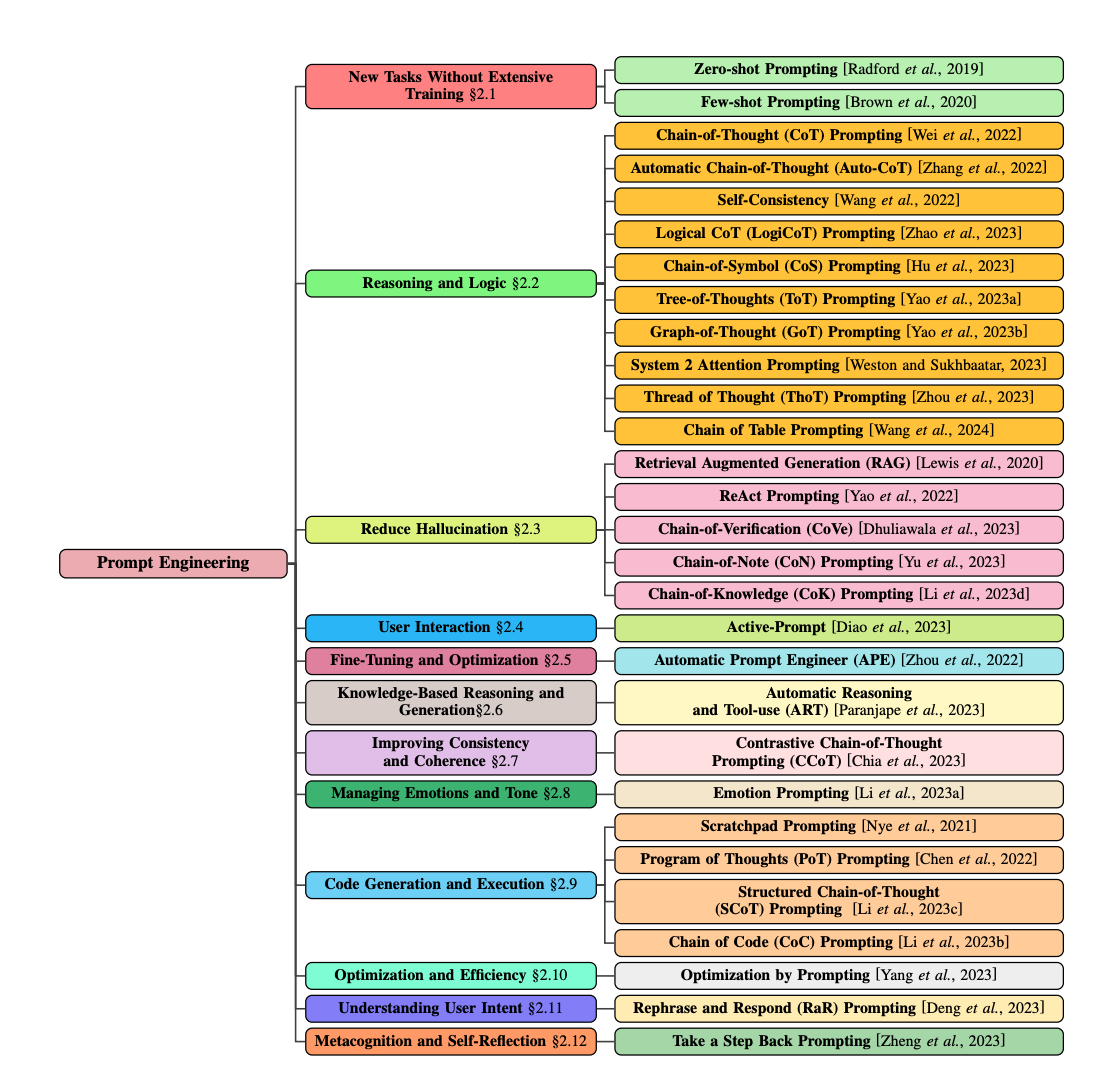

Bsharat et.al tabulate 26 ordered prompt principles, which can further be categorized into five distinct categories, as shown in Figure 2:

- Prompt Structure and Clarity: Integrate the intended audience in the prompt.

- Specificity and Information: Implement example-driven prompting (Use few-shot prompting)

- User Interaction and Engagement: Allow the model to ask precise details and requirements until it has enough information to provide the needed response

- Content and Language Style: Instruct the tone and style of response

- Complex Tasks and Coding Prompts: Break down complex tasks into a sequence of simpler steps as prompts.

Figure 2. : Prompt principle categories.

Equally, Elvis Saravia’s prompt engineering guide states that a prompt can contain many elements:

Instruction: describe a specific task you want a model to perform

Context: additional information or context that can guide’s a model’s response

Input Data: expressed as input or question for a model to respond to

Output and Style Format: the type or format of the output, for example, JSON, how many lines or paragraphs. Prompts are associated with roles, and roles inform an LLM who is interacting with it and what the interactive behavior ought to be. For example, a system prompt instructs an LLM to assume a role of an Assistant or Teacher.

A user takes a role of providing any of the above prompt elements in the prompt for the LLM to use to respond. Saravia, like the Bsharat et.al’s study, echoes that prompt engineering is an art of precise communication. That is, to obtain the best response, your prompt must be designed and crafted to be precise, simple, and specific. The more succinct and precise the better the response.

Also, OpenAI’s guide on prompt engineering imparts similar authoritative advice, with similar takeaways and demonstrable examples:

- Write clear instructions

- Provide reference text

- Split complex tasks into simpler subtasks

- Give the model time to “think”

Finally, Sahoo, Singh and Saha, et.al [5] offer a systematic survey of prompt engineering techniques, and offer a concise “overview of the evolution of prompting techniques, spanning from zero-shot prompting to the latest advancements.” They breakdown into distinct categories as shows in the figure below.

Figure 3. Taxonomy of prompt engineering techniques

But the CO-STAR prompt framework [6] goes a step further. It simplifies and crystalizes all these aforementioned guidelines and principles as a practical approach.

In her prompt engineering blog that won Singapore’s GPT-4 prompt engineering competition, Sheila Teo offers a practical strategy and worthy insights into how to obtain the best results from LLM by using the CO-STAR framework.

In short, Ms. Teo condenses and crystallizes Bsharat et.al (see Figure. 2) principles, along with other above principles, into six simple and digestible terms, as CO-STAR (Figure 4):

C: Context: Provide background and information on the task

O: Objective: Define the task that you want the LLM to perform

S: Style: Specify the writing style you want the LLM to use

T: Tone: Set the attitude and tone of the response

A: Audience: Identify who the response is for

R: Response: Provide the response format and style

Figure 4: The CO-STAR’s distinct and succinct five principles of a prompt guide

Here is a simple example of a CO-STAR prompt in a demonstrative notebook:

Figure 4 (a): An example prompt with CO-STAR framework

With this CO-STAR prompt, we get the following concise response from our LLM.

Figure 4(b): An example response with CO-STAR framework

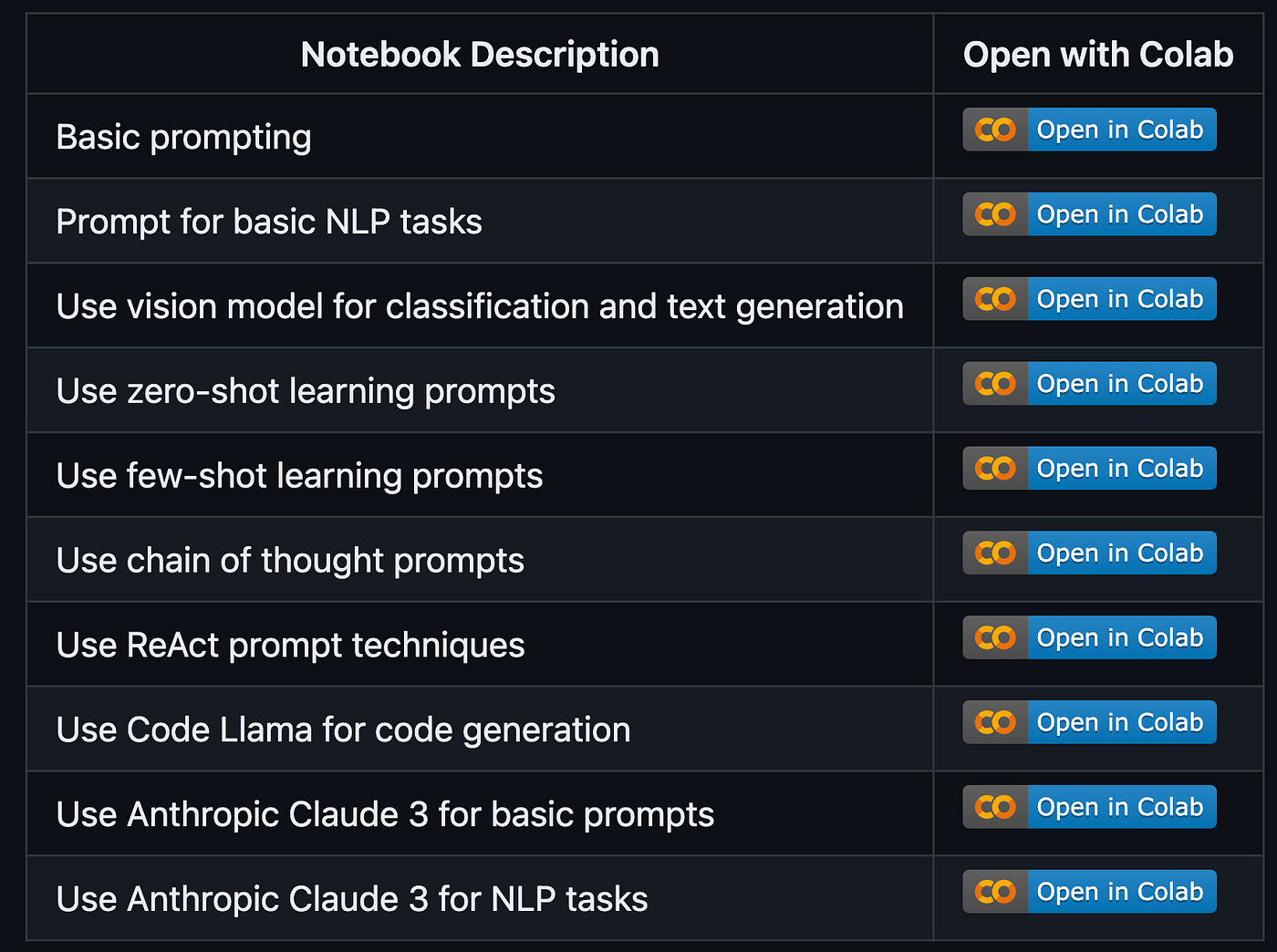

You can view extensive examples in these two Colab notebooks:

Besides CO-STAR, other frameworks specific to ChatGPT have emerged, yet the core of crafting effective prompts remains remains the same: clarity, specificity, context, objective, task, action etc. [7]

So far we have explored best practices, guiding principles, and techniques, from an array of sources, on how to craft a concise prompt to interact with an LLM — all to generate the desired and accurate response. Prompts are linked to type of tasks, meaning the kind of task you wish the LLM perform equates to a type of prompt–and how you will craft it.

Saravia [8] analyzes various task-related prompts and advises on crafting effective prompts to accomplish these tasks. Generally, these tasks include:

- Text summarization

- Zero and few shot learning

- Information extractions

- Question answering

- Text and image classification

- Conversation

- Reasoning

- Code generation

I won’t elaborate on the explanation for brevity. But do explore prompt types through illustrative examples in the Colab notebooks below for insights on crafting effective prompts and obtaining accurate responses.

Figure 5. Example notebooks that explore type of prompts associated with type of tasks.

Note: All notebook examples have been tested using OpenAI APIs on GPT-4-turbo, Llama 2 series models, Mixtral series, Code Llama 70B (running on Anyscale Endpoints). To execute these examples, you must have accounts on OpenAI and Ansyscale Endpoints. These examples are derived from a subset of GenAI Cookbook GitHub Repository.

In this article, we covered what is prompt engineering, provided an array of authoritative guiding principles and techniques to curate and craft effective prompts to obtain the best response from LLMs. At the same time, the OpenAI guide also advised what prompts not to use.

Incorporating above principles, we discussed the CO-STAR prompt framework and provided a couple of examples on how to use this prompting framework.

Finally, we linked prompt types to common tasks for LLMs and offered a set of illustrative notebook examples for each type of task.

All the above serve a helpful first step into your journey into crafting and curating effective prompts for LLMs and obtaining the best response.

Read the next sequence of blogs on GenAI Cookbook series on LLMs:

- LLM Beyond its Core Capabilities as AI Assistants or Agents

- Crafting Intelligent User Experiences: A Deep Dive into OpenAI Assistants API

[1] https://en.wikipedia.org/wiki/Prompt_engineering

[2] ChatGPT, 2023

[3] https://arxiv.org/pdf/2312.16171.pdf

[4] https://arxiv.org/pdf/2312.16171.pdf

[5] https://arxiv.org/pdf/2402.07927.pdf

[7] https://www.linkedin.com/pulse/9-frameworks-master-chatgpt-prompt-engineering-edi-hezri-hairi/

[8] https://www.promptingguide.ai/introduction/examples

[9] https://platform.openai.com/docs/guides/prompt-engineering

[10] https://platform.openai.com/examples

[11] https://github.com/dmatrix/genai-cookbook/blob/main/README.m

Excerpt from M. C. Escher, Metamorphosis III (1968)

December 2020

Contents

- The central role of retrieval practice

- A recipe for chicken stock

- Factual knowledge

- Procedural knowledge

- Conceptual knowledge

- Open lists

- Salience prompts

- Prompt-writing, in practice

This guide and its embedded spaced repetition system were made possible by a crowd-funded research grant from my Patreon community. If you find my work interesting, you can become a member to get ongoing behind-the-scenes updates and early access to new work.

As a child, I had a goofy recurring daydream: maybe if I type just the right sequence of keys, the computer would beep a few times in sly recognition, then a hidden world would suddenly unlock before my eyes. I’d find myself with new powers which I could use to transcend my humdrum life.

Such fantasies probably came from playing too many video games. But the feelings I have when using spaced repetition systems are strikingly similar. At their best, these systems feel like magic.This guide assumes basic familiarity with spaced repetition systems. For an introduction, see Michael Nielsen, Augmenting Long-term Memory (2018), which is also the source of the phrase “makes memory a choice.” Memory ceases to be a haphazard phenomenon, something you hope happens: spaced repetition systems make memory a choice. Used well, they can accelerate learning, facilitate creative work, and more. But like in my childhood daydreams, these wonders unfold only when you press just the right sequence of keys, producing just the right incantation. That is, when you manage to write good prompts—the questions and answers you review during practice sessions.

Spaced repetition systems work only as well as the prompts you give them. And especially when new to these systems, you’re likely to give them mostly bad prompts. It often won’t even be clear which prompts are bad and why, much less how to improve them. My early experiments with spaced repetition systems felt much like my childhood daydreams: prodding a dusty old artifact, hoping it’ll suddenly spring to life and reveal its magic.

Happily, prompt-writing does not require arcane secrets. It’s possible to understand somewhat systematically what makes a given prompt effective or ineffective. From that basis, you can understand how to write good prompts. Now, there are many ways to use spaced repetition systems, and so there are many ways to write good prompts. This guide aims to help you create understanding in the context of an informational resource like an article or talk. By that I mean writing prompts not only to durably internalize the overt knowledge presented by the author, but also to produce and reinforce understandings of your own, understandings which you can carry into your life and creative work.

For readers who are new to spaced repetition, this guide will help you overcome common problems that often lead people to abandon these systems. In later sections, we’ll cover some unusual prompt-writing perspectives which may help more experienced readers deepen their practice.If you don’t have a spaced repetition system, I’d suggest downloading Anki and reading Michael’s aforementioned essay. Our discussion will focus on high-level principles, so you can follow along using any spaced repetition system you like. Let’s get started.

No matter the application, it’s helpful to remember that when you write a prompt in a spaced repetition system, you are giving your future self a recurring task. Prompt design is task design.

If a prompt “works,” it’s because performing that task changes you in some useful way. It’s worth trying to understand the mechanisms behind those changes, so you can design tasks which produce the kind of change you want.

The most common mechanism of change for spaced repetition learning tasks is called retrieval practice. In brief: when you attempt to recall some knowledge from memory, the act of retrieval tends to reinforce those memories.For more background, see Roediger and Karpicke, The Power of Testing Memory (2006). Gwern Branwen’s article on spaced repetition is a good popular overview. You’ll forget that knowledge more slowly. With a few retrievals strategically spaced over time, you can effectively halt forgetting. The physical mechanisms are not yet understood, but hundreds of cognitive scientists have explored this effect experimentally, reproducing the central findings across various subjects, knowledge types (factual, conceptual, procedural, motor), and testing modalities (multiple choice, short answer, oral examination).

The value of fluent recall isn’t just in memorizing facts. Many of these experiments tested students not with parroted memory questions but by asking them to make inferences, draw concept mapsSee e.g. Karpicke and Blunt, Retrieval Practice Produces More Learning than Elaborative Studying with Concept Mapping (2011); and Blunt and Karpicke, Learning With Retrieval-Based Concept Mapping (2014)., or answer open-ended questions. In these studies, improved recall translated into improved general understanding and problem-solving ability.

Retrieval is the key element which distinguishes this effective mode of practice from typical study habits. Simply reminding yourself of material (for instance by re-reading it) yields much weaker memory and problem-solving performance. The learning produced by retrieval is called the “testing effect” because it occurs when you explicitly test yourself, reaching within to recall some knowledge from the tangle of your mind. Such tests look like typical school exams, but in some sense they’re the opposite: retrieval practice is about testing your knowledge to produce learning, rather than to assess learning.

Spaced repetition systems are designed to facilitate this effect. If you want prompts to reinforce your understanding of some topic, you must learn to write prompts which collectively invoke retrieval practice of all the key details.

We’ll have to step outside the scientific literature to understand how to write good prompts: existing evidence stops well short of exact guidance. In lieu of that, I’ve distilled the advice in this guide from my personal experience writing thousands of prompts, grounded where possible in experimental evidence.

For more background on the mnemonic medium, see Matuschak and Nielsen, How can we develop transformative tools for thought? (2019).This guide is an example of what Michael Nielsen and I have called a mnemonic medium. It exemplifies its own advice through spaced repetition prompts interleaved directly into the text. If you’re reading this, you’ve probably already used a spaced repetition system. This guide’s system, Orbit, works similarly.If you have an existing spaced repetition practice, you may find it annoying to review prompts in two places. As Orbit matures, we’ll release import / export tools to solve this problem. But it has a deeper aspiration: by integrating expert-authored prompts into the reading experience, authors can write texts which readers can deeply internalize with relatively little effort. If you’re an author, then, this guide may help you learn how to write good prompts both for your personal practice and also for publications you write using Orbit. You can of course read this guide without answering the embedded prompts, but I hope you’ll give it a try.

These embedded prompts are part of an ongoing research project. The first experiment in the mnemonic medium was Quantum Country, a primer on quantum computation. Quantum Country is concrete and technical: definitions, notation, laws. By contrast, this guide mostly presents heuristics, mental models, and advice. The embedded prompts therefore play quite different roles in these two contexts. You may not need to memorize precise definitions here, but I believe the prompts will help you internalize the guide’s ideas and put them into action. On the other hand, this guide’s material may be too contingent and too personal to benefit from author-provided prompts. It’s an experiment, and I invite you to tell me about your experiences.

One important limitation is worth noting. This guide describes how to write prompts which produce and reinforce understandings of your own, going beyond what the author explicitly provides. Orbit doesn’t yet offer readers the ability to remix author-provided prompts or add their own. Future work will expand the system in that direction.

Writing good prompts feels surprisingly similar to translating written text. When translating prose into another language, you’re asking: which words, when read, would light a similar set of bulbs in readers’ minds? It’s not a rote operation. If the passage involves allusion, metaphor, or humor, you won’t translate literally. You’ll try to find words which recreate the experience of reading the original for a member of a foreign culture.

When writing spaced repetition prompts meant to invoke retrieval practice, you’re doing something similar to language translation. You’re asking: which tasks, when performed in aggregate, require lighting the bulbs which are activated when you have that idea “fully loaded” into your mind?

The retrieval practice mechanism implies some core properties of effective prompts. We’ll review them briefly here, and the rest of this guide will illustrate them through many examples.

These properties aren’t laws of nature. They’re more like rules you might learn in an English class. Good writers can (and should!) strategically break the rules of grammar to produce interesting effects. But you need to have enough experience to understand why doing something different makes sense in a given context.

Retrieval practice prompts should be focused. A question or answer involving too much detail will dull your concentration and stimulate incomplete retrievals, leaving some bulbs unlit. Unfocused questions also make it harder to check whether you remembered all parts of the answer and to note places where you differed. It’s usually best to focus on one detail at a time.

Retrieval practice prompts should be precise about what they’re asking for. Vague questions will elicit vague answers, which won’t reliably light the bulbs you’re targeting.

Retrieval practice prompts should produce consistent answers, lighting the same bulbs each time you perform the task. Otherwise, you may run afoul of an interference phenomenon called “retrieval-induced forgetting”This effect has been produced in many experiments but is not yet well understood. For an overview, see Murayama et al, Forgetting as a consequence of retrieval: a meta-analytic review of retrieval-induced forgetting (2014).: what you remember during practice is reinforced, but other related knowledge which you didn’t recall is actually inhibited. Now, there is a useful type of prompt which involves generating new answers with each repetition, but such prompts leverage a different theory of change. We’ll discuss them briefly later in this guide.

SuperMemo’s algorithms (also used by most other major systems) are tuned for 90% accuracy. Each review would likely have a larger impact on your memory if you targeted much lower accuracy numbers—see e.g. Carpenter et al, Using Spacing to Enhance Diverse Forms of Learning (2012). Higher accuracy targets trade efficiency for reliability.Retrieval practice prompts should be tractable. To avoid interference-driven churn and recurring annoyance in your review sessions, you should strive to write prompts which you can almost always answer correctly. This often means breaking the task down, or adding cues.

Retrieval practice prompts should be effortful. It’s important that the prompt actually involves retrieving the answer from memory. You shouldn’t be able to trivially infer the answer. Cues are helpful, as we’ll discuss later—just don’t “give the answer away.” In fact, effort appears to be an important factor in the effects of retrieval practice.For more on the notion that difficult retrievals have a greater impact than easier retrievals, see the discussion in Bjork and Bjork, A New Theory of Disuse and an Old Theory of Stimulus Fluctuation (1992). Pyc and Rawson, Testing the retrieval effort hypothesis: Does greater difficulty correctly recalling information lead to higher levels of memory? (2009) offers some focused experimental tests of this theory, which they coin the “retrieval effort hypothesis.” That’s one motivation for spacing reviews out over time: if it’s too easy to recall the answer, retrieval practice has little effect.

Achieving these properties is mostly about writing tightly-scoped questions. When a prompt’s scope is too broad, you’ll usually have problems: retrieval will often lack a focused target; you may produce imprecise or inconsistent answers; you may find the prompt intractable. But writing tightly-scoped questions is surprisingly difficult. You’ll need to break knowledge down into its discrete components so that you can build those pieces back up as prompts for retrieval practice. This decomposition also makes review more efficient. The schedule will rapidly remove easy material from regular practice while ensuring you frequently review the components you find most difficult.

Now imagine you’ve just read a long passage on a new topic. What, specifically, would have to be true for you to say you “know” it? To continue the translation metaphor, you must learn to “read” the language of knowledge—recognizing nouns and verbs, sentence structures, narrative arcs—so that you can write their analogues in the translated language. Some details are essential; some are trivial. And you can’t stop with what’s on the page: a good translator will notice allusions and draw connections of their own.

So we must learn two skills to write effective retrieval practice prompts: how to characterize exactly what knowledge we’ll reinforce, and how to ask questions which reinforce that knowledge.

Our discussion so far has been awfully abstract. We’ll continue by analyzing a concrete example: a recipe for chicken stock.

A recipe may seem like a fairly trivial target for prompt-writing, and in some sense that’s true. It’s a conveniently short and self-contained example. But in fact, my spaced repetition library contains hundreds of prompts capturing foundational recipes, techniques, and observations from the kitchen. This is itself an essential prompt-writing skill to build—noticing unusual but meaningful applications for prompts—so I’ll briefly describe my experience.

I’d cooked fairly seriously for about a decade before I began to use spaced repetition, and of course I naturally internalized many core techniques and ratios. Yet whenever I was making anything complex, I’d constantly pause to consult references, which made it difficult to move with creativity and ease. I rarely felt “flow” while cooking. My experiences felt surprisingly similar to my first few years learning to program, in which I encountered exactly the same problems. With years of full-time attention, I automatically internalized all the core knowledge I needed day-to-day as a programmer. I’m sure that I’d eventually do the same in the kitchen, but since cooking has only my part-time attention, the process might take a few more decades.

I started writing prompts about core cooking knowledge three years ago, and it’s qualitatively changed my life in the kitchen. These prompts have accelerated my development of a deeply satisfying ability: to show up at the market, choose what looks great in that moment, and improvise a complex meal with confidence. If the sunchokes look good, I know they’d pair beautifully with the mustard greens I see nearby, and I know what else I need to buy to prepare those vegetables as I imagine. When I get home, I already know how to execute the meal; I can move easily about the kitchen, not hesitating to look something up every few minutes. Despite what this guide’s lengthy discussion might suggest, these prompts don’t take me much time to write. Every week or two I’ll trip on something interesting and spend a few minutes writing prompts about it. That’s been enough to produce a huge impact.

At a decent restaurant, even simple foods often taste much better than most home cooks’ renditions. Sautéed vegetables seem richer; grains seem richer; sauces seem more luscious. One key reason for this is stock, a flavorful liquid building block. Restaurants often use stocks in situations where home cooks might use water: adding a bit of steam to sautéed vegetables, thinning a purée, simmering whole grains, etc. Stocks are also the base of many sauces, soups, and braises.

Stock is made by simmering flavorful ingredients in water. By varying the ingredients, we can produce different types of stock: chicken stock, vegetable stock, mushroom stock, pork stock, and so on. But unlike a typical broth, stock isn’t meant to have a distinctive flavor that can stand on its own. Instead, its job is to provide a versatile foundation for other preparations.

One of the most useful stocks is chicken stock. When used to prepare vegetables, chicken stock doesn’t make them taste like chicken: it makes them taste more savory and complete. It also adds a luxurious texture because it’s rich in gelatin from the chicken bones. Chicken stock takes only a few minutes of active time to make, and in a typical kitchen, it’s basically free: the primary ingredient is chicken bones, which you can naturally accumulate in your freezer if you cook chicken regularly.

- 2lbs (~1kg) chicken bones

- 2qt (~2L) water

- 1 onion, roughly chopped

- 2 carrots, roughly chopped

- 2 ribs of celery, roughly chopped

- 4 cloves garlic, smashed

- half a bunch of fresh parsley

- Combine all the ingredients in a large pot.

- Bring to a simmer on low heat (this will take about an hour). We use low heat to produce a bright, clean flavor: at higher temperatures, the stock will both taste and look duller.

- Lower heat to maintain a bare simmer for an hour and a half.

- Strain, wait until cool, then transfer to storage containers.

Chicken stock will keep for a week in the fridge or indefinitely in the freezer. There will be a cap of fat on the stock; skim that off before using the stock, and deploy the fat in place of oil or butter in any savory cooking situation.

This recipe can be scaled up or down to the quantity of chicken bones you have. The basic ratio is a pound of bones to a quart of water. The vegetables are flexible in choice and ratio.

For a more French flavor profile, replace the celery with leeks and add any/all of bay leaves, black peppercorns, and thyme. For a deeper flavor, roast the bones and vegetables first to make what’s called a “brown chicken stock” (the recipe above is for a “white chicken stock,” which is more delicate but also more versatile).

A few ideas for what you might do with your chicken stock:

- Cook barley, farro, couscous and other grains in it.

- Purée with roasted vegetables to make soup.

- Wilt hearty greens like kale, chard, or collards in oil, then add a bit of stock and cover to steam through.

- After roasting or pan-searing meat, deglaze the pan with stock to make a quick sauce.

To organize our efforts, it’s helpful to ask: what would it mean to “know” this material? I’d suggest that someone who “knows” this material should:

- know how to make and store chicken stock

- know what stock is and (at least shallowly) understand why and when it matters

- know the role and significance of chicken stock, specifically

- know some ways one might use chicken stock, both generally and with some specific examples

- know of a few common variations and when they might be used

Some of this knowledge is factual; some of it is procedural; some of it is conceptual. We’ll see strategies for dealing with each of these types of knowledge.

But understanding is inherently personal. Really “knowing” something often involves going beyond what’s on the page to connect it to your life, other ideas you’re exploring, and other activities you find meaningful. We’ll also look at how to write questions of that kind.

If you’re a vegetarian, I hope you can look past the discussion of bones: choosing this example involved many trade-offs.In this guide, we’ll imagine that you’re an interested home cook who’s never made stock before. Naturally, if you’re an experienced cook, you’d probably need only a few of these prompts. And of course, if you don’t cook at all, you’d write none of these prompts! Try to read the examples as demonstrations of how you might internalize a resource deeply without much prior fluency.

To demonstrate a wide array of principles, we’ll treat this material quite exhaustively. But it’s worth noting that in practice, you usually won’t study resources as systematically as this. You’ll jump around, focusing only on the parts which seem most valuable. You may return to a resource on a few occasions, writing more prompts as you understand what’s most relevant. That’s good! Exhaustiveness may seem righteous in a shallow sense, but an obsession with completionism will drain your gumption and waste attention which could be better spent elsewhere. We’ll return to this issue in greater depth later.

This guide aspires to demonstrate a wide variety of techniques, so I’ve deliberately analyzed the chicken stock recipe quite exhaustively. But in practice, if you were examining a recipe for the first time, I certainly wouldn’t recommend writing dozens of prompts at once like we’ve done here. If you try to analyze everything you read so comprehensively, you’re likely to waste time and burn yourself out.

Those issues aside, it’s hard to write good prompts on your first exposure to new ideas. You’re still developing a sense of which details are important and which are not—both objectively, and to you personally. You likely don’t know which elements will be particularly challenging to remember (and hence worth extra reinforcement). You may not understand the ideas well enough to write prompts which access their “essence”, or which capture subtle implications. And you may need to live with new ideas for a while before you can write prompts which connect them vibrantly with whatever really matters to you.

All this suggests an iterative approach.

Say you’re reading an article that seems interesting. Try setting yourself an accessible goal: on your first pass, aim to write a small number of prompts (say, 5-10) about whatever seems most important, meaningful, or useful.

I find that such goals change the way I read even casual texts. When first adopting spaced repetition practice, I felt like I “should” write prompts about everything. This made reading a chore. By contrast, it feels quite freeing to aim for just a few key prompts at a time.As Michael Nielsen notes, similar lightweight prompt-writing goals can enliven seminars, professional conversations, events, and so on. I read a notch more actively, noticing a tickle in the back of my mind: “Ooh, that’s a juicy bit! Let’s get that one!”

If the material is fairly simple, you may be able to write these prompts while you read. But for texts which are challenging or on an unfamiliar topic, it may be too disruptive to switch back and forth. In such cases it’s better to highlight or make note of the most important details. Then you can write prompts about your understanding of those details in a batch at the end or at a suitable stopping point. For these tougher topics, I find it’s best to focus initially on prompts about basic details you can build on: raw facts, terms, notation, etc.

Books are more complicated: there are many kinds of books and many ways to read them. This is true of articles, too, of course, but books amplify the variance. For one thing, you’re less likely to read a book linearly than an article. And, of course, they’re longer, so a handful of prompts will rarely suffice. The best prompt-writing approach will depend on how and why you’re reading the book, but in general, if I’m trying to internalize a non-fiction book, I’ll often begin by aiming to write a few key prompts on my first pass through a chapter or major section.

For many resources, one pass of prompt-writing is all that’s worth doing, at least initially. But if you have a rich text which you’re trying to internalize thoroughly, it’s often valuable to make multiple passes, even in the first reading session. That doesn’t necessarily mean doubling down on effort: just write another handful of apparently-useful prompts each time. For a vivid account of this process in mathematics, see Michael Nielsen, Using spaced repetition systems to see through a piece of mathematics (2019).With each iteration, you’ll likely find yourself able to understand (and write prompts for) increasingly complex details. You may notice your attention drawn to patterns, connections, and bigger-picture insights. Even better: you may begin to focus on your own observations and questions, rather than those of the author. But it’s also important to notice if you feel yourself becoming restless. There’s no deep virtue in writing a prompt about every detail. In fact, it’s much more important to remain responsive to your sense of curiosity and interest.

Piotr Wozniak, a pioneer of spaced repetition, has been developing a system he calls incremental reading which attempts to actively support this kind of iterative, incremental prompt writing.If you notice a feeling of duty or completionism, remind yourself that you can always write more prompts later. In fact, they’ll probably be better if you do: motivated by something meaningful, like a new connection or a gap in your understanding.

Let’s consider our chicken stock recipe again for a moment. If I were an aspiring cook who had never heard of stock before, I’d probably write a few prompts about what stock is and why it matters: those details seem useful beyond the scope of this single recipe, and they connect to happy dining experiences I’ve had. That’s probably all I’d do until I actually made a batch of stock for myself. At that point, I’d know which steps were obvious and which made me consult the recipe. If I found I wanted to make stock again, I’d write another batch of prompts to recall details like ingredient ratios and times. I’d try to notice places where I found myself straining, vaguely aware that I’d “read something about this” but unsure of the details. As I used my first batch of stock in subsequent dishes, I might then write prompts about those experiences. And so on.

While you’re drafting prose, a spell checker and grammar checker can help you avoid some simple classes of error. Such tools don’t yet exist for prompt-writing, so it’s helpful to collect simple tests which can serve a similar function.

False positives: How might you produce the correct answer without really knowing the information you intend to know?

Discourage pattern matching. If you write a long question with unusual words or cues, you might eventually memorize the shape of that question and learn its corresponding answer—not because you’re really thinking about the knowledge involved, but through a mechanical pattern association. Cloze deletions seem particularly susceptible to this problem, especially when created by copying and editing passages from texts. This is best avoided by keeping questions short and simple.

Avoid binary prompts. Questions which ask for a yes/no or this/that answer tend to require little effort and produce shallow understanding. I find I can often answer such questions without really understanding what they mean. Binary prompts are best rephrased as more open-ended prompts. For instance, the first of these can be improved by transforming it into the second:

Q. Does chicken stock typically make vegetable dishes taste like chicken?

A. No.

Q. How does chicken stock affects the flavor of vegetable dishes? (according to Andy’s recipe)

A. It makes them taste more “complete.”

Improving a binary prompt often involves connecting it to something else, like an example or an implication. The lenses in the conceptual knowledge section are useful for this.

False negatives: How might you know the information the prompt intends to capture but fail to produce the correct answer? Such failures are often caused by not including enough context.

It’s easy to accidentally write a question which has correct answers besides the one you intend. You must include enough context that reasonable alternative answers are clearly wrong, while not including so much context that you encourage pattern matching or dilute the question’s focus.

For example, if you’ve just read a recipe for making an omelette, it might feel natural to ask: “What’s the first step to cook an omelette?” The answer might seem obvious relative to the recipe you just read: step one is clearly “heat butter in pan”! But six months from now, when you come back to this question, there are many reasonable answers: whisk eggs; heat butter in a pan; mince mushrooms for filling; etc.

One solution is to give the question extremely precise context: “What’s the first step in the Bon Appetit Jun ’18 omelette recipe?” But this framing suggests the knowledge is much more provincial than it really is. When possible, general knowledge should be expressed generally, so long as you can avoid ambiguity. This may mean finding another angle on the question; for instance: “When making an omelette, how must the pan be prepared before you add the eggs?”

False negatives often feel like the worst nonsense from school exams: “Oh, yes, that answer is correct—but it’s not the one we were looking for. Try again?” Soren Bjornstad points out that a prompt which fails to exclude alternative correct answers requires that you also memorize “what the prompt is asking.”

It’s often tough to diagnose issues with prompts while you’re writing them. Problems may become apparent only upon review, and sometimes only once a prompt’s repetition interval has grown to many months. Prompt-writing involves long feedback loops. So just as it’s important to write prompts incrementally over time, it’s also important to revise prompts incrementally over time, as you notice problems and opportunities.

In your review sessions, be alert to feeling an internal “sigh” at a prompt. Often you’ll think: “oh, jeez, this prompt—I can never remember the answer.” Or “whenever this prompt comes up, I know the answer, but I don’t really understand what it means.” Listen for those reactions and use them to drive your revision. To avoid disrupting your review session, most spaced repetition systems allow you to flag a prompt as needing revision during a review. Then once your session is finished, you can view a list of flagged prompts and improve them.

The analogy to sentences is drawn from Matuschak and Nielsen, How can we develop transformative tools for thought? (2019).Learning to write good prompts is like learning to write good sentences. Each of these skills sometimes seems trivial, but each can be developed to a virtuosic level. And whether you’re writing prompts or writing sentences, revision is a holistic endeavor. When editing prose, you can sometimes focus your attention on a single sentence. But to fix an awkward line, you may find yourself merging several sentences together, modifying your narrative devices, or changing broad textual structures. I find a similar observation applies to editing spaced repetition prompts. A prompt can sometimes be improved in isolation, but as my understanding shifts I’ll often want to revise holistically—merge a few prompts here, reframe others there, split these into finer details. If you’ve attempted the exercises, you may notice that it’s easier to revise across question boundaries when composing multiple questions in the same text field. As an experiment, I’ve written almost all new prompts in 2020 as simple “Q. / A.” lines (like the examples in this guide) embedded in plaintext notes, using an old-fashioned text editor instead of a dedicated interface. I find I prefer this approach in most situations. In the future, I may release tools which allow others to write prompts in this way.Unfortunately, most spaced repetition interfaces treat each prompt as a sovereign unit, which makes this kind of high-level revision difficult. It’s as if you’re being asked to write a paper by submitting sentence one, then sentence two, and so on, revising only by submitting a request to edit a specific sentence number. Future systems may improve upon this limitation, but in the meantime, I’ve found I can revise prompts more effectively by simply keeping a holistic aspiration in mind.

In this guide, I’ve analyzed an example quite exhaustively to illustrate a wide array of principles, and I’ve advised you to write more prompts than might feel natural. So I’d like to close by offering a contrary admonition.

I believe the most important thing to “optimize” in spaced repetition practice is the emotional connection to your review sessions and their contents. It’s worth learning how to create prompts which effectively represent many different kinds of understanding, but a prompt isn’t worth reviewing just because it satisfies all the properties I’ve described here. If you find yourself reviewing something you don’t care about anymore, you should act. Sometimes upon reflection you’ll remember why you cared about the idea in the first place, and you can revise the prompt to cue that motivation. But most of the time the correct way to revise such prompts is to delete them.

Another way to approach this advice is to think about its reverse: what material should you write prompts about? When are these systems worth using? Many people feel paralyzed when getting started with spaced repetition, intrigued but unsure where it applies in their life. Others get started by trying to memorize trivia they feel they “should” know, like the names of all the U.S. presidents. Boredom and abandonment typically ensue. The best way to begin is to use these systems to help you do something that really matters to you—for example, as a lever to more deeply understand ideas connected to your core creative work. With time and experience, you’ll internalize the benefits and costs of spaced repetition, which may let you identify other useful applications (like I did with cooking). If you don’t see a way to use spaced repetition systems to help you do something that matters to you, then you probably shouldn’t bother using these systems at all.

These resources have been especially useful to me as I’ve developed an understanding of how to write good prompts:

- Piotr Wozniak’s Effective learning: Twenty rules of formulating knowledge and the more detailed Knowledge structuring and representation in learning based on active recall tackle the same topic as this guide from a different perspective.

- Michael Nielsen’s Augmenting Long-term Memory: a thorough review of how spaced repetition works and why you should care; more details on how to practically integrate prompt-writing into your reading practices, particularly when reading academic literature; notes on creative applications; and much more.

- Michael Nielsen’s Using spaced repetition systems to see through a piece of mathematics demonstrates how to iteratively deepen one’s understanding of a piece of mathematics, using spaced repetition prompts as a lever.

For more perspectives on this and related topics, see:

- Soren Bjornstad’s series on memory systems helpfully covers many practical topics in maintaining a prompt library, including some advice on prompt-writing.

- Nicky Case’s How To Remember Anything Forever-ish introduces spaced repetition and covers some prompt-writing techniques through delightful illustrations.

- How can we develop transformative tools for thought? (from Michael Nielsen and me) discusses the challenges of prompt-writing with particular focus on the “mnemonic medium,” which involves embedding prompts in narrative prose.

My thanks to Peter Hartree, Michael Nielsen, Ben Reinhardt, and Can Sar for helpful feedback on this guide; to the many attendees of the prompt-writing workshops I held while developing this guide; and to Gwern Branwen and Taylor Rogalski for helpful discussions on prompt-writing which informed this work. I’m particularly grateful to Michael Nielsen for years of conversations and collaborations around memory systems, which have shaped all aspects of how I think about the subject.