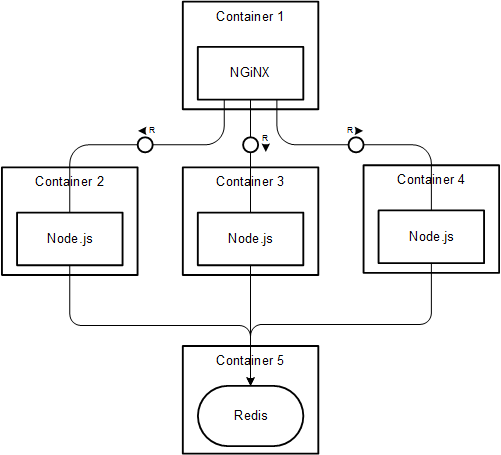

For this example, I have a very simple Node.js application that increments a counter stored in Redis. I want to run Redis and the node application independently so that I can scale the node application depending on the load. To start off, I have 3 instances of the node server running the application, with an Nginx server in front of them to load balance requests across the node instances.

Let’s now talk in terms of containers, specifically Docker containers. Simple; 1 container for each service/process!

- 1 Redis container

- 3 Node containers

- 1 Nginx container

So, the overall picture looks something like this:

The index.js:

    var express = require('express'),
        http = require('http'),
        redis = require('redis');

    var app = express();

    // Log the Redis address that Docker exposes through the link environment variables
    console.log(process.env.REDIS_PORT_6379_TCP_ADDR + ':' + process.env.REDIS_PORT_6379_TCP_PORT);

    // APPROACH 1: Using environment variables created by Docker
    // var client = redis.createClient(
    //     process.env.REDIS_PORT_6379_TCP_PORT,
    //     process.env.REDIS_PORT_6379_TCP_ADDR
    // );

    // APPROACH 2: Using host entries created by Docker in /etc/hosts (RECOMMENDED)
    var client = redis.createClient('6379', 'redis');

    app.get('/', function(req, res, next) {
        // Atomically increment the counter in Redis and render the new value
        client.incr('counter', function(err, counter) {
            if(err) return next(err);
            res.send(`
                <head>
                    <link href="https://fonts.googleapis.com/css?family=Roboto:100" rel="stylesheet" type="text/css">
                </head>
                <style>
                    body {
                        font-family: 'Roboto', sans-serif;
                        background-color: rgb(49, 49, 49);
                        color: rgb(255, 136, 0);
                        text-align: center;
                        display: flex;
                        align-items: center;
                        justify-content: center;
                        font-size: 60px;
                        flex-direction: column;
                    }
                    footer {
                        margin-top: 20px;
                        font-size: 28px;
                        color: #848484;
                    }
                    a {
                        color: white;
                        text-decoration: none;
                        cursor: pointer;
                    }
                    a:hover {
                        opacity: 0.8;
                    }
                    span {
                        margin: 0 5px;
                        border-radius: 4px;
                        color: white;
                    }
                    span::before {
                        content: " ";
                        position: absolute;
                        background-color: black;
                        width: 40px;
                        margin-left: -4px;
                        height: 36px;
                        z-index: -1;
                        border-radius: 4px;
                    }
                    span::after {
                        content: " ";
                        position: absolute;
                        margin-top: 37px;
                        background-color: black;
                        width: 40px;
                        height: 36px;
                        z-index: -1;
                        margin-left: -37px;
                        border-radius: 4px;
                    }
                </style>
                <body>
                    <div>This page has been viewed <script>document.write(String(${counter}).split('').map(function(c) {return '<span>' + c + '</span>'}).join(''))</script> times</div>
                    <footer>© 2016, HyperHQ Inc. All rights reserved. For more information, visit <a href="https://hyper.sh">hyper.sh</a></footer>
                </body>
            `);
        });
    });

    http.createServer(app).listen(process.env.PORT || 8080, function() {
        console.log('Listening on port ' + (process.env.PORT || 8080));
    });
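
The two approaches in index.js can also be combined. The following is a minimal sketch, not part of the original application: `resolveRedisAddress` is a hypothetical helper that prefers the link environment variables when they are set and otherwise falls back to the `redis` host alias and the default port 6379.

```javascript
// Hypothetical helper (an assumption, not in the original index.js):
// prefer the link environment variables, fall back to the 'redis' alias.
function resolveRedisAddress(env) {
  var host = env.REDIS_PORT_6379_TCP_ADDR || 'redis';
  // parseInt(undefined) is NaN, which is falsy, so the default port is used.
  var port = parseInt(env.REDIS_PORT_6379_TCP_PORT, 10) || 6379;
  return { host: host, port: port };
}

// With no link variables set, the alias and default port are returned.
console.log(resolveRedisAddress({}));

// With link variables set, their values take precedence.
console.log(resolveRedisAddress({
  REDIS_PORT_6379_TCP_ADDR: '172.17.0.2',
  REDIS_PORT_6379_TCP_PORT: '6379'
}));
```

In the application, the result could be passed on as `redis.createClient(addr.port, addr.host)`, matching the argument order already used in index.js.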

The Dockerfile to build the node image:

    # Set the base image to Ubuntu
    FROM ubuntu

    # File Author / Maintainer
    MAINTAINER Lei Xue

    # Install Node.js and other dependencies
    # (build steps run as root by default, so sudo is not required)
    RUN apt-get update && \
        apt-get -y install curl && \
        curl -sL https://deb.nodesource.com/setup_6.x | bash - && \
        apt-get -y install python build-essential nodejs

    # Provides cached layer for node_modules
    ADD package.json /tmp/package.json
    RUN cd /tmp && npm install
    RUN mkdir -p /src && cp -a /tmp/node_modules /src/

    # Define working directory
    WORKDIR /src
    ADD . /src

    # Expose port
    EXPOSE 8080

    # Run the app with node
    CMD ["node", "/src/index.js"]

The Nginx configuration:

    worker_processes 4;

    events { worker_connections 1024; }

    http {
        upstream node-app {
            least_conn;
            server node1:8080 weight=10 max_fails=3 fail_timeout=30s;
            server node2:8080 weight=10 max_fails=3 fail_timeout=30s;
            server node3:8080 weight=10 max_fails=3 fail_timeout=30s;
        }

        server {
            listen 80;

            location / {
                proxy_pass http://node-app;
                proxy_http_version 1.1;
                proxy_set_header Upgrade $http_upgrade;
                proxy_set_header Connection 'upgrade';
                proxy_set_header Host $host;
                proxy_cache_bypass $http_upgrade;
            }
        }
    }
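
To give a feel for what `least_conn` does with the three upstream servers, here is a simplified model in JavaScript. It is only a sketch of the idea (pick the server with the fewest active connections relative to its weight), not how Nginx implements it. The function name `pickLeastConnected` and the `active` field are assumptions made for illustration, and ties here simply go to the first server in the list.

```javascript
// Simplified model of least-connections selection (illustrative only).
function pickLeastConnected(upstreams) {
  return upstreams.reduce(function (best, candidate) {
    // Compare active connections relative to weight; ties keep the earlier server.
    return candidate.active / candidate.weight < best.active / best.weight
      ? candidate
      : best;
  });
}

var upstreams = [
  { name: 'node1:8080', weight: 10, active: 0 },
  { name: 'node2:8080', weight: 10, active: 0 },
  { name: 'node3:8080', weight: 10, active: 0 }
];

// Simulate four requests whose connections all stay open.
var order = [];
for (var i = 0; i < 4; i++) {
  var chosen = pickLeastConnected(upstreams);
  chosen.active += 1;
  order.push(chosen.name);
}

console.log(order.join(', '));
```

With equal weights and connections that never close, the model hands requests to node1, node2, node3 and then node1 again, which is why the load ends up spread evenly across the instances.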

Compose is a tool for defining and running complex applications with Hyper_. Building images and then running and linking containers with individual commands gets tedious, especially when there are many of them. Hyper_ Compose lets you define a multi-container application in a single file and spin it up with a single command.

I’ve defined the Hyper_ Compose YAML file as follows:

    version: "2"
    services:
      nginx:
        image: carmark/nginx
        links:
          - node1:node1
          - node2:node2
          - node3:node3
      node1:
        image: carmark/node
        links:
          - redis
      node2:
        image: carmark/node
        links:
          - redis
      node3:
        image: carmark/node
        links:
          - redis
      redis:
        image: redis
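
The three node services in this file differ only in their names, so the same structure can be produced programmatically. The sketch below is an illustration only: `buildServices` is a hypothetical function, and it prints the definition as JSON for inspection rather than writing a YAML file.

```javascript
// Hypothetical generator (an assumption, not part of the original setup):
// builds the same service layout as the YAML above for any number of nodes.
function buildServices(nodeCount) {
  var services = {
    nginx: { image: 'carmark/nginx', links: [] },
    redis: { image: 'redis' }
  };
  for (var i = 1; i <= nodeCount; i++) {
    var name = 'node' + i;
    // Every node container links to redis...
    services[name] = { image: 'carmark/node', links: ['redis'] };
    // ...and nginx links to every node container under the same alias.
    services.nginx.links.push(name + ':' + name);
  }
  return { version: '2', services: services };
}

console.log(JSON.stringify(buildServices(3), null, 2));
```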

With a single command, Hyper_ Compose will build the required images, expose the required ports, and run and link the containers as defined in the YAML. All you need to do is run docker-compose up! Your 5-container application is up and running. Hit your host URL on port 80 and you have your view counter!

One of the significant features of Docker Compose is the ability to dynamically scale a container. Using the command docker-compose scale node=5, one can scale the number of containers to run for a service. If you had a Docker-based microservices architecture, you could easily scale specific services dynamically depending on the load distribution requirements. Ideally, I would have preferred defining 1 node service and scaling it up using Docker Compose, but I haven’t figured out a way to adjust the Nginx configuration dynamically. Please leave a comment if you have any thoughts on this. (UPDATE: See comments below for approaches to maintaining a dynamic Nginx configuration)
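
One possible direction for the dynamic configuration problem is to generate the `upstream` block from the current list of node hosts instead of writing it by hand. The following is a minimal sketch under that assumption, and it is not necessarily one of the approaches mentioned in the comments. `renderUpstream` is a hypothetical function, and discovering the hosts, writing the file, and reloading Nginx (for example with `nginx -s reload`) are left out.

```javascript
// Hypothetical renderer (an assumption for illustration): produces an
// upstream block in the same shape as the hand-written configuration.
function renderUpstream(name, hosts, port) {
  var servers = hosts.map(function (host) {
    return '    server ' + host + ':' + port +
      ' weight=10 max_fails=3 fail_timeout=30s;';
  });
  return ['upstream ' + name + ' {', '    least_conn;']
    .concat(servers, ['}'])
    .join('\n');
}

// Example: five node containers after scaling up.
var block = renderUpstream(
  'node-app',
  ['node1', 'node2', 'node3', 'node4', 'node5'],
  8080
);
console.log(block);
```

The host names here follow the node1..node3 naming used earlier; with a single scaled service the actual container names would differ and would have to be looked up at runtime.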