- Market Share

- General Testrunner Ergonomics

- e2e testing perspective

- Debugging

- TypeScript Support

- Parallelization

Test Runner market share, as per developer survey:

(source: State of JavaScript 2019)

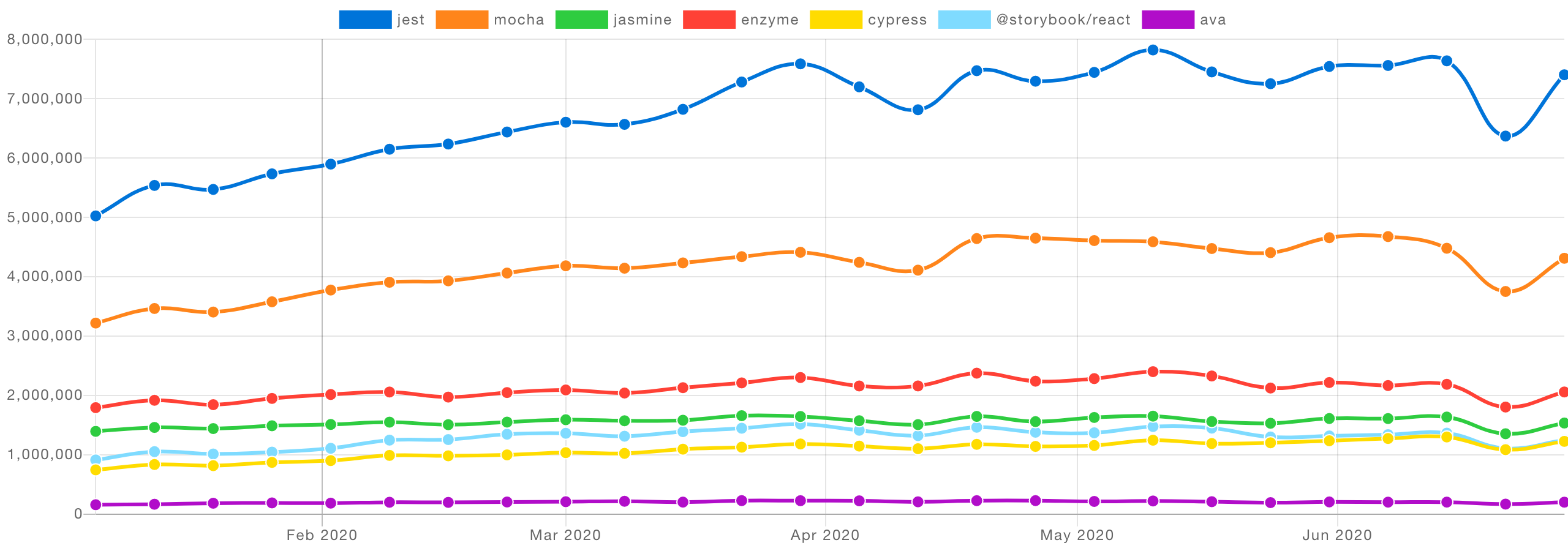

NPM Downloads:

(source: npm trends)

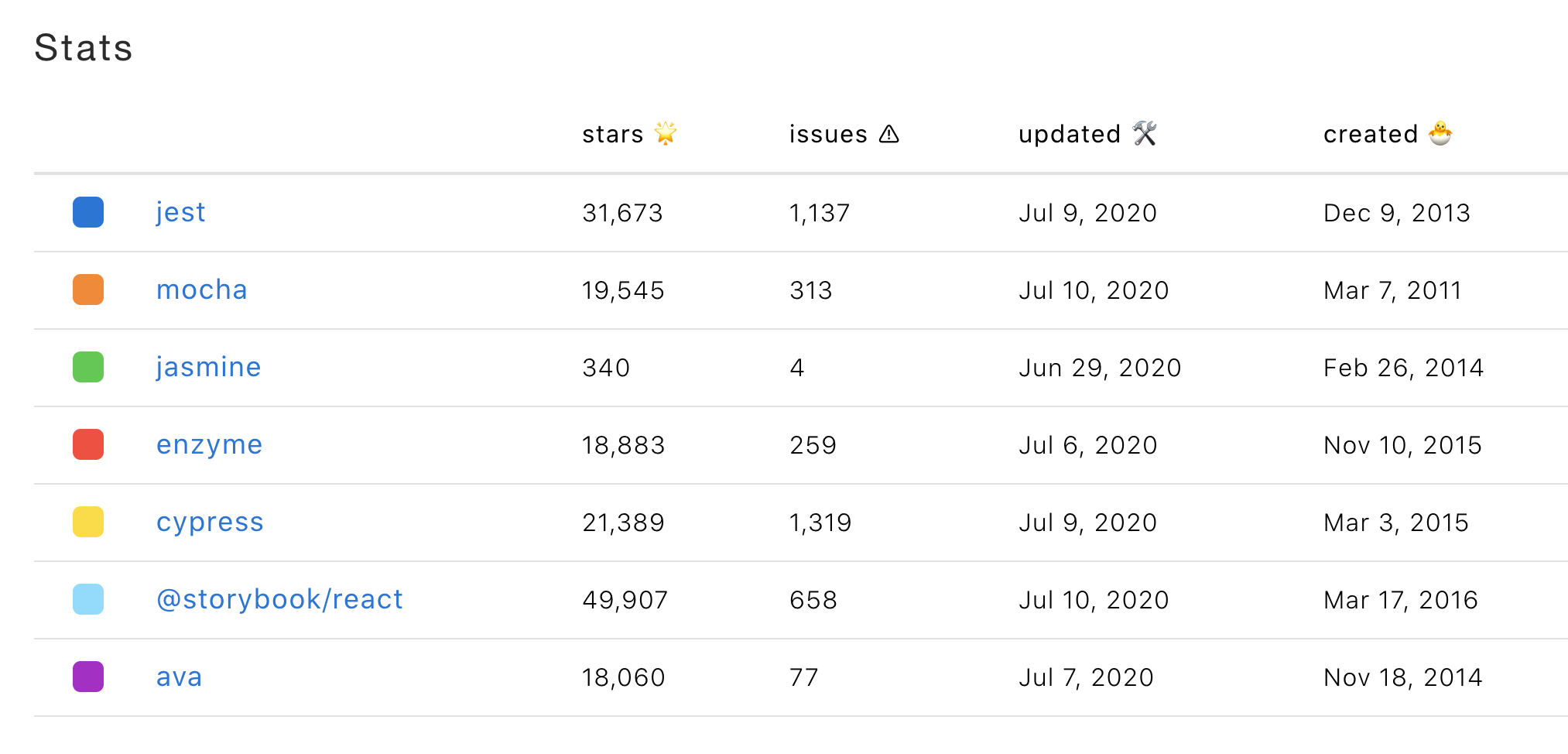

GitHub stats:

(source: npm trends)

Take-Aways:

- Enzyme uses jest internally, so jest downloads include all Enzyme downloads as well

- Mocha is on par with Jest

- There are 4 "just testrunners" in use today: Jest, AVA, Mocha, Jasmine. Other "testing solutions" either rely on one of these testrunners and/or provide a very different core value (like "cypress").

For the rest of this document, we'll look into Jest, AVA, Mocha and Jasmine.

For TestRunner ergonomics, I find the following lists fascinating to read:

Below is a rough summary of the concerns raised about the current test-running solutions.

- Fast & Lean! Fast to install, fast to run: #6 jest bug, #9 jest bug, make jest small! bug

- First-class support for ESModules: #1 jest bug, #3 ava bug

- Capable programmatic API that makes it possible to fully inspect the set of tests, their expected results, timeouts, environments and so on. This is to power future test-related tooling (linters, flakiness dashboards, test explorers, etc.): jest bug, jest blogpost

- Sourcemaps support: #3 jasmine bug

- Optional opt-out from global poisoning: #5 mocha bug

- Suites support: #1 ava bug

- Async suites: popular closed jest bug
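Several of the concerns above (suites, no global poisoning, a programmatic API for inspecting tests) can be illustrated with a small sketch. Everything here is hypothetical: `createRunner`, `describe`, `it` and `list` are illustrative names for this document only, not the API of Jest, AVA, Mocha or Jasmine. The sketch only collects tests; it does not run them.

```typescript
// Hypothetical sketch: these names do not come from any existing test runner.
interface TestInfo {
  fullTitle: string;
  timeoutMs: number;
}

interface Suite {
  title: string;
  suites: Suite[];
  tests: { title: string; timeoutMs: number; body: () => void | Promise<void> }[];
}

function createRunner(defaultTimeoutMs: number = 30000) {
  const root: Suite = { title: "", suites: [], tests: [] };
  let current = root;

  // Registers a nested suite; tests declared inside `define` belong to it.
  function describe(title: string, define: () => void): void {
    const suite: Suite = { title, suites: [], tests: [] };
    current.suites.push(suite);
    const parent = current;
    current = suite;
    try {
      define();
    } finally {
      current = parent;
    }
  }

  // Registers a test in the suite that is currently being defined.
  function it(title: string, body: () => void | Promise<void>, timeoutMs: number = defaultTimeoutMs): void {
    current.tests.push({ title, timeoutMs, body });
  }

  // Programmatic inspection: flattens the suite tree without running anything.
  function list(suite: Suite = root, prefix: string[] = []): TestInfo[] {
    const path = suite.title ? [...prefix, suite.title] : prefix;
    const found: TestInfo[] = [];
    for (const test of suite.tests) {
      found.push({ fullTitle: [...path, test.title].join(" > "), timeoutMs: test.timeoutMs });
    }
    for (const child of suite.suites) {
      found.push(...list(child, path));
    }
    return found;
  }

  return { describe, it, list };
}
```

Because `describe` and `it` are returned from `createRunner` rather than installed as globals, a test file would import them explicitly, and tooling such as a test explorer could call `list()` to enumerate tests and their timeouts.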

These come from my experimentation with running Playwright & Puppeteer tests, as well as the client interviews that we participated in together with Arjun.

The following tasks are pretty common, yet are hard to accomplish with any of the 4 testrunners out there:

- Set up cross-browser testing to satisfy the following scenarios:

  - write a single test that captures a screenshot and run it across 3 browsers.

  - configure some subtests to run in certain browsers only ("regression tests")

  - support running tests with `playwright-webkit`, `playwright-firefox` and `playwright-chromium`. (according to NPM download stats, at least 1/3 of our users use per-browser playwright packages)

- Image and text expectations are first-class citizens.

  - easy to save image and text snapshots per-browser, per-platform

  - easy to configure custom snapshot strategies that depend on my environments / test specifics (e.g. Playwright snapshots are the same across OS'es)

  - generate snapshot name automatically based on the test in a reliable way. Make sure snapshots won't clash across tests!

- TestRunner artifacts are first-class citizens.

  - set up all snapshot diffs, HTML reports, screencasts, DOM snapshots, etc. to go to a single `//artifacts` folder.

  - make all plugins write to the same `//artifacts` folder as well

  - the `//artifacts` folder is what we upload on CI after every run

- HTML & JUnit reports are first-class citizens and are generated after each run into the same `//artifacts` folder.

- Run tests in parallel in a highly-efficient manner (e.g. re-using browser instance and using browser contexts as a test isolation primitive).

  - It should be trivial to re-use browser across parallel workers as well. (this is one of the top-requested issues in ava)

- Collect fail-over artifacts. For example, it's tedious to set up jest to take a page screenshot only after all 3 retries of the test failed.

- Per-test retries. Even though we believe that tests should not be flaky, pragmatic retries are still very useful.
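To make the snapshot-naming requirement from the list above more concrete, here is a minimal sketch of deriving a snapshot path from the test's position in the suite tree, the browser, and (optionally) the platform. All names (`SnapshotContext`, `snapshotPath`, `claimSnapshotPath`) and the `artifacts/snapshots` layout are assumptions made for illustration, not an existing test runner feature.

```typescript
// Hypothetical sketch: types, helpers and folder layout are illustrative only.
interface SnapshotContext {
  suitePath: string[];  // titles of the enclosing suites, outermost first
  testTitle: string;
  browserName: string;  // e.g. "chromium", "firefox", "webkit"
  platform: string;     // e.g. "linux", "darwin", "win32"
  perPlatform: boolean; // false when snapshots are identical across OSes
}

// Turns a free-form title into a file-system-friendly fragment.
function slug(title: string): string {
  return title.toLowerCase().replace(/[^a-z0-9]+/g, "-").replace(/^-+|-+$/g, "");
}

// Builds a deterministic path from the test identity and its environment.
function snapshotPath(ctx: SnapshotContext, index: number, extension: string): string {
  const variant = ctx.perPlatform ? `${ctx.browserName}-${ctx.platform}` : ctx.browserName;
  const fileName = `${slug(ctx.testTitle)}-${index}-${variant}.${extension}`;
  return ["artifacts", "snapshots", ...ctx.suitePath.map(slug), fileName].join("/");
}

// Slugs can collide (e.g. "a b" and "a-b"), so fail loudly instead of overwriting.
const claimedPaths = new Set<string>();

function claimSnapshotPath(ctx: SnapshotContext, index: number, extension: string): string {
  const path = snapshotPath(ctx, index, extension);
  if (claimedPaths.has(path)) {
    throw new Error(`Snapshot path clash: ${path}`);
  }
  claimedPaths.add(path);
  return path;
}
```

With `perPlatform` set to `false`, a test titled "should render the form!" in a suite "Login page" running in webkit would map to `artifacts/snapshots/login-page/should-render-the-form-0-webkit.png`; the explicit clash check is one possible way to guarantee that two tests never share a snapshot file.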

Ideally, debugging tests is no different from any other node.js debugging. If node.js debugging is already set up in VSCode, or a CLI-based approach with the `--inspect-brk` node flag is in use, having the same experience in tests would benefit users.

Today, this is not the case.

- Jest: VSCode debugging requires a custom launch config in VSCode; the `--inspect-brk` docs are tedious.

- Mocha implements a custom "debug" command for the framework. According to StackOverflow, "how do I debug mocha tests?" is still a popular question.

- AVA implements the "debug" command as well.

- Jasmine doesn't have any debugging story and falls back to manually running jasmine-cli with node with `--inspect-brk`.

However, in all of the testrunner debugging experiences:

- when using the `--inspect-brk` approach, I ended up at a breakpoint in some test runner internals

- everywhere besides jest, the test runner wouldn't disable the timeout, so the test would time out while I was debugging it
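The timeout problem could be addressed by having the runner check whether the Node.js inspector is active. The sketch below relies on `inspector.url()` from Node's built-in `inspector` module, which returns `undefined` when the inspector is not active. The helper names (`isInspectorActive`, `effectiveTimeout`) and the "0 means no timeout" convention are assumptions of this sketch, not the behavior of any of the four runners.

```typescript
import * as inspector from "node:inspector";

// Hypothetical helper: true when node was started with --inspect / --inspect-brk
// or the inspector was opened programmatically.
function isInspectorActive(): boolean {
  return inspector.url() !== undefined;
}

// Returns the timeout a runner would actually enforce.
// Assumption of this sketch: 0 means "no timeout".
function effectiveTimeout(configuredMs: number, debugging: boolean = isInspectorActive()): number {
  return debugging ? 0 : configuredMs;
}
```

A runner built this way would keep the configured timeout in regular runs and silently drop it under `--inspect-brk`, so a test paused at a breakpoint is not reported as timed out.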

TypeScript support is very important, given how big TypeScript adoption is. For example, AVA was faced with a huge demand to run .ts files: avajs/ava#1109 (this is #2 overall ava bug, closed now).

Today's TypeScript support across test runners is powered by 3rd party modules:

- jest: supports TypeScript with `ts-jest`; also requires DefinitelyTyped installation for the environments you use.

- ava: basic support via `@ava/typescript`.

- mocha: powered by `ts-mocha` (which relies upon `ts-node`)

- jasmine: powered by `jasmine-ts` (which relies upon `ts-node`)

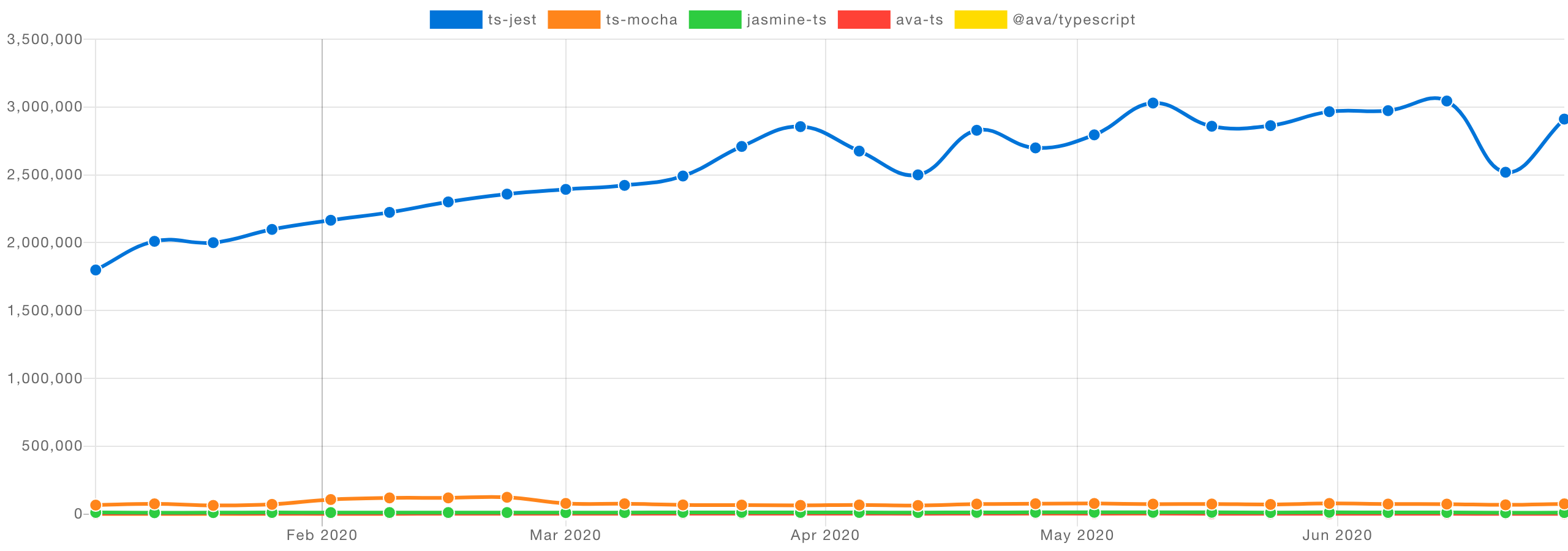

However, almost everywhere besides Jest, TypeScript-running solutions are very unpopular:

It's unclear why, but one reason might be the jest-jsdom-react-typescript combination, which is very popular and brings TypeScript into jest testing land.

Test parallelization is a very popular topic in the front-end world:

- jest runs in parallel by default (that's why it's important to pass `--runInBand` for e2e tests on CI)

- ava runs tests in parallel by default

- mocha recently released long-awaited parallel support: announcement

All current test runners use child processes to parallelize jobs.

PROs:

- the only "real" way to parallelize work. CPU-heavy node.js tests can be effectively parallelized

CONs:

- For the users, resource sharing between tests is troublesome. E.g. re-using a single browser between workers, re-using a single database connection, and so on is hard: jest workaround, jest bug, ava stack-overflow, ava bug

- Depending on the parallelization approach, test performance varies: ava.js benchmarks

However, browser e2e-tests are not CPU-heavy: the majority of the work is happening in the driven entity (browser, database, other microservices). Node.js tests are purely orchestrating (e.g. with playwright) and running assertions (e.g. with jest-expect or chai).

This makes it possible to parallelize tests in a single node.js process, relying on async I/O. This approach is currently being explored in playwright's testrunner.

PROs:

- easy for users to share resources across workers

- fastest performance

CONs:

- might not be efficient if there's a lot of CPU work in node.js process
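The single-process approach can be sketched as a fixed number of async "lanes" that pull tests from a shared queue and all receive the same resource object (for example, one browser instance). This is a minimal illustration under stated assumptions: `runConcurrently`, `ConcurrentTest` and `Outcome` are hypothetical names, and this is not how playwright's testrunner is actually implemented.

```typescript
// Hypothetical sketch of in-process parallelism built on async I/O.
type Outcome = "passed" | "failed";

interface ConcurrentTest<R> {
  title: string;
  body: (resource: R) => Promise<void>;
}

async function runConcurrently<R>(
  tests: ConcurrentTest<R>[],
  resource: R,
  concurrency: number,
): Promise<Outcome[]> {
  const outcomes: Outcome[] = new Array(tests.length);
  let next = 0;

  // Each lane repeatedly takes the next unclaimed test until the queue is empty.
  // Node.js runs this code on a single thread, so `next++` needs no locking.
  async function lane(): Promise<void> {
    while (next < tests.length) {
      const index = next++;
      try {
        await tests[index].body(resource);
        outcomes[index] = "passed";
      } catch {
        outcomes[index] = "failed";
      }
    }
  }

  const lanes: Promise<void>[] = [];
  for (let i = 0; i < Math.min(concurrency, tests.length); i++) {
    lanes.push(lane());
  }
  await Promise.all(lanes);
  return outcomes;
}
```

Since every lane lives in the same process, sharing a browser or database connection is just a matter of passing the same object to each test. The trade-off matches the CON listed above: a test body that does heavy synchronous CPU work would block all lanes at once.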