⚠️ Note 2023-01-21

Some things have changed since I originally wrote this in 2016. I have updated a few minor details, and the advice is still broadly the same, but there are some new Cloudflare features you can (and should) take advantage of. In particular, pay attention to Trevor Stevens' comment here from 22 January 2022, and Matt Stenson's useful caching advice. In addition, Backblaze, with whom Cloudflare are a Bandwidth Alliance partner, have published their own guide detailing how to use Cloudflare Workers to cache content from B2 private buckets. That is worth reading, but should there be any doubt as to whether using Cloudflare's CDN is permitted, note this statement at the top of that article: "Please Note: Cloudflare can be used as a CDN for a B2 bucket without workers if the bucket is public, not private."

I've been using Backblaze for a while now as my online backup service. I have used a few others in the past. None were particularly satisfactory until Backblaze came along.

It is keenly priced at a flat $7 per month (or $70 a year) for unlimited backup (I've currently got just under half a terabyte backed up). It has a fast, reliable client. The company is transparent about its operations and generous with its knowledge sharing. To me, this says they understand their customers well. I've never had reliability problems and everything about the outfit exudes a sense of simple, quick, solid quality. The service has even saved the day on a couple of occasions where I've lost files.

Safe to say, I'm a happy customer. If you're not already using Backblaze, I highly recommend you do.

So when Backblaze announced they were getting into the cloud storage business, taking on the likes of Amazon S3, Microsoft Azure, and Google Cloud, I paid attention. Even if the cost were the same, or a little bit more, I'd be interested because I like the company. I like their product, and I like their style.

What I wasn't expecting was for them to be cheaper. Much cheaper. How about roughly a quarter of the price of S3 per GB? Don't believe me? Take a look. Remarkable.

What's more, they offer a generous free tier of 10 GB free storage and 1 GB free download per day.

If it were any other company, I might think they're a bunch of clowns trying it on. But I know from my own experience and following their journey, they're genuine innovators and good people.

B2 is pretty simple. You can use their web UI, which is decent. Or you can use Cyberduck, which is what I use; it is free and of high quality. There is also a command-line tool, a number of other integrated tools and, of course, a web API.

You can set up a "vanity" URL for your public B2 files. Do it for free using Cloudflare. There's a PDF [1.3 MB] documenting how.

You can also configure Cloudflare to aggressively cache assets served by your B2 service. It is not immediately obvious how to do this, and it took a bit of poking around to set up correctly.

By default, B2 serves files with headers that prevent caching: cache-control: max-age=0, no-cache, no-store. These cause Cloudflare to skip caching of assets. You can see this happening by looking for the cf-cache-status: MISS header.

To work around this problem, you can use Cloudflare's Page Rules to specify an "Edge Cache TTL". I won't explain what that means here as it is covered in depth on the Cloudflare blog.

So, to cache your B2 assets, you need to create a Page Rule that matches all files on your B2 domain. For example:

files.silversuit.net/*

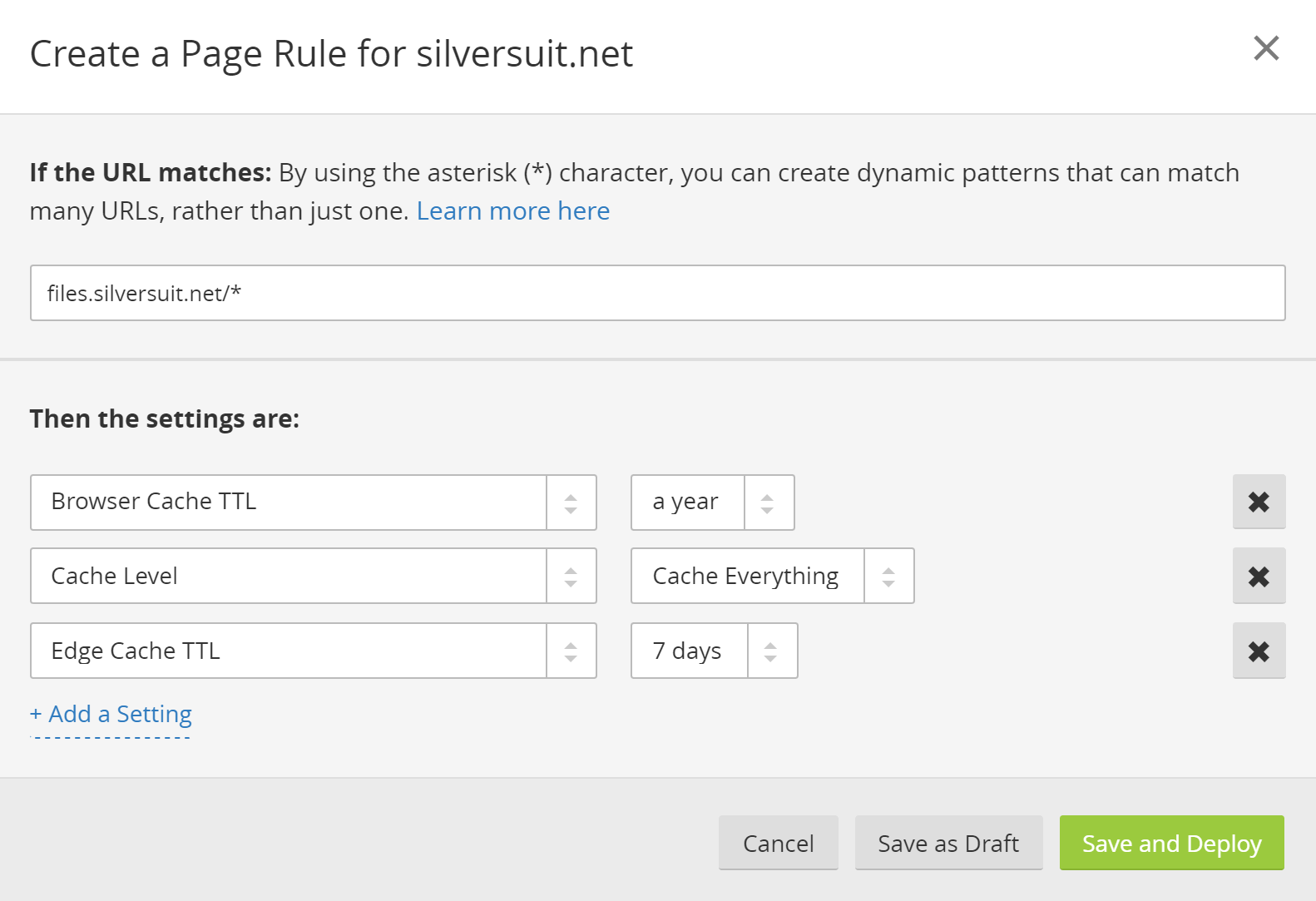

You then need to add your cache settings. I have Cache Level set to Cache Everything; Browser Cache TTL set to a year; Edge Cache TTL set to 7 days. I'm caching aggressively here, but you can tweak these settings to suit. Here's a screenshot:

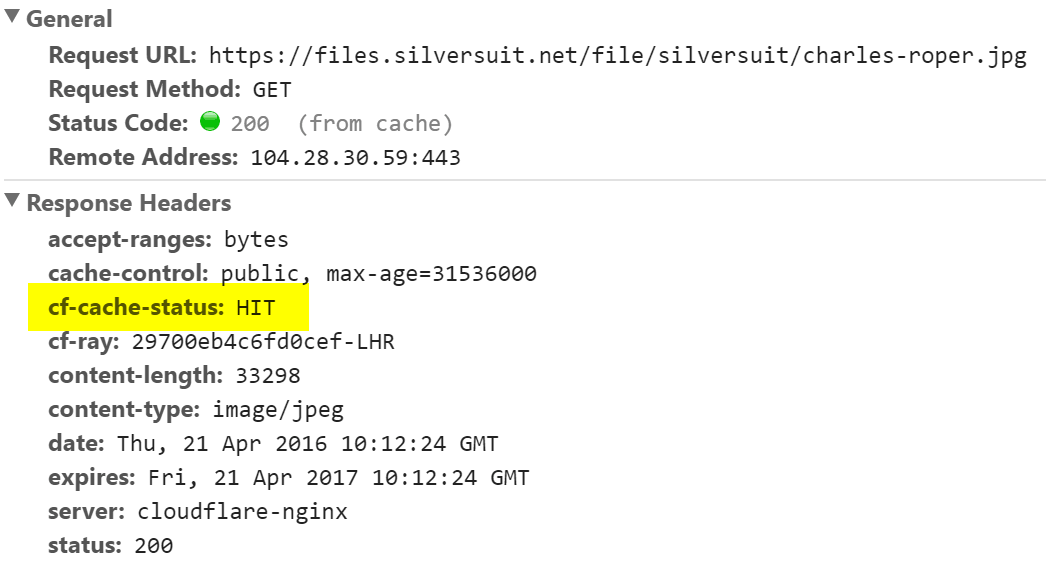
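For anyone who would rather script the rule than click through the dashboard, here is a minimal sketch of the same settings expressed as a Page Rule payload. The field names (targets, actions, cache_level, edge_cache_ttl, browser_cache_ttl) are my recollection of Cloudflare's v4 Page Rules API and should be treated as assumptions to check against the current API reference; page_rule_payload is a hypothetical helper, and nothing here is sent to Cloudflare.

```python
import json

SECONDS_PER_DAY = 24 * 60 * 60


def page_rule_payload(url_pattern, edge_ttl_days=7, browser_ttl_days=365):
    """Build a Page Rule body mirroring the settings described above.

    Assumption: the key names follow Cloudflare's v4 Page Rules API as I
    remember it. Verify them before sending this anywhere.
    """
    return {
        "targets": [
            {
                "target": "url",
                "constraint": {"operator": "matches", "value": url_pattern},
            }
        ],
        "actions": [
            # Cache Level: Cache Everything
            {"id": "cache_level", "value": "cache_everything"},
            # Edge Cache TTL: 7 days, expressed in seconds
            {"id": "edge_cache_ttl", "value": edge_ttl_days * SECONDS_PER_DAY},
            # Browser Cache TTL: a year, expressed in seconds
            {"id": "browser_cache_ttl", "value": browser_ttl_days * SECONDS_PER_DAY},
        ],
        "status": "active",
    }


if __name__ == "__main__":
    print(json.dumps(page_rule_payload("files.silversuit.net/*"), indent=2))
```

The TTLs work out to 604800 seconds for the edge and 31536000 seconds for the browser, which is how the 7 days and one year above would be written in seconds.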

To check that it's working correctly, use DevTools to look for the cf-cache-status: HIT header.
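If you prefer a script to DevTools, the same check can be sketched in a few lines of Python using only the standard library. The helper names (cache_status, served_from_edge, fetch_headers) are hypothetical, and I'm assuming Cloudflare also returns the cf-cache-status header on HEAD requests.

```python
from urllib.request import Request, urlopen


def cache_status(headers):
    """Return the cf-cache-status value in upper case, or None if absent."""
    for name, value in headers.items():
        # Header names are case-insensitive, so compare in lower case.
        if name.lower() == "cf-cache-status":
            return value.strip().upper()
    return None


def served_from_edge(headers):
    """True only when Cloudflare reports that the asset came from its cache."""
    return cache_status(headers) == "HIT"


def fetch_headers(url):
    """Fetch just the response headers for a URL with a HEAD request."""
    request = Request(url, method="HEAD")
    with urlopen(request) as response:
        return dict(response.headers.items())
```

Calling served_from_edge(fetch_headers(url)) against one of your own files should return False on a cold cache and True once Cloudflare has stored the asset, so it is worth requesting the same URL a couple of times before concluding the rule isn't working.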

So, with that, you're making use of already very inexpensive B2 storage coupled with Cloudflare's free CDN to serve your assets almost entirely for free. And it's not like these are rinky-dink services that are going to fall over regularly; these are both high-quality, reputable companies.

What a time to be alive, eh?

Did basically the same thing recently but came across a few tips that might be helpful to others.

First, you can configure the cache-control headers at the B2 origin on a per-bucket basis (as well as per file at upload). Once you do, Cloudflare should respect those headers by default and use them to cache your content in the CDN, without needing to use up a Page Rule. Instructions to do this can be found here. Also, it looks like Backblaze tweaked their cache header policy recently: buckets created after Sept 8, 2021 no longer include any cache-control headers by default, while those created before that date still do.
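As a rough illustration of the per-bucket setting, the sketch below builds the JSON you would paste into the bucket's info. It assumes B2 reads a "cache-control" key from the bucket info, which is my understanding of the Backblaze instructions; bucket_info_json is a hypothetical helper, and the one-day max-age is just an example value.

```python
import json


def bucket_info_json(max_age_seconds):
    """Return bucket info JSON carrying a cache-control max-age.

    Assumption: B2 applies the "cache-control" key found in a bucket's
    info to the files it serves from that bucket.
    """
    if max_age_seconds < 0:
        raise ValueError("max_age_seconds must not be negative")
    return json.dumps({"cache-control": f"max-age={max_age_seconds}"})


if __name__ == "__main__":
    # One day, expressed in seconds.
    print(bucket_info_json(24 * 60 * 60))
```

With a value like this set at the origin, the header Cloudflare sees is no longer one that forbids caching, which is why a Page Rule becomes unnecessary.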

Next, the Backblaze instructions warn that you will want to configure Cloudflare to only allow your buckets to be fetched from your domain. They recommend using a page rule to accomplish this, which does work, but I found another option that saves you a page rule. Instead, I used a Transform Rule, specifically a URL Rewrite configured to prefix the bucket path to all requests for the Backblaze B2-backed domain. The URLs change to include only the asset you want to retrieve from the B2 bucket, excluding the bucket path. For example, files.silversuit.net/file/silversuit/charles-roper.jpg becomes files.silversuit.net/charles-roper.jpg.
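To make the effect of the rewrite concrete, here is a small Python model of what the rule does to each incoming path. It is only an illustration: rewrite_path is a hypothetical function, and the real work is done by Cloudflare, where I'd assume a dynamic rewrite expression along the lines of concat("/file/silversuit", http.request.uri.path).

```python
def rewrite_path(request_path, bucket="silversuit"):
    """Prefix the B2 bucket path onto the path a visitor requested."""
    if not request_path.startswith("/"):
        request_path = "/" + request_path
    return f"/file/{bucket}{request_path}"


if __name__ == "__main__":
    # The short public URL maps onto the full B2 path.
    print(rewrite_path("/charles-roper.jpg"))
    # A request aimed at some other bucket still ends up inside "silversuit".
    print(rewrite_path("/file/another-bucket/secret.jpg"))
```

Because every request gets the prefix, a path that tries to name a different bucket is looked up inside your own bucket instead, which is how this approach restricts the domain to your bucket without a page rule.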

On the Cloudflare domain page under Rules > Transform Rules > URL Rewrite > Create transform rule > URL Rewrite