A DynamoDB stream is an ordered flow of information about changes to items in an Amazon DynamoDB table. In this example, Lambda polls the table's stream (each shard, at a base rate of four times per second) and invokes the function whenever it finds new stream records.
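To make the polling model concrete, here is a toy, in-memory sketch of what the event source mapping does: read a batch of records from a shard, wrap them in a `{"Records": [...]}` event, and call the handler. The shard list and the names `poll_shard`, `run_poller`, and `max_polls` are hypothetical. The real service also handles retries, checkpointing, and multiple shards, and this sketch does not model any of that.

```python
import time
from typing import Callable, List


def poll_shard(shard: List[dict], position: int, batch_size: int,
               handler: Callable[[dict, object], object]) -> int:
    """One poll: read up to batch_size records after `position`.

    If any were found, pass them to the handler wrapped in a {"Records": [...]}
    event (the shape lambda_function.py expects) and return the new position.
    """
    batch = shard[position:position + batch_size]
    if batch:
        handler({"Records": batch}, None)  # None stands in for the context object
    return position + len(batch)


def run_poller(shard: List[dict], handler, batch_size: int = 100,
               polls_per_second: int = 4, max_polls: int = 8) -> int:
    """Poll at a fixed rate, starting from the oldest record (like TRIM_HORIZON)."""
    position = 0
    for _ in range(max_polls):
        position = poll_shard(shard, position, batch_size, handler)
        time.sleep(1 / polls_per_second)  # 4 polls per second by default
    return position
```

The default `batch_size=100` mirrors the `BatchSize` in the template. Starting at position 0 roughly corresponds to `StartingPosition: TRIM_HORIZON`, which begins with the oldest record still in the stream.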


First, clone the SAM examples repository from GitHub:

# clone the examples

$ git clone https://github.com/awslabs/serverless-application-model

# change dir to dynamodb-process-stream-python3

$ cd serverless-application-model/examples/apps/dynamodb-process-stream-python3

# run the sam local generate-event to generate dynamodb stream event

$ sam local generate-event dynamodb > dynamodb-event.json

# invoke the lambda function

$ sam local invoke -e dynamodb-event.json dynamodbprocessstreampython

# package the lambda function

$ sam package --template-file template.yaml --s3-bucket crazyaws-lambda --output-template-file packaged.yaml

# deploy the lambda function to aws

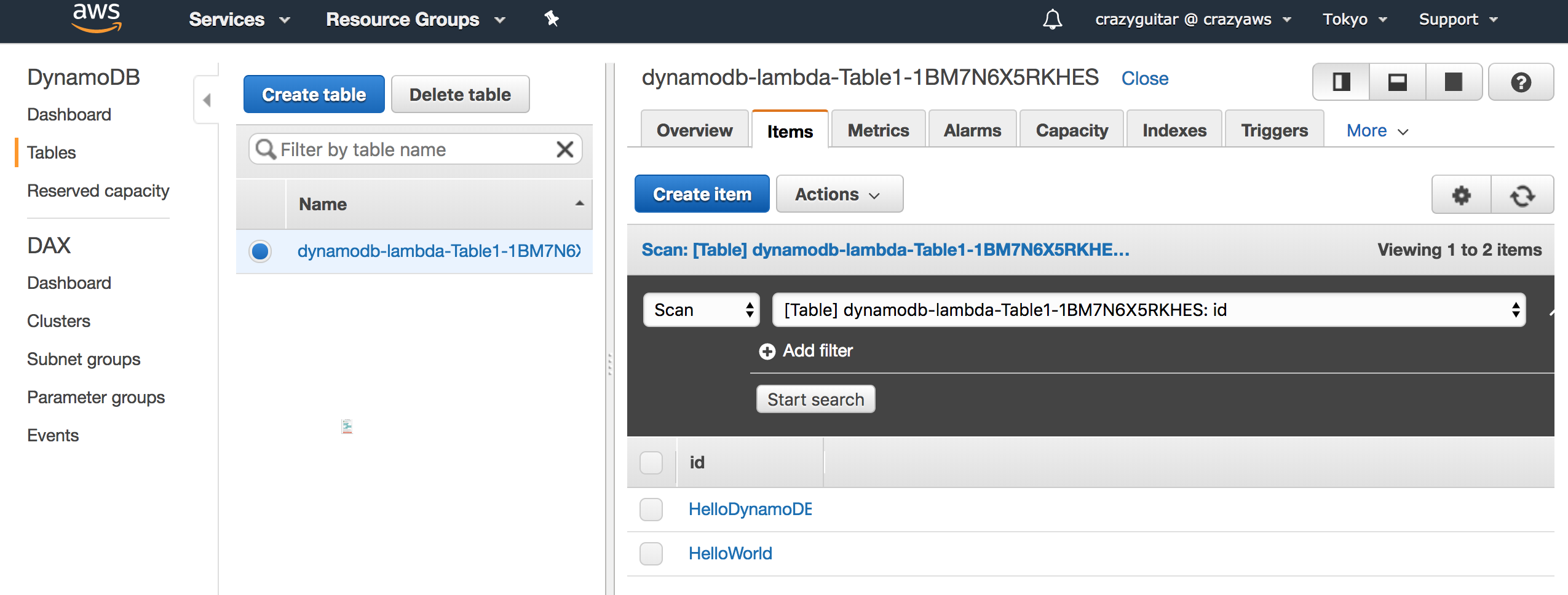

$ sam deploy --template-file packaged-template.yaml --stack-name dynamodb-lambda --capabilities CAPABILITY_IAMAfter run the command: sam deploy, we can find a dynamodb table on AWS. In this case, creating a item in table

can trigger the lambda function which we have created previously.

- Step 1: Go to DynamoDB console

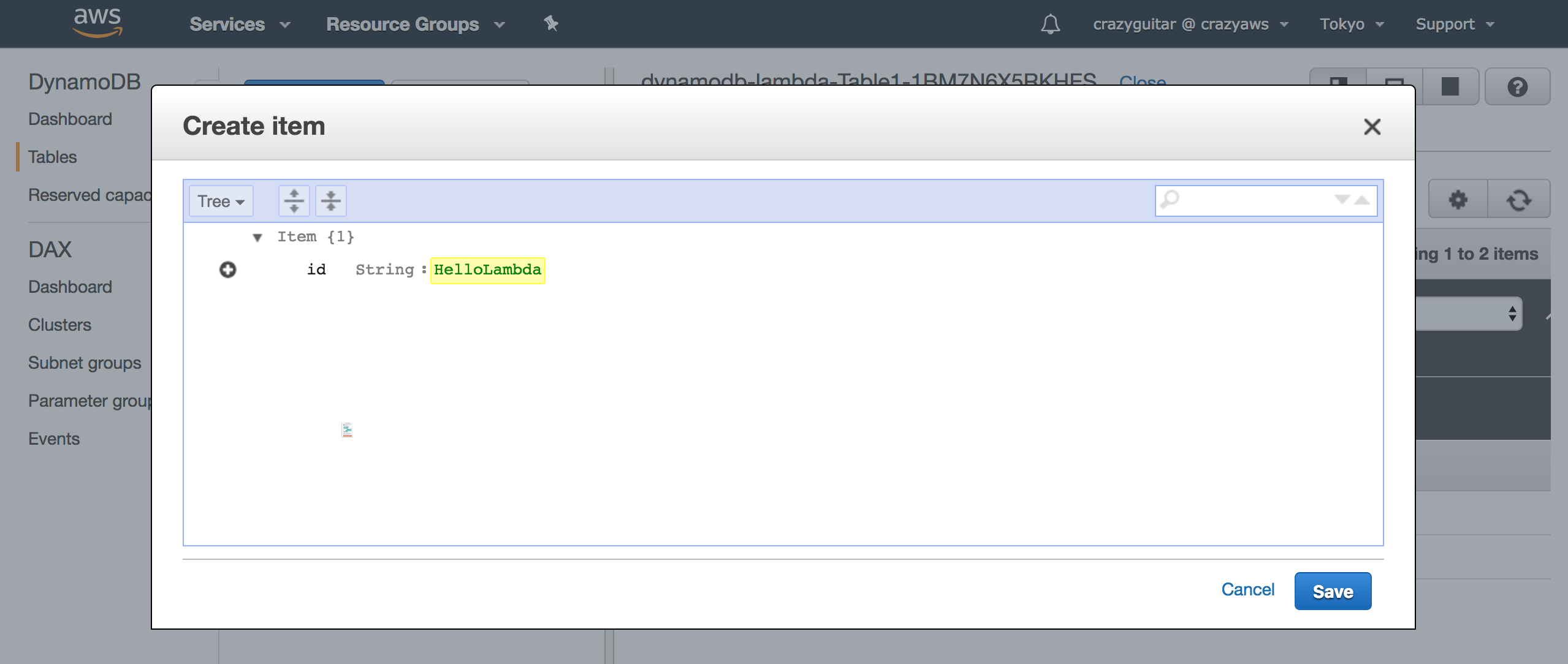

- Step 2: Create an Item in Table

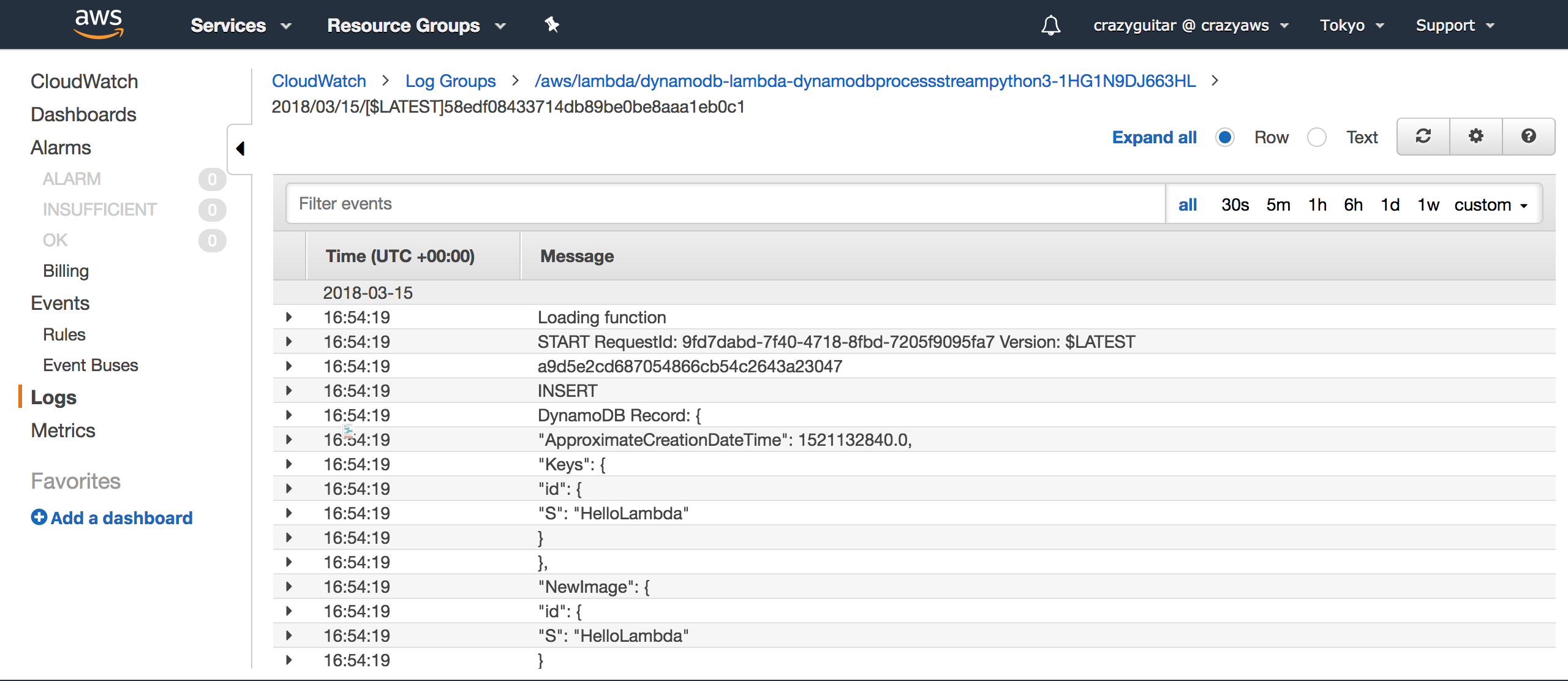

- Step 3: Go to CloudWatch console and click Logs, we can find that the lambda function has been triggered.
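Steps 1 to 3 can also be scripted. The sketch below assumes boto3 is installed with AWS credentials configured, and that the stack was deployed as `dynamodb-lambda` with the logical IDs from `template.yaml`. It resolves the generated table and function names through CloudFormation, writes an item, and prints the function's log events. The helper names, the `message` attribute, and the 30-second wait are hypothetical choices, not part of the original example.

```python
import time


def build_item(item_id: str, message: str) -> dict:
    """Build an item in DynamoDB's low-level attribute-value format.

    `id` (type S) is the table's hash key; `message` is a hypothetical extra attribute.
    """
    return {"id": {"S": item_id}, "message": {"S": message}}


def log_group_for(function_name: str) -> str:
    """Lambda writes its logs to a CloudWatch Logs group named /aws/lambda/<function name>."""
    return f"/aws/lambda/{function_name}"


def physical_id(stack_name: str, logical_id: str) -> str:
    """Look up the name CloudFormation generated for a resource in the template."""
    import boto3  # imported lazily so the pure helpers above work without boto3

    cfn = boto3.client("cloudformation")
    resp = cfn.describe_stack_resource(StackName=stack_name, LogicalResourceId=logical_id)
    return resp["StackResourceDetail"]["PhysicalResourceId"]


def put_item_and_print_logs(stack_name: str = "dynamodb-lambda",
                            item_id: str = "1",
                            message: str = "hello",
                            wait_seconds: int = 30) -> None:
    """Hypothetical scripted version of steps 1-3."""
    import boto3

    table_name = physical_id(stack_name, "Table1")
    function_name = physical_id(stack_name, "dynamodbprocessstreampython3")

    # Steps 1-2: write an item; DynamoDB appends an INSERT record to the stream.
    boto3.client("dynamodb").put_item(TableName=table_name,
                                      Item=build_item(item_id, message))

    # Step 3: wait (an arbitrary, assumed delay) for Lambda to poll the stream
    # and run, then print the first page of the function's log events.
    time.sleep(wait_seconds)
    resp = boto3.client("logs").filter_log_events(logGroupName=log_group_for(function_name))
    for log_event in resp["events"]:
        print(log_event["message"], end="")
```

Call `put_item_and_print_logs()` after `sam deploy`. If the function has not run yet, its log group may not exist, and `filter_log_events` raises a `ResourceNotFoundException`. Only the first page of results is printed because `nextToken` is not followed.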

template.yaml

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: 'AWS::Serverless-2016-10-31'
Description: An Amazon DynamoDB trigger that logs the updates made to a table.
Resources:
  dynamodbprocessstreampython3:
    Type: 'AWS::Serverless::Function'
    Properties:
      Handler: lambda_function.lambda_handler
      Runtime: python3.6
      CodeUri: .
      Description: An Amazon DynamoDB trigger that logs the updates made to a table.
      MemorySize: 128
      Timeout: 3
      Policies: []
      Events:
        DynamoDB1:
          Type: DynamoDB
          Properties:
            Stream:
              'Fn::GetAtt':
                - Table1
                - StreamArn
            StartingPosition: TRIM_HORIZON
            BatchSize: 100
  Table1:
    Type: 'AWS::DynamoDB::Table'
    Properties:
      AttributeDefinitions:
        - AttributeName: id
          AttributeType: S
      KeySchema:
        - AttributeName: id
          KeyType: HASH
      ProvisionedThroughput:
        ReadCapacityUnits: 5
        WriteCapacityUnits: 5
      StreamSpecification:
        StreamViewType: NEW_IMAGE
```

lambda_function.py

```python
import json

print('Loading function')


def lambda_handler(event, context):
    # print("Received event: " + json.dumps(event, indent=2))
    for record in event['Records']:
        print(record['eventID'])
        print(record['eventName'])
        print("DynamoDB Record: " + json.dumps(record['dynamodb'], indent=2))
    return 'Successfully processed {} records.'.format(len(event['Records']))
```

event.json

```json
{
  "Records": [
    {
      "eventID": "1",
      "eventVersion": "1.0",
      "dynamodb": {
        "Keys": {
          "Id": {
            "N": "101"
          }
        },
        "NewImage": {
          "Message": {
            "S": "New item!"
          },
          "Id": {
            "N": "101"
          }
        },
        "StreamViewType": "NEW_AND_OLD_IMAGES",
        "SequenceNumber": "111",
        "SizeBytes": 26
      },
      "awsRegion": "us-east-1",
      "eventName": "INSERT",
      "eventSourceARN": "arn:aws:dynamodb:us-east-1:account-id:table/ExampleTableWithStream/stream/2015-06-27T00:48:05.899",
      "eventSource": "aws:dynamodb"
    }
  ]
}
```
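In the sample event, the `NewImage` values use DynamoDB's typed format (for example `{"N": "101"}`), so a handler usually has to unwrap them before using them. The sketch below is a hand-written converter for the common types (S, N, BOOL, NULL, M, L, SS, NS) and could be called from `lambda_handler`. The names `from_dynamodb` and `new_images` are hypothetical. In a deployed function, boto3's `TypeDeserializer` (in `boto3.dynamodb.types`) covers the same ground more completely.

```python
from decimal import Decimal


def from_dynamodb(value: dict):
    """Convert one typed attribute value, e.g. {"N": "101"}, into plain Python."""
    [(type_tag, raw)] = value.items()  # each attribute value has exactly one type key
    if type_tag == "S":
        return raw
    if type_tag == "N":
        return Decimal(raw)  # numbers arrive as strings; Decimal avoids float rounding
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_dynamodb(v) for k, v in raw.items()}
    if type_tag == "L":
        return [from_dynamodb(v) for v in raw]
    if type_tag == "SS":
        return set(raw)
    if type_tag == "NS":
        return {Decimal(n) for n in raw}
    raise ValueError(f"unsupported attribute type: {type_tag}")


def new_images(event: dict) -> list:
    """Return each record's NewImage as a plain dict, skipping records without one."""
    images = []
    for record in event["Records"]:
        image = record["dynamodb"].get("NewImage")  # absent for REMOVE events or KEYS_ONLY streams
        if image is not None:
            images.append({name: from_dynamodb(attr) for name, attr in image.items()})
    return images
```

For example, `new_images(json.load(open("event.json")))` on the sample above returns `[{'Message': 'New item!', 'Id': Decimal('101')}]`.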