Well, because in past years most AI researchers didn't talk about this. The majority focused on squeezing out another 1% of ImageNet accuracy, even if that made the model 3x larger (which has its own advantages). But now we have good accuracy with models that are gigabytes in size, and we can't deploy them (which is even more of a problem on edge devices).

Umm.. Yes. While designing models, one thing researchers find particularly interesting is that most of the weights in neural networks are redundant. They don't contribute to accuracy (and sometimes they even decrease it).

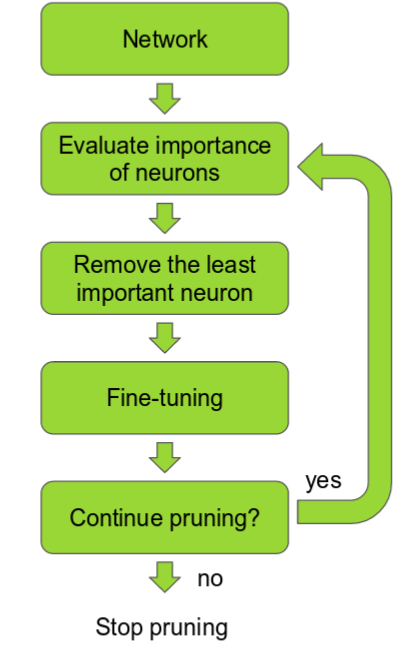

In pruning, we rank the neurons in the network according to how much they contribute. The ranking can be done according to the L1/L2 mean of neuron weights, their mean activations, the number of times a neuron wasn’t zero on some validation set, and other cr