- These are some thoughts I threw together (with a little help from my friends) around testing, and more specifically unit tests.

- Everything here is an opinionated statement. There are a zillion exceptions to these rules

- the following sections

- a review of an untested section of code, describe tests we would want to write for it.

- a review of hard-to-test code, refactor to make it testable.

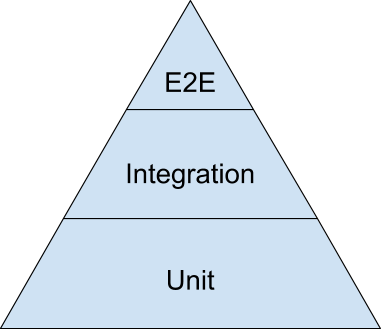

a test that covers a small unit of work, verifying it works as expect.

- we integrate with a lot of 3rd party APIs, what happens if one changes how it responds to us?

- a class depending on another class tests that the full setup works.

user actions, such as logging in to the site, can be tested to get a full round trip request verification.

- could be unit/integration/e2e

- fixed a bug? write a test

making data setup DRY and best practices on how to do it / where to put it / factory style. ~ Peter

this goes with small.

Factories are a class that will give you an instance of a model, where you can specify, if you wish, fields on it, but it does have defaults. Isolating the model instance building into factories allows for a lot of logic to live outside of the tests.

- If a model changes, we have a single location to update, no the thousands of tests.

Flaky tests are evil, they can be worse than having no tests, and you should destroy them with extreme prejudice. I suppose this is a variance on the “no side effects/isolated” bit as well though flaky can be for things like timezone issues (ex: a test only passes when it’s a particular time of day, etc). ~ Adam

Test names should express a specific requirement.

- Suggested format “unitOfWork_stateUnderTest_expectedBehavior”.

- Using this convention means we can answer to the following questions without reading the code of our test methods.

- What are the features and business logic of the application?

- Given a particular input or state, what’s the expected behaviour? ~ Tim

you should be able to change an implementation of an algorithm and your tests should still pass without modification ~ Adam

Code Coverage is a metric of how many lines of code are covered in our tests. If one path is not considered in the tests, those lines aren't counted. You can use this to discover areas of code with low amounts of testing. This shouldn't be a gold standard, since you can write tests that get complete code coverage and don't test anything.

it should take a few seconds to read the test (good naming) to understand what it's intending to verify.

- note: we have base test classes that do all the boiler plate in

core/utils/tests.py

the code of the test is arranged in 3 blocks:

- Arrange: setup the specific situation (possibly asserting it's as expected)

- Act: a single line, that does the action, and changes the state.

- Assert: assertions proving the new state is as expected. Bonus: this makes it easy to read if spaced out in 3 blocks

- testing one function, if it's a method on a class, you have to consider the class's state

- tests ideally should fail for exactly one reason. The more things a test tests, the more likely it’ll break for unrelated changes

if tests are slow, people don't run them before pushing to CI. pushing to CI just to find the tests break is slow, causes context switching, and slows down development.

- slow? probably not a unit test

they should be able to run in any order, we run our tests in parallel (for speed), so their order is undeterministic.

Write the test that fails. Then go adjust the code to make the test pass. (good for regression tests) This helps encourage testable code, keeping 3rd party code decoupled.

Running your tests on n-1 cores of your machine saves you time (the -1 is so we don't lock your machine up).

if we layout all permutations of types of input, and the expected output, it is a good way to cover a lot of surface area.

for input, expected in [

("input1", "output1"),

("input2", "output2"),

("input3", "output3"),

("input4", "output4"),

]:

# act

actual = func(input)

# asserts

assert actual == expecteda specific model setup described in a file you can load as data. The downside is it gets out of date from the model definitions, and update them is tidious.

the expected output of a test, stored in a seperate file. If you are testing a realllly big output is what you expect. compare it against a golden file (known to be correct)

- setUp gets called before each test function

- setUpTestData gets called at the beginning of the test class (once) Moving most Arrange (see arrange/act/assert) code to the latter shaved tens of minutes off our tests. (thanks Brendon)

it's a fully featured testing library (and it supports unittest classes!) link

pytest --exitfirst -vv --no-migrations -n 15

grid tests with permutations

@pytest.mark.parametrize("x", [0, 1])

@pytest.mark.parametrize("y", [2, 3])

@pytest.mark.parametrize("expected", [2, 3])

def test_foo(x, y, expected):

assert func(a, b) == expected@brendon and @nick, 30s to sell it.

- make a fake version of the part you don't want to cover in your test.

- check how the code acts when all those edge/error cases happen in the mocked object.

- there are libraries that do this for you: mock_requests/responses, moto, freezetime

ANY(use sparingly)- if module foo imports function bar from module baz, then you do @patch('foo.bar') and not @patch('baz.bar').

Lots of good stuff here. One of the things that I like about TDD is that it helps you to write better code and prevents you from writing implementations that are hard to test. Often it means keeping 3rd party code at arms length and keeping things pretty decoupled. Especially when unit testing.