This is a draft list of what we're thinking about measuring in Etsy's native apps.

Currently we're looking at how to measure these things with Espresso (Android) and KIF (iOS), and whether each metric can be measured in an automated way at all. We'd like to use these automated tests to build internal dashboards and alerts that catch regressions in these metrics. In the future, we'll also want to measure most of these with RUM (real user monitoring).
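Alerting on regressions needs a rule for deciding when a new test run is meaningfully worse than before. Here is a minimal sketch. It assumes each metric is recorded as one number per automated run (for example, launch time in milliseconds) and that lower values are better. It compares the latest value against the median of recent runs and flags it when it exceeds that baseline by more than a tolerance. The function name, the 10% tolerance, and the minimum history length are all hypothetical choices, not an existing tool.

```python
from statistics import median


def is_regression(history, latest, tolerance=0.10, min_runs=5):
    """Return True if `latest` is worse than the recent baseline.

    Hypothetical helper: assumes lower values are better (e.g. launch
    time in ms) and that `history` holds one value per previous run.
    """
    if len(history) < min_runs:
        # Not enough data for a stable baseline; don't alert yet.
        return False
    # The median is less sensitive than the mean to one noisy run.
    baseline = median(history)
    return latest > baseline * (1 + tolerance)


if __name__ == "__main__":
    launch_ms = [812, 798, 830, 805, 821]  # median is 812
    print(is_regression(launch_ms, 840))  # False: within 10% of 812
    print(is_regression(launch_ms, 950))  # True: more than 10% above
```

Runs on devices and emulators are noisy, so a median baseline plus a tolerance is only a simple starting point. A production alert might require several consecutive bad runs before firing.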

- App launch time - how long does it take from tapping the icon until the user can interact with the app?

- Time to complete critical flows - using automated tests, how long does it take to finish the checkout flow and other critical flows?

- Battery usage, including radio and GPS usage

- Peak memory allocation

- Frame rate - we need to figure out where we're dropping frames (and introducing scrolling jank). We should be able to dig into render, execute, and draw times.

- Memory leaks - using automated testing, can we find flows or actions that trigger a memory leak?

- An app version of Speed Index - how quickly the above-the-fold portion of the screen becomes visually complete over time.

- Time it takes for remote images to appear on the screen

- Time between tapping a link and being able to interact with the next screen

- Average time users spend looking at spinners

- API performance

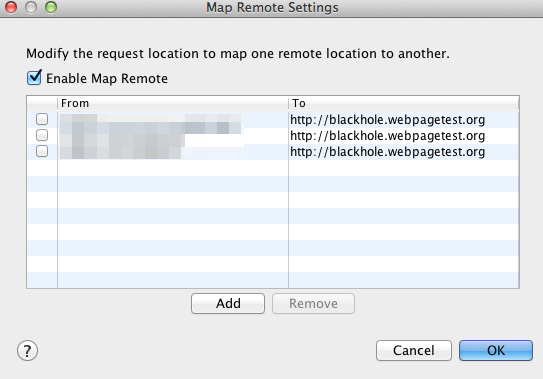

- WebView performance
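For the frame rate item, per-frame durations tell us more than an average FPS. A few long frames cause visible jank even when the average looks fine. Here is a minimal sketch. It assumes a 60 Hz display (a budget of about 16.7 ms per frame) and a list of frame durations in milliseconds collected during a scripted scroll. How those durations are captured is left open, and the function name is hypothetical. The sketch counts janky frames (frames over budget) and estimates how many display refreshes were missed.

```python
import math

FRAME_BUDGET_MS = 1000.0 / 60  # assumes a 60 Hz display


def frame_stats(durations_ms, budget_ms=FRAME_BUDGET_MS):
    """Summarize frame durations: janky frames and estimated dropped frames."""
    janky = 0
    dropped = 0
    for d in durations_ms:
        if d > budget_ms:
            janky += 1
            # A frame spanning k budgets occupies k refreshes, so k - 1
            # refreshes re-showed a stale frame instead of a new one.
            dropped += math.ceil(d / budget_ms) - 1
    return {"frames": len(durations_ms), "janky": janky, "dropped": dropped}


if __name__ == "__main__":
    print(frame_stats([16.0, 17.0, 40.0, 16.6]))
    # {'frames': 4, 'janky': 2, 'dropped': 3}
```

If the collection tool also reports render, execute, and draw times per frame, the same threshold check can be applied to each phase to see which one pushes a frame over budget.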
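An app version of Speed Index could borrow the web definition popularized by WebPageTest. First, sample the screen's visual completeness at a series of timestamps. Visual completeness is the fraction of the final above-the-fold rendering that is already visible. Then sum the area above that progress curve:

SI = ∫ (1 − VC(t)) dt

A lower score means the screen filled in faster. Here is a minimal sketch. It assumes the completeness samples already exist, for example from comparing screenshots captured during an Espresso or KIF run against the final frame. Capturing and scoring those screenshots is left open, and the function name is hypothetical.

```python
def speed_index(samples):
    """Approximate Speed Index from (time_ms, completeness) samples.

    `samples` must be sorted by time, with completeness in [0.0, 1.0].
    Completeness is treated as constant until the next sample (a step
    function). The last sample should be the moment the screen reached
    1.0; any time after it adds nothing to the score.
    """
    total = 0.0
    for (t0, vc0), (t1, _) in zip(samples, samples[1:]):
        # Area above the completeness curve over [t0, t1).
        total += (1.0 - vc0) * (t1 - t0)
    return total


if __name__ == "__main__":
    # Blank at 0 ms, half rendered at 500 ms, complete at 1000 ms.
    frames = [(0, 0.0), (500, 0.5), (1000, 1.0)]
    print(speed_index(frames))  # 750.0
```

Two screens that both finish at 1000 ms can score very differently. One that shows most of its content early scores lower than one that stays blank until the end, which is the behavior this metric is meant to capture.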
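"Average time looking at spinners" needs a precise definition before it can go on a dashboard. One subtlety is that a screen may show more than one spinner at once, so overlapping intervals should be merged rather than summed. Here is a minimal sketch. It assumes a hypothetical log of (shown_ms, hidden_ms) pairs per session or test run, and both function names are hypothetical. It computes how long at least one spinner was visible per session and then averages across sessions.

```python
def spinner_time_ms(intervals):
    """Total time at least one spinner was visible, in milliseconds.

    `intervals` holds (shown_ms, hidden_ms) pairs. Overlapping spinners
    are merged so the same wall-clock time is not counted twice.
    """
    total = 0
    span_start = span_end = None
    for start, end in sorted(intervals):
        if span_end is None or start > span_end:
            # No overlap: close out the previous merged span, if any.
            if span_end is not None:
                total += span_end - span_start
            span_start, span_end = start, end
        else:
            # Overlaps the current span: extend it if needed.
            span_end = max(span_end, end)
    if span_end is not None:
        total += span_end - span_start
    return total


def average_spinner_time_ms(sessions):
    """Mean spinner time across sessions (each a list of intervals)."""
    if not sessions:
        return 0.0
    return sum(spinner_time_ms(s) for s in sessions) / len(sessions)


if __name__ == "__main__":
    session_a = [(0, 300), (200, 500), (1000, 1100)]  # 500 + 100 ms
    session_b = []  # no spinners shown
    print(average_spinner_time_ms([session_a, session_b]))  # 300.0
```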

The team over at AT&T has thought a lot about this. It's worth reaching out to Michael Merritt to pick his brain about what they've done: http://www.research.att.com/people/Merritt_Michael/?fbid=eZmepfpOvRb