Last active June 1, 2024 05:11


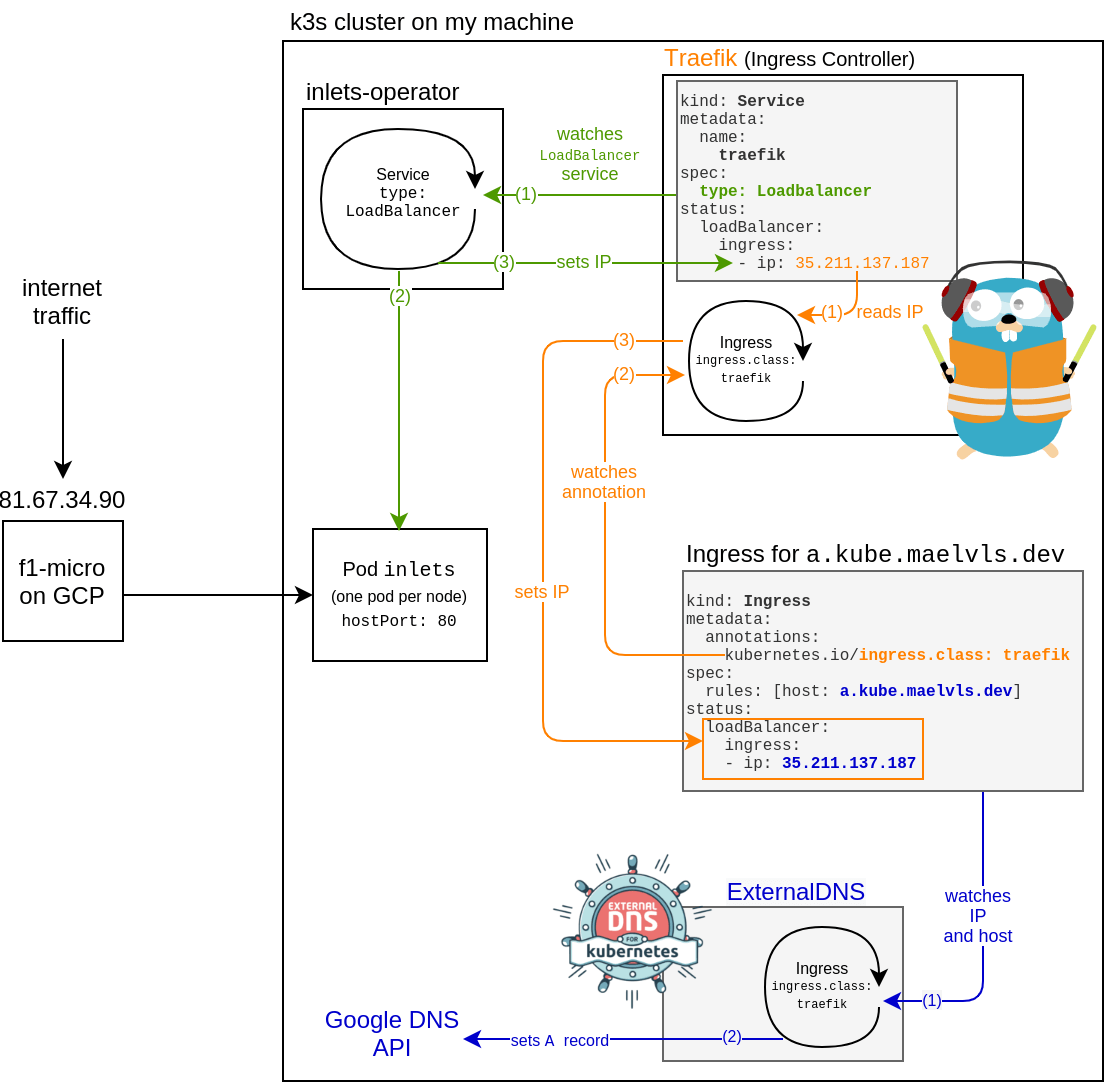

Set up a local k3s cluster with cert-manager and Let's Encrypt, with both DNS01 and HTTP01 working. 🚧 REQUIRES INLETS PRO. 🚧


```bash
#! /bin/bash

set -euo pipefail

CM_VERSION=v1.3.0
CLUSTER=
PROJECT=
DNS_ZONE=
INLETS_TEXT=

help() {
  cat <<EOF
Have a local k3s cluster with cert-manager that can issue Let's Encrypt
certificates using both HTTP01 and DNS01. Also does a health-check with an
HTTP01 certificate created using an Ingress.

The VM created on GCP is an f1-micro and its name is NAME-tunnel.

To work around the Let's Encrypt limit of 5 duplicate certificates per week,
external-dns only allows domains of the form:

  NAME.mael-valais-gcp.jetstacker.net

For example, the sample app is created with "example":

  example.NAME.mael-valais-gcp.jetstacker.net

REQUIRES AN INLETS PRO LICENSE (due to TCP tunnelling).

Optionally, you can install bat to get syntax highlighting on the manifests
that are applied at the end while health-checking: https://github.com/sharkdp/bat

Usage:
  $(basename "$0") up NAME --project PROJECT --clouddns-zone ZONE --license INLETS_TEXT [all-options]
  $(basename "$0") down NAME --project PROJECT

Commands:
  up    Install cert-manager, external-dns, traefik and inlets-operator,
        and serve an HTTPS NGINX example on example.NAME.DOMAIN, where
        DOMAIN is the DNS name of ZONE.
        Requires --project, --license, and --clouddns-zone.
  down  Remove everything. Requires --project.

Flags:
  --project PROJECT      Name of the GCP project you want the inlets f1-micro
                         VM to be created in. The CloudDNS zone must also be
                         in that same project.
  --clouddns-zone ZONE   Name of the Google CloudDNS zone to be used by
                         external-dns. Must be in the same project as --project.
  --license INLETS_TEXT  The license text for inlets PRO. Right now, inlets
                         PRO is required due to the reliance on the TCP tunnel
                         (inlets "community" only does HTTP tunnels).
  --cm-version VERSION   The Helm chart version of cert-manager to install
                         (default: $CM_VERSION). Use "--cm-version bazel" or
                         "--cm-version make" to build cert-manager from source
                         when running inside the cert-manager repository.

General Options:
  --help                 Give some help.

Maël Valais, 2021
EOF
  exit
}

COMMAND=
while [ $# -ne 0 ]; do
  case "$1" in
  --*=*)
    echo "the flag $1 is invalid, please use '${1%=*} ${1#*=}'" >&2
    exit 1
    ;;
  -h | --help)
    help
    exit 0
    ;;
  --project)
    if [ $# -lt 2 ]; then
      echo "$1 requires an argument" >&2
      exit 124
    fi
    PROJECT="$2"
    shift
    ;;
  --license)
    if [ $# -lt 2 ]; then
      echo "$1 requires an argument" >&2
      exit 124
    fi
    INLETS_TEXT="$2"
    shift
    ;;
  --cm-version)
    if [ $# -lt 2 ]; then
      echo "$1 requires an argument" >&2
      exit 124
    fi
    CM_VERSION="$2"
    shift
    ;;
  --clouddns-zone)
    if [ $# -lt 2 ]; then
      echo "$1 requires an argument" >&2
      exit 124
    fi
    DNS_ZONE="$2"
    shift
    ;;
  --cluster)
    if [ $# -lt 2 ]; then
      echo "$1 requires an argument" >&2
      exit 124
    fi
    CLUSTER="$2"
    shift
    ;;
  up | down)
    COMMAND=$1
    ;;
  --*)
    echo "error: unknown flag: $1" >&2
    exit 124
    ;;
  *)
    if [ -n "$CLUSTER" ]; then
      echo "the positional argument NAME was already given ('$CLUSTER'), the positional argument '$1' is unexpected" >&2
      exit 124
    fi
    CLUSTER=$1
    ;;
  esac
  shift
done

if [ -z "$COMMAND" ]; then
  echo "error: a command is required: [up, down], see --help" >&2
  exit 124
fi

if [ -z "$CLUSTER" ]; then
  echo "error: a NAME is required, see --help" >&2
  exit 124
fi

sanitize() {
  cat \
    | sed "s/${INLETS_TEXT:-dummyazerty}/****************************/g" \
    | sed "s/--license [^ ]*/--license ****************************/g" \
    | sed "s/--license=[^ ]*/--license=****************************/g" \
    | sed "s/inletsProLicense=[^ ]*/inletsProLicense=****************************/g" \
    | sed "s/-----BEGIN PRIVATE KEY-----.*-----END PRIVATE KEY-----/****/g"
}

yel="\033[33m"
gray="\033[90m"
end='\033[0m'

# color "$yel"
color() {
  # Let's prevent accidental interference from programs that also print colors.
  # IFS= keeps leading whitespace, and '|| [ -n "$line" ]' keeps a last line
  # that doesn't end with \n.
  sed -r "s/\x1B\[([0-9]{1,3}(;[0-9]{1,2})?)?[mGK]//g" | while IFS= read -r line || [ -n "$line" ]; do
    printf "${1}%s${end}\n" "$line"
  done
}

yaml() {
  if command -v bat >/dev/null; then
    bat -lyml --pager=never
  else
    cat
  fi
}

# https://superuser.com/questions/184307/bash-create-anonymous-fifo
PIPE=$(mktemp -u)
mkfifo "$PIPE"
exec 3<>"$PIPE"
rm "$PIPE"
exec 3>/dev/stderr

# trace CMD AND ARGUMENTS
trace() {
  printf "${yel}%s${end} " "$1" | sanitize >&3
  LANG=C perl -e 'print join(" ", map { $_ =~ / / ? "\"".$_."\"" : $_} @ARGV)' -- "${@:2}" $'\n' | sanitize >&3

  # (1) First, if stdin is piped (not a terminal), display stdin.
  # (2) Then, run the command and print stdout/stderr.
  if ! [ -t 0 ]; then
    tee >(sanitize | yaml >&3) | command "$@" 2> >(sanitize | color "$gray" >&3) > >(tee >(sanitize | color "$gray" >&3))
    # <-------------(1)-------> <------------------------------------------(2)-------------------------------------------------------->
  else
    command "$@" 2> >(sanitize | color "$gray" >&3) > >(tee >(sanitize | color "$gray" >&3))
    # <-------------------------------------------(2)---------------------------------------------------->
  fi
}

SA_EMAIL="give-me-my-cluster@$PROJECT.iam.gserviceaccount.com"

case "$COMMAND" in
up)
  if [ -z "$PROJECT" ]; then
    echo "no project was given, please give it using --project GCP_PROJECT_ID" >&2
    exit 124
  fi
  if [ -z "$DNS_ZONE" ]; then
    echo "no CloudDNS zone was given, please give one using --clouddns-zone ZONE" >&2
    echo "You can list your managed zones using 'gcloud dns managed-zones list'" >&2
    exit 124
  fi
  if [ -z "$INLETS_TEXT" ]; then
    echo "no Inlets PRO license (\$300/year... yeah) was given, please give one using --license LICENSE_TEXT" >&2
    exit 124
  fi

  trace gcloud iam service-accounts describe "$SA_EMAIL" --project "$PROJECT" >/dev/null \
    || trace gcloud iam service-accounts create give-me-my-cluster --display-name "For the give-me-my-cluster CLI" --project "$PROJECT"

  # Only add the missing roles. "comm -23 EXPECTED EXISTING" prints the lines
  # from EXPECTED that are not in EXISTING. The "true |" gives trace an empty
  # stdin: when stdin is not a terminal, trace reads it (to display piped
  # manifests) and forwards it to the command, which would otherwise swallow
  # the remaining roles meant for read -r.
  comm -23 \
    <(echo roles/compute.admin roles/dns.admin roles/iam.serviceAccountUser | tr ' ' $'\n' | sort) \
    <(gcloud projects get-iam-policy "$PROJECT" --format=json | jq -r ".bindings[] | select(.members[] == \"serviceAccount:$SA_EMAIL\") | .role" | sort) \
    | while read -r role; do
      true | trace gcloud projects add-iam-policy-binding "$PROJECT" --role="$role" --member="serviceAccount:$SA_EMAIL" --format=disable
    done

  # k3d v5.0.0 is required due to breaking changes that happened in v5.
  # I had to use v1.21.1-k3s1 due to a bug with the 5.11.0 kernel and how
  # kube-proxy is set up by k3s. See:
  # https://github.com/rancher/k3d/blob/d668093/docs/faq/faq.md#solved-nodes-fail-to-start-or-get-stuck-in-notready-state-with-log-nf_conntrack_max-permission-denied
  #
  # grep -x matches the whole cluster name rather than a substring. It runs
  # without -q because an early exit could make jq fail with SIGPIPE, which
  # "set -o pipefail" would turn into a failed check. The k3s args are quoted
  # so that the shell doesn't try to expand server:* as a glob.
  k3d cluster list -ojson | jq -r '.[].name' | grep -xF "$CLUSTER" >/dev/null \
    || trace k3d cluster create "$CLUSTER" '--k3s-arg=--disable=servicelb@server:*' '--k3s-arg=--disable=traefik@server:*' >/dev/null
  trace kubectl config use-context "k3d-$CLUSTER" >/dev/null

  DOMAIN=$(gcloud dns managed-zones describe "$DNS_ZONE" --format json --project "$PROJECT" | jq -r .dnsName | sed 's/\.$//')
  kubectl -n kube-system apply -f- <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: domain
data:
  domain: ${CLUSTER}.${DOMAIN}
EOF

  # cert-manager, external-dns and inlets-operator all require the service
  # account JSON key, but they are in different namespaces. So I create the
  # Secret two times here and once more further down for the inlets-operator.
  if ! kubectl -n kube-system get secret jsonkey >/dev/null 2>&1; then
    trace kubectl -n kube-system apply -f- >/dev/null <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: jsonkey
stringData:
  jsonkey: |
    $(gcloud iam service-accounts keys create /dev/stdout --iam-account "$SA_EMAIL" | jq -c .)
EOF
  fi
  if ! kubectl get secret jsonkey >/dev/null 2>&1; then
    trace kubectl apply -f- >/dev/null <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: jsonkey
stringData:
  jsonkey: |
    $(kubectl -n kube-system get secret jsonkey -ojsonpath='{.data.jsonkey}' | base64 -d)
EOF
  fi

  trace helm repo add jetstack https://charts.jetstack.io >/dev/null
  trace helm repo add bitnami https://charts.bitnami.com/bitnami >/dev/null
  trace helm repo add traefik https://helm.traefik.io/traefik >/dev/null
  trace helm repo add inlets https://inlets.github.io/inlets-operator/ >/dev/null

  # Note that there are currently two Helm charts:
  # - https://github.com/bitnami/charts/blob/master/bitnami/external-dns,
  # - https://github.com/kubernetes-sigs/external-dns/tree/master/charts/external-dns
  #
  # I use Bitnami's chart because it is much more complete and usable. For
  # example, as of 11 Feb 2022, the kubernetes-sigs chart doesn't have easy
  # support for giving a JSON key for the Google provider.
  trace helm upgrade --install external-dns bitnami/external-dns --namespace kube-system \
    --set provider=google --set google.project="$PROJECT" --set google.serviceAccountSecret=jsonkey --set google.serviceAccountSecretKey=jsonkey \
    --set sources='{ingress,service,crd}' --set domainFilters="{$DOMAIN}" \
    --set crd.create=true \
    --set extraArgs.crd-source-apiversion=externaldns.k8s.io/v1alpha1 \
    --set extraArgs.crd-source-kind=DNSEndpoint >/dev/null

  # Installing CRDs doesn't work with Helm 3 since 5 July 2021, which is why I
  # first pinned the Helm chart to 9.20.1 (Traefik 2.4.8). See:
  # https://github.com/traefik/traefik-helm-chart/issues/441.
  # The chart is now pinned to 10.14.1 (Traefik 2.6.0), which supports
  # Kubernetes 1.22 and above.
  trace helm upgrade --install traefik traefik/traefik --namespace kube-system \
    --set additionalArguments="{--providers.kubernetesingress,--providers.kubernetesingress.ingressendpoint.publishedservice=kube-system/$CLUSTER,--experimental.kubernetesgateway=true,--providers.kubernetesgateway=true}" \
    --set ssl.enforced=true --set dashboard.ingressRoute=true \
    --version v10.14.1 --set service.enabled=false >/dev/null

  # The Service is named after the cluster: kube-system/$CLUSTER is the
  # published service given to Traefik above, and the tunnel VM is named
  # after it (NAME-tunnel, which "down" deletes).
  trace kubectl apply -n kube-system -f- >/dev/null <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/instance: traefik
    app.kubernetes.io/name: traefik
  name: $CLUSTER
spec:
  type: LoadBalancer
  ports:
    - name: web
      port: 80
      targetPort: web
    - name: websecure
      port: 443
      targetPort: websecure
  selector:
    app.kubernetes.io/instance: traefik
    app.kubernetes.io/name: traefik
EOF

  trace helm upgrade --install inlets-operator inlets/inlets-operator --namespace kube-system \
    --set projectID="$PROJECT" --set zone=europe-west2-b --set inletsProLicense="$INLETS_TEXT" \
    --set provider=gce --set accessKeyFile=/var/secrets/inlets/inlets-access-key >/dev/null
  trace kubectl apply -f https://raw.githubusercontent.com/inlets/inlets-operator/master/artifacts/crds/inlets.inlets.dev_tunnels.yaml >/dev/null
  trace kubectl apply -n kube-system -f- >/dev/null <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: inlets-access-key
stringData:
  inlets-access-key: |
    $(kubectl -n kube-system get secret jsonkey -ojsonpath='{.data.jsonkey}' | base64 -d)
EOF

  # $CHART is left unquoted on purpose below: it holds the chart plus extra flags.
  CHART="jetstack/cert-manager --version ${CM_VERSION/v/}"
  if [ "$CM_VERSION" = bazel ]; then
    APP_VERSION=$(git describe --always --tags)
    export APP_VERSION
    trace bazel run --ui_event_filters=-info --noshow_progress --stamp=true --platforms=@io_bazel_rules_go//go/toolchain:linux_amd64 "//devel/addon/certmanager:bundle" >/dev/null
    trace k3d image import --cluster "$CLUSTER" \
      "quay.io/jetstack/cert-manager-controller:$APP_VERSION" \
      "quay.io/jetstack/cert-manager-acmesolver:$APP_VERSION" \
      "quay.io/jetstack/cert-manager-cainjector:$APP_VERSION" \
      "quay.io/jetstack/cert-manager-ctl:$APP_VERSION" \
      "quay.io/jetstack/cert-manager-webhook:$APP_VERSION" >/dev/null
    trace bazel build --ui_event_filters=-info --noshow_progress //deploy/charts/cert-manager
    CHART="bazel-bin/deploy/charts/cert-manager/cert-manager.tgz --set image.tag=$APP_VERSION --set cainjector.image.tag=$APP_VERSION --set webhook.image.tag=$APP_VERSION"
    trace helm upgrade --install cert-manager $CHART --namespace cert-manager --set installCRDs=true --create-namespace >/dev/null
  elif [ "$CM_VERSION" = make ]; then
    APP_VERSION=$(git describe --always --tags)
    export APP_VERSION
    trace make -j32 -f make/Makefile "bin/cert-manager-$APP_VERSION.tgz" bin/containers/cert-manager-{controller,cainjector,webhook,ctl}-linux-amd64.tar.gz >/dev/null
    echo bin/containers/cert-manager-{controller,cainjector,webhook,ctl}-linux-amd64.tar.gz \
      | tr ' ' $'\n' \
      | trace xargs -P"$(nproc)" -I@ bash -c "k3d image import --cluster $CLUSTER <(gzip -dc @)"
    CHART="bin/cert-manager-$APP_VERSION.tgz \
      --set image.repository=docker.io/library/cert-manager-controller-amd64 \
      --set cainjector.image.repository=docker.io/library/cert-manager-cainjector-amd64 \
      --set webhook.image.repository=docker.io/library/cert-manager-webhook-amd64 \
      --set startupapicheck.image.repository=docker.io/library/cert-manager-ctl-amd64 \
      --set image.tag=$APP_VERSION \
      --set cainjector.image.tag=$APP_VERSION \
      --set webhook.image.tag=$APP_VERSION \
      --set startupapicheck.image.tag=$APP_VERSION \
      --set image.pullPolicy=Never \
      --set cainjector.image.pullPolicy=Never \
      --set webhook.image.pullPolicy=Never \
      --set startupapicheck.image.pullPolicy=Never"
    trace helm upgrade --install cert-manager $CHART --namespace cert-manager --set installCRDs=true --create-namespace >/dev/null
    make -f make/Makefile bin/yaml/cert-manager.crds.yaml
    kubectl apply -f bin/yaml/cert-manager.crds.yaml
    kubectl patch deploy cert-manager -n cert-manager --patch 'spec: {template: {spec: {securityContext: {runAsNonRoot: false}}}}'
    kubectl patch deploy cert-manager-webhook -n cert-manager --patch 'spec: {template: {spec: {securityContext: {runAsNonRoot: false}}}}'
    kubectl patch deploy cert-manager-cainjector -n cert-manager --patch 'spec: {template: {spec: {securityContext: {runAsNonRoot: false}}}}'
  else
    trace helm upgrade --install cert-manager $CHART --namespace cert-manager --set installCRDs=true --create-namespace >/dev/null
  fi
  trace kubectl wait --for=condition=available deploy -l app.kubernetes.io/instance=cert-manager --namespace cert-manager --timeout=5m >/dev/null

  trace kubectl apply -f- >/dev/null <<EOF
#apiVersion: cert-manager.io/v1alpha2
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: letsencrypt
spec:
  acme:
    email: [email protected]
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt
    solvers:
      - http01:
          ingress:
            class: traefik
      - dns01:
          cloudDNS:
            project: $PROJECT
            serviceAccountSecretRef:
              name: jsonkey
              key: jsonkey
EOF

  trace kubectl run example --image=nginx:alpine --port=80 --dry-run=client -oyaml | trace kubectl apply -f- >/dev/null
  trace kubectl expose pod example --port=80 --dry-run=client -oyaml | trace kubectl apply -f- >/dev/null
  trace kubectl apply -f- >/dev/null <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example
  annotations:
    kubernetes.io/ingress.class: traefik
    cert-manager.io/issuer: letsencrypt
    certmanager.k8s.io/cluster-issuer: letsencrypt
    traefik.ingress.kubernetes.io/redirect-entry-point: websecure
    traefik.ingress.kubernetes.io/router.tls: "true"
spec:
  rules:
    - host: example.$CLUSTER.$DOMAIN
      http:
        paths:
          - path: /
            pathType: ImplementationSpecific
            backend:
              service:
                name: example
                port:
                  number: 80
  tls:
    - secretName: example
      hosts:
        - example.$CLUSTER.$DOMAIN
EOF

  while ! test -n "$(trace kubectl get svc "$CLUSTER" -n kube-system -ojsonpath='{.status.loadBalancer.ingress[*].ip}')"; do
    sleep 10
  done

  # Ask one of the zone's own authoritative name servers directly.
  NAME_SERVER=$(gcloud dns managed-zones describe "$DNS_ZONE" --format json --project "$PROJECT" | jq -r '.nameServers[0]')
  while ! trace nslookup "example.$CLUSTER.$DOMAIN" "$NAME_SERVER" >/dev/null; do
    sleep 10
  done

  trace kubectl get svc "$CLUSTER" -n kube-system >/dev/null
  while ! trace curl -isS -o /dev/null --show-error --fail --max-time 10 "https://example.$CLUSTER.$DOMAIN"; do
    sleep 10
  done

  cat <<EOF
To get the domain dynamically:

  kubectl -n kube-system get cm domain -ojsonpath='{.data.domain}'
EOF
  ;;
down)
  if [ -z "$PROJECT" ]; then
    echo "no project was given, please give it using --project GCP_PROJECT_ID" >&2
    exit 124
  fi

  trace gcloud iam service-accounts delete "$SA_EMAIL" --project "$PROJECT" --quiet || true
  trace gcloud compute instances delete "$CLUSTER-tunnel" --project "$PROJECT" --zone europe-west2-b --quiet || true
  trace k3d cluster delete "$CLUSTER" >/dev/null
  ;;
esac
```
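The `up` command only grants the IAM roles the service account is missing, by diffing two sorted lists with `comm -23`. Here is a minimal standalone sketch of that technique; the `missing_lines` helper is hypothetical and takes both lists as space-separated strings. Both sides are sorted with `LC_ALL=C`, and `comm` runs with it too, because `comm` expects its inputs sorted with the same collation it uses.

```bash
#!/usr/bin/env bash

# Hypothetical helper: print the words of the first list that are missing
# from the second list, one per line.
missing_lines() {
  local expected=$1 existing=$2
  # comm -2 hides lines only in EXISTING, -3 hides lines in both, so only
  # the lines unique to EXPECTED remain.
  LC_ALL=C comm -23 \
    <(tr ' ' '\n' <<<"$expected" | LC_ALL=C sort) \
    <(tr ' ' '\n' <<<"$existing" | LC_ALL=C sort)
}

# Example: roles the service account should have vs. roles it already has.
missing_lines \
  "roles/compute.admin roles/dns.admin roles/iam.serviceAccountUser" \
  "roles/dns.admin"
# prints:
# roles/compute.admin
# roles/iam.serviceAccountUser
```

In the script itself, EXISTING comes from `gcloud projects get-iam-policy` filtered with `jq`, so running `up` twice doesn't add the same binding again.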

Today I discovered that:

- traefik 2.4.8 ~~does not support `networking.k8s.io/v1`~~. I was wrong: it listens to `v1beta1` (same as cert-manager 1.3) and relies on the API server's built-in conversion between Ingress versions to stay compatible with `v1`.
- traefik expects the annotation value `"true"` to be a string, not a boolean.

FAQ

ERROR: (gcloud.iam.service-accounts.keys.create) FAILED_PRECONDITION: Precondition check failed.

This means that the service account has too many keys associated with it: GCP allows at most 10 keys per service account, and `up` creates a new one for every fresh cluster. To work around it, delete the service account by running the `down` command.
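If you would rather keep the service account, deleting its old user-managed keys also brings the count back under the limit. A sketch, assuming a gcloud version where `keys list --managed-by=user` and the `name.basename()` format projection are available; `prune_sa_keys` is a hypothetical helper. It deletes every user-managed key, so any cluster still using one of them loses access to GCP.

```bash
# Hypothetical helper: delete every user-managed key of a service account.
# --managed-by=user leaves the keys managed by Google alone.
# WARNING: clusters still using one of these keys lose access to GCP.
prune_sa_keys() {
  local sa_email=$1 project=$2 key
  while read -r key; do
    [ -n "$key" ] || continue
    # </dev/null keeps gcloud from reading the key list meant for read -r.
    gcloud iam service-accounts keys delete "$key" \
      --iam-account="$sa_email" --project="$project" --quiet </dev/null
  done < <(gcloud iam service-accounts keys list \
    --iam-account="$sa_email" --project="$project" \
    --managed-by=user --format='value(name.basename())')
}

# Usage (same service account as the script):
#   prune_sa_keys "give-me-my-cluster@PROJECT.iam.gserviceaccount.com" PROJECT
```

The loop reads from a process substitution rather than a pipe so that it runs in the current shell, which makes it easy to stop or extend, for example to keep the newest key.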


cc @irbekrm