Guide to becoming a pro at machine learning

Cheat sheet and in-depth explanations located below main article contents… The UNIX shell program interprets user… (bryanguner.medium.com)

**These Are The Bash Shell Commands That Stand Between Me And Insanity**

I will not profess to be a bash shell wizard… but I have managed to scour some pretty helpful little scripts from Stack… (levelup.gitconnected.com)

**Bash Commands That Save Me Time and Frustration**

Here’s a list of bash commands that stand between me and insanity. (medium.com)

**Life Saving Bash Scripts Part 2**

I am not saying they’re in any way special compared with other bash scripts… but when I consider that you can never… (medium.com)

**What Are Bash Aliases And Why Should You Be Using Them!**

A Bash alias is a method of supplementing or overriding Bash commands with new ones. Bash aliases make it easy for… (bryanguner.medium.com)

**BASH CHEAT SHEET**

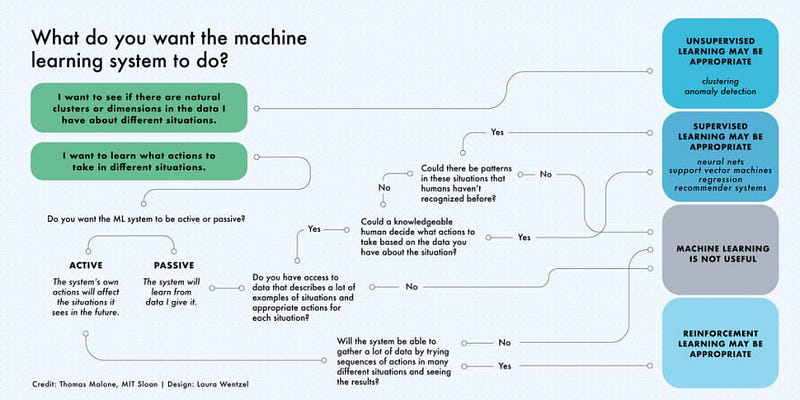

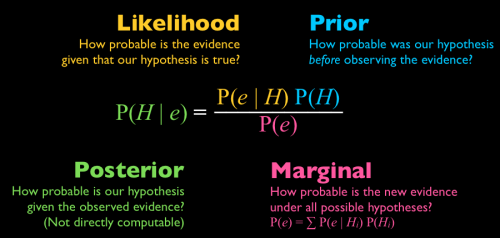

My Bash Cheatsheet Index (bryanguner.medium.com)

------------------------------------------------------------------------

> holy grail of learning bash

- **\[📰\]** Streamline your projects using Makefile
- **\[📰\]** Understand Linux Load Averages and Monitor Performance of Linux
- **\[📰\]** Command-line Tools can be 235x Faster than your Hadoop Cluster
- **\[ \]** Calmcode: makefiles
- **\[ \]** Calmcode: entr
- **\[ \]** Codecademy: Learn the Command Line
- **\[💻\]** Introduction to Shell for Data Science
- **\[💻\]** Introduction to Bash Scripting
- **\[💻\]** Data Processing in Shell
- **\[ \]** MIT: The Missing Semester of CS Education
    - **\[ \]** Lecture 1: Course Overview + The Shell (2020) **0:48:16**
    - **\[ \]** Lecture 2: Shell Tools and Scripting (2020) **0:48:55**
    - **\[ \]** Lecture 3: Editors (vim) (2020) **0:48:26**
    - **\[ \]** Lecture 4: Data Wrangling (2020) **0:50:03**
    - **\[ \]** Lecture 5: Command-line Environment (2020) **0:56:06**
    - **\[ \]** Lecture 6: Version Control (git) (2020) **1:24:59**
    - **\[ \]** Lecture 7: Debugging and Profiling (2020) **0:54:13**
    - **\[ \]** Lecture 8: Metaprogramming (2020) **0:49:52**
    - **\[ \]** Lecture 9: Security and Cryptography (2020) **1:00:59**
    - **\[ \]** Lecture 10: Potpourri (2020) **0:57:54**
    - **\[ \]** Lecture 11: Q&A (2020) **0:53:52**
- **\[ \]** Thoughtbot: Mastering the Shell
- **\[ \]** Thoughtbot: tmux
- **\[🅤\]ꭏ** Linux Command Line Basics
- **\[🅤\]ꭏ** Shell Workshop
- **\[🅤\]ꭏ** Configuring Linux Web Servers
- **\[ \]** Wes Bos: Command Line Power User
- **\[📺 \]** GNU Parallel

### Be able to perform feature selection and engineering

- **\[📰\]** Tips for Advanced Feature Engineering
- **\[📰\]** Preparing data for a machine learning model
- **\[📰\]** Feature selection for a machine learning model
- **\[📰\]** Learning from imbalanced data
- **\[📰\]** Hacker’s Guide to Data Preparation for Machine Learning
- **\[📰\]** Practical Guide to Handling Imbalanced Datasets
- **\[💻\]** Analyzing Social Media Data in Python
- **\[💻\]** Dimensionality Reduction in Python
- **\[💻\]** Preprocessing for Machine Learning in Python
- **\[💻\]** Data Types for Data Science
- **\[💻\]** Cleaning Data in Python
- **\[💻\]** Feature Engineering for Machine Learning in Python
- **\[💻\]** Importing & Managing Financial Data in Python
- **\[💻\]** Manipulating Time Series Data in Python
- **\[💻\]** Working with Geospatial Data in Python
- **\[💻\]** Analyzing IoT Data in Python
- **\[💻\]** Dealing with Missing Data in Python
- **\[💻\]** Exploratory Data Analysis in Python
- **\[ \]** edX: Data Science Essentials
- **\[🅤\]ꭏ** Creating an Analytical Dataset
- **\[📺 \]** Applied Machine Learning 2020–04 — Preprocessing **1:07:40**
- **\[📺 \]** Applied Machine Learning 2020–11 — Model Inspection and Feature Selection **1:15:15**

### Be able to experiment in a notebook

- **\[📰\]** Securely storing configuration credentials in a Jupyter Notebook
- **\[📰\]** Automatically Reload Modules with %autoreload
- **\[ \]** Calmcode: ipywidgets
- **\[ \]** Documentation: Jupyter Lab
- **\[ \]** Pluralsight: Getting Started with Jupyter Notebook and Python
- **\[📺 \]** William Horton — A Brief History of Jupyter Notebooks
- **\[📺 \]** I Like Notebooks
- **\[📺 \]** I don’t like notebooks.- Joel Grus (Allen Institute for Artificial Intelligence)
- **\[📺 \]** Ryan Herr — After model.fit, before you deploy | JupyterCon 2020
- **\[📺 \]** nbdev live coding with Hamel Husain
- **\[📺 \]** How to Use JupyterLab

### Be able to visualize data

- **\[📰\]** Creating a Catchier Word Cloud Presentation
- **\[📰\]** Effectively Using Matplotlib
- **\[💻\]** Introduction to Data Visualization with Python
- **\[💻\]** Introduction to Seaborn
- **\[💻\]** Introduction to Matplotlib
- **\[💻\]** Intermediate Data Visualization with Seaborn
- **\[💻\]** Visualizing Time Series Data in Python
- **\[💻\]** Improving Your Data Visualizations in Python
- **\[💻\]** Visualizing Geospatial Data in Python
- **\[💻\]** Interactive Data Visualization with Bokeh
- **\[📺 \]** Applied Machine Learning 2020–02 Visualization and matplotlib **1:07:30**

### Be able to model problems mathematically

- **\[ \]** 3Blue1Brown: Essence of Calculus
    - **\[ \]** The Essence of Calculus, Chapter 1 **0:17:04**
    - **\[ \]** The paradox of the derivative | Essence of calculus, chapter 2 **0:17:57**
    - **\[ \]** Derivative formulas through geometry | Essence of calculus, chapter 3 **0:18:43**
    - **\[ \]** Visualizing the chain rule and product rule | Essence of calculus, chapter 4 **0:16:52**
    - **\[ \]** What’s so special about Euler’s number e? | Essence of calculus, chapter 5 **0:13:50**
    - **\[ \]** Implicit differentiation, what’s going on here? | Essence of calculus, chapter 6 **0:15:33**
    - **\[ \]** Limits, L’Hôpital’s rule, and epsilon delta definitions | Essence of calculus, chapter 7 **0:18:26**
    - **\[ \]** Integration and the fundamental theorem of calculus | Essence of calculus, chapter 8 **0:20:46**
    - **\[ \]** What does area have to do with slope? | Essence of calculus, chapter 9 **0:12:39**
    - **\[ \]** Higher order derivatives | Essence of calculus, chapter 10 **0:05:38**
    - **\[ \]** Taylor series | Essence of calculus, chapter 11 **0:22:19**
    - **\[ \]** What they won’t teach you in calculus **0:16:22**
- **\[ \]** 3Blue1Brown: Essence of linear algebra
    - **\[ \]** Vectors, what even are they? | Essence of linear algebra, chapter 1 **0:09:52**
    - **\[ \]** Linear combinations, span, and basis vectors | Essence of linear algebra, chapter 2 **0:09:59**
    - **\[ \]** Linear transformations and matrices | Essence of linear algebra, chapter 3 **0:10:58**
    - **\[ \]** Matrix multiplication as composition | Essence of linear algebra, chapter 4 **0:10:03**
    - **\[ \]** Three-dimensional linear transformations | Essence of linear algebra, chapter 5 **0:04:46**
    - **\[ \]** The determinant | Essence of linear algebra, chapter 6 **0:10:03**
    - **\[ \]** Inverse matrices, column space and null space | Essence of linear algebra, chapter 7 **0:12:08**
    - **\[ \]** Nonsquare matrices as transformations between dimensions | Essence of linear algebra, chapter 8 **0:04:27**
    - **\[ \]** Dot products and duality | Essence of linear algebra, chapter 9 **0:14:11**
    - **\[ \]** Cross products | Essence of linear algebra, Chapter 10 **0:08:53**
    - **\[ \]** Cross products in the light of linear transformations | Essence of linear algebra chapter 11 **0:13:10**
    - **\[ \]** Cramer’s rule, explained geometrically | Essence of linear algebra, chapter 12 **0:12:12**
    - **\[ \]** Change of basis | Essence of linear algebra, chapter 13 **0:12:50**
    - **\[ \]** Eigenvectors and eigenvalues | Essence of linear algebra, chapter 14 **0:17:15**
    - **\[ \]** Abstract vector spaces | Essence of linear algebra, chapter 15 **0:16:46**
- **\[ \]** 3Blue1Brown: Neural networks
    - **\[ \]** But what is a Neural Network? | Deep learning, chapter 1 **0:19:13**
    - **\[ \]** Gradient descent, how neural networks learn | Deep learning, chapter 2 **0:21:01**
    - **\[ \]** What is backpropagation really doing? | Deep learning, chapter 3 **0:13:54**
    - **\[ \]** Backpropagation calculus | Deep learning, chapter 4 **0:10:17**
- **\[📰\]** A Visual Tour of Backpropagation
- **\[📰\]** Entropy, Cross Entropy, and KL Divergence
- **\[📰\]** Interview Guide to Probability Distributions
- **\[📰\]** Introduction to Linear Algebra for Applied Machine Learning with Python
- **\[📰\]** Entropy of a probability distribution — in layman’s terms
- **\[📰\]** KL Divergence — in layman’s terms
- **\[📰\]** Probability Distributions
- **\[📰\]** Relearning Matrices as Linear Functions
- **\[📰\]** You Could Have Come Up With Eigenvectors — Here’s How
- **\[📰\]** PageRank — How Eigenvectors Power the Algorithm Behind Google Search
- **\[📰\]** Interactive Visualization of Why Eigenvectors Matter
- **\[📰\]** Cross-Entropy and KL Divergence
- **\[📰\]** Why Randomness Is Information?
- **\[📰\]** Basic Probability Theory
- **\[📰\]** Math You Need to Succeed In Machine Learning Interviews
- **\[📖\]** Basics of Linear Algebra for Machine Learning
- **\[💻\]** Introduction to Statistics in Python
- **\[💻\]** Foundations of Probability in Python
- **\[💻\]** Statistical Thinking in Python (Part 1)
- **\[💻\]** Statistical Thinking in Python (Part 2)
- **\[💻\]** Statistical Simulation in Python
- **\[ \]** edX: Essential Statistics for Data Analysis using Excel
- **\[ \]** Computational Linear Algebra for Coders
- **\[ \]** Khan Academy: Precalculus
- **\[ \]** Khan Academy: Probability
- **\[ \]** Khan Academy: Differential Calculus
- **\[ \]** Khan Academy: Multivariable Calculus
- **\[ \]** Khan Academy: Linear Algebra
- **\[ \]** MIT: 18.06 Linear Algebra (Professor Strang)
    - **\[ \]** 1. The Geometry of Linear Equations **0:39:49**
    - **\[ \]** 2. Elimination with Matrices. **0:47:41**
    - **\[ \]** 3. Multiplication and Inverse Matrices **0:46:48**
    - **\[ \]** 4. Factorization into A = LU **0:48:05**
    - **\[ \]** 5. Transposes, Permutations, Spaces R^n **0:47:41**
    - **\[ \]** 6. Column Space and Nullspace **0:46:01**
    - **\[ \]** 9. Independence, Basis, and Dimension **0:50:14**
    - **\[ \]** 10. The Four Fundamental Subspaces **0:49:20**
    - **\[ \]** 11. Matrix Spaces; Rank 1; Small World Graphs **0:45:55**
    - **\[ \]** 14. Orthogonal Vectors and Subspaces **0:49:47**
    - **\[ \]** 15. Projections onto Subspaces **0:48:51**
    - **\[ \]** 16. Projection Matrices and Least Squares **0:48:05**
    - **\[ \]** 17. Orthogonal Matrices and Gram-Schmidt **0:49:09**
    - **\[ \]** 21. Eigenvalues and Eigenvectors **0:51:22**
    - **\[ \]** 22. Diagonalization and Powers of A **0:51:50**
    - **\[ \]** 24. Markov Matrices; Fourier Series **0:51:11**
    - **\[ \]** 25. Symmetric Matrices and Positive Definiteness **0:43:52**
    - **\[ \]** 27. Positive Definite Matrices and Minima **0:50:40**
    - **\[ \]** 29. Singular Value Decomposition **0:40:28**
    - **\[ \]** 30. Linear Transformations and Their Matrices **0:49:27**
    - **\[ \]** 31. Change of Basis; Image Compression **0:50:13**
    - **\[ \]** 33. Left and Right Inverses; Pseudoinverse **0:41:52**
- **\[ \]** StatQuest: Statistics Fundamentals
    - **\[ \]** StatQuest: Histograms, Clearly Explained **0:03:42**
    - **\[ \]** StatQuest: What is a statistical distribution? **0:05:14**
    - **\[ \]** StatQuest: The Normal Distribution, Clearly Explained!!! **0:05:12**
    - **\[ \]** Statistics Fundamentals: Population Parameters **0:14:31**
    - **\[ \]** Statistics Fundamentals: The Mean, Variance and Standard Deviation **0:14:22**
    - **\[ \]** StatQuest: What is a statistical model? **0:03:45**
    - **\[ \]** StatQuest: Sampling A Distribution **0:03:48**
    - **\[ \]** Hypothesis Testing and The Null Hypothesis **0:14:40**
    - **\[ \]** Alternative Hypotheses: Main Ideas!!! **0:09:49**
    - **\[ \]** p-values: What they are and how to interpret them **0:11:22**
    - **\[ \]** How to calculate p-values **0:25:15**
    - **\[ \]** p-hacking: What it is and how to avoid it! **0:13:44**
    - **\[ \]** Statistical Power, Clearly Explained!!! **0:08:19**
    - **\[ \]** Power Analysis, Clearly Explained!!! **0:16:44**
    - **\[ \]** Covariance and Correlation Part 1: Covariance **0:22:23**
    - **\[ \]** Covariance and Correlation Part 2: Pearson’s Correlation **0:19:13**
    - **\[ \]** StatQuest: R-squared explained **0:11:01**
    - **\[ \]** The Central Limit Theorem **0:07:35**
    - **\[ \]** StatQuickie: Standard Deviation vs Standard Error **0:02:52**
    - **\[ \]** StatQuest: The standard error **0:11:43**
    - **\[ \]** StatQuest: Technical and Biological Replicates **0:05:27**
    - **\[ \]** StatQuest — Sample Size and Effective Sample Size, Clearly Explained **0:06:32**
    - **\[ \]** Bar Charts Are Better than Pie Charts **0:01:45**
    - **\[ \]** StatQuest: Boxplots, Clearly Explained **0:02:33**
    - **\[ \]** StatQuest: Logs (logarithms), clearly explained **0:15:37**
    - **\[ \]** StatQuest: Confidence Intervals **0:06:41**
    - **\[ \]** StatQuickie: Thresholds for Significance **0:06:40**
    - **\[ \]** StatQuickie: Which t test to use **0:05:10**
    - **\[ \]** StatQuest: One or Two Tailed P-Values **0:07:05**
    - **\[ \]** The Binomial Distribution and Test, Clearly Explained!!! **0:15:46**
    - **\[ \]** StatQuest: Quantiles and Percentiles, Clearly Explained!!! **0:06:30**
    - **\[ \]** StatQuest: Quantile-Quantile Plots (QQ plots), Clearly Explained **0:06:55**
    - **\[ \]** StatQuest: Quantile Normalization **0:04:51**
    - **\[ \]** StatQuest: Probability vs Likelihood **0:05:01**
    - **\[ \]** StatQuest: Maximum Likelihood, clearly explained!!! **0:06:12**
    - **\[ \]** Maximum Likelihood for the Exponential Distribution, Clearly Explained! V2.0 **0:09:39**
    - **\[ \]** Why Dividing By N Underestimates the Variance **0:17:14**
    - **\[ \]** Maximum Likelihood for the Binomial Distribution, Clearly Explained!!! **0:11:24**
    - **\[ \]** Maximum Likelihood For the Normal Distribution, step-by-step! **0:19:50**
    - **\[ \]** StatQuest: Odds and Log(Odds), Clearly Explained!!! **0:11:30**
    - **\[ \]** StatQuest: Odds Ratios and Log(Odds Ratios), Clearly Explained!!! **0:16:20**
    - **\[ \]** Live 2020–04–20!!! Expected Values **0:33:00**
- **\[🅤\]ꭏ** Eigenvectors and Eigenvalues
- **\[🅤\]ꭏ** Linear Algebra Refresher
- **\[🅤\]ꭏ** Statistics
- **\[🅤\]ꭏ** Intro to Descriptive Statistics
- **\[🅤\]ꭏ** Intro to Inferential Statistics

### Be able to set up project structure

- **\[📰\]** pydantic
- **\[📰\]** Organizing machine learning projects: project management guidelines
- **\[📰\]** Logging and Debugging in Machine Learning — How to use Python debugger and the logging module to find errors in your AI application
- **\[📰\]** Best practices to write Deep Learning code: Project structure, OOP, Type checking and documentation
- **\[📰\]** Configuring Google Colab Like A Pro
- **\[📰\]** Stop using print, start using loguru in Python
- **\[📰\]** Hypermodern Python
- **\[📰\]** Hypermodern Python Chapter 2: Testing
- **\[📰\]** Hypermodern Python Chapter 3: Linting
- **\[📰\]** Hypermodern Python Chapter 4: Typing
- **\[📰\]** Hypermodern Python Chapter 5: Documentation
- **\[📰\]** Hypermodern Python Chapter 6: CI/CD
- **\[📰\]** Push and pull: when and why to update your dependencies
- **\[📰\]** Reproducible and upgradable Conda environments: dependency management with conda-lock
- **\[📰\]** Options for packaging your Python code: Wheels, Conda, Docker, and more
- **\[📰\]** Making model training scripts robust to spot interruptions
- **\[ \]** Calmcode: logging
- **\[ \]** Calmcode: tqdm
- **\[ \]** Calmcode: virtualenv
- **\[ \]** Coursera: Structuring Machine Learning Projects
- **\[ \]** Doc: Python Lifecycle Training
- **\[💻\]** Introduction to Data Engineering
- **\[💻\]** Conda Essentials
- **\[💻\]** Conda for Building & Distributing Packages
- **\[💻\]** Software Engineering for Data Scientists in Python
- **\[💻\]** Designing Machine Learning Workflows in Python
- **\[💻\]** Object-Oriented Programming in Python
- **\[💻\]** Command Line Automation in Python
- **\[💻\]** Creating Robust Python Workflows
- **\[ \]** Developing Python Packages
- **\[ \]** Treehouse: Object Oriented Python
- **\[ \]** Treehouse: Setup Local Python Environment
- **\[🅤\]ꭏ** Writing READMEs
- **\[📺 \]** Lecture 1: Introduction to Deep Learning
- **\[📺 \]** Lecture 2: Setting Up Machine Learning Projects
- **\[📺 \]** Lecture 3: Introduction to the Text Recognizer Project
- **\[📺 \]** Lecture 4: Infrastructure and Tooling
- **\[📺 \]** Hydra configuration
- **\[📺 \]** Continuous integration
- **\[📺 \]** Data Engineering + Machine Learning + Software Engineering // Satish Chandra Gupta // MLOps Coffee Sessions #16
- **\[📺 \]** OO Design and Testing Patterns for Machine Learning with Chris Gerpheide
- **\[📺 \]** Tutorial: Sebastian Witowski — Modern Python Developer’s Toolkit
- **\[📺 \]** Lecture 13: Machine Learning Teams (Full Stack Deep Learning — Spring 2021) **0:58:13**
- **\[📺 \]** Lecture 5: Machine Learning Projects (Full Stack Deep Learning — Spring 2021) **1:13:14**
- **\[📺 \]** Lecture 6: Infrastructure & Tooling (Full Stack Deep Learning — Spring 2021) **1:07:21**

### Be able to version control code

- **\[📰\]** Mastering Git Stash Workflow
- **\[📰\]** How to Become a Master of Git Tags
- **\[📰\]** How to track large files in Github / Bitbucket? Git LFS to the rescue
- **\[📰\]** Keep your git directory clean with git clean and git trash
- **\[ \]** Codecademy: Learn Git
- **\[ \]** Code School: Git Real
- **\[💻\]** Introduction to Git for Data Science
- **\[ \]** Thoughtbot: Mastering Git
- **\[🅤\]ꭏ** GitHub & Collaboration
- **\[🅤\]ꭏ** How to Use Git and GitHub
- **\[🅤\]ꭏ** Version Control with Git
- **\[📺 \]** 045 Introduction to Git LFS
- **\[📺 \]** Git & Scripting

### Be able to set up model validation

- **\[📰\]** Evaluating a machine learning model
- **\[📰\]** Validating your Machine Learning Model
- **\[📰\]** Measuring Performance: AUPRC and Average Precision
- **\[📰\]** Measuring Performance: AUC (AUROC)
- **\[📰\]** Measuring Performance: The Confusion Matrix
- **\[📰\]** Measuring Performance: Accuracy
- **\[📰\]** ROC Curves: Intuition Through Visualization
- **\[📰\]** Precision, Recall, Accuracy, and F1 Score for Multi-Label Classification
- **\[📰\]** The Complete Guide to AUC and Average Precision: Simulations and Visualizations
- **\[📰\]** Best Use of Train/Val/Test Splits, with Tips for Medical Data
- **\[📰\]** The correct way to evaluate online machine learning models
- **\[📰\]** Proxy Metrics
- **\[📺 \]** Accuracy as a Failure
- **\[📺 \]** Applied Machine Learning 2020–09 — Model Evaluation and Metrics **1:18:23**
- **\[📺 \]** Machine Learning Fundamentals: Cross Validation **0:06:04**
- **\[📺 \]** Machine Learning Fundamentals: The Confusion Matrix **0:07:12**
- **\[📺 \]** Machine Learning Fundamentals: Sensitivity and Specificity **0:11:46**
- **\[📺 \]** Machine Learning Fundamentals: Bias and Variance **0:06:36**
- **\[📺 \]** ROC and AUC, Clearly Explained! **0:16:26**

------------------------------------------------------------------------

### Be familiar with the inner workings of models

**Bayes’ theorem is super interesting and applicable:**

- **\[📰\]** Naive Bayes classification
- **\[📰\]** Linear regression
- **\[📰\]** Polynomial regression
- **\[📰\]** Logistic regression
- **\[📰\]** Decision trees
- **\[📰\]** K-nearest neighbors
- **\[📰\]** Support Vector Machines
- **\[📰\]** Random forests
- **\[📰\]** Boosted trees
- **\[📰\]** Hacker’s Guide to Fundamental Machine Learning Algorithms with Python
- **\[📰\]** Neural networks: activation functions
- **\[📰\]** Neural networks: training with backpropagation
- **\[📰\]** Neural Network from scratch-part 1
- **\[📰\]** Neural Network from scratch-part 2
- **\[📰\]** Perceptron to Deep-Neural-Network
- **\[📰\]** One-vs-Rest strategy for Multi-Class Classification
- **\[📰\]** Multi-class Classification — One-vs-All & One-vs-One
- **\[📰\]** One-vs-Rest and One-vs-One for Multi-Class Classification
- **\[📰\]** Deep Learning Algorithms — The Complete Guide
- **\[📰\]** Machine Learning Techniques Primer
- **\[ \]** AWS: Understanding Neural Networks
- **\[📖\]** Grokking Deep Learning
- **\[📖\]** Make Your Own Neural Network
- **\[ \]** Coursera: Neural Networks and Deep Learning
- **\[💻\]** Extreme Gradient Boosting with XGBoost
- **\[💻\]** Ensemble Methods in Python
- **\[ \]** StatQuest: Machine Learning
    - **\[ \]** StatQuest: Fitting a line to data, aka least squares, aka linear regression. **0:09:21**
    - **\[ \]** StatQuest: Linear Models Pt.1 — Linear Regression **0:27:26**
    - **\[ \]** StatQuest: Linear Models Pt.2 — t-tests and ANOVA **0:11:37**
    - **\[ \]** StatQuest: Odds and Log(Odds), Clearly Explained!!! **0:11:30**
    - **\[ \]** StatQuest: Odds Ratios and Log(Odds Ratios), Clearly Explained!!! **0:16:20**
    - **\[ \]** StatQuest: Logistic Regression **0:08:47**
    - **\[ \]** Logistic Regression Details Pt1: Coefficients **0:19:02**
    - **\[ \]** Logistic Regression Details Pt 2: Maximum Likelihood **0:10:23**
    - **\[ \]** Logistic Regression Details Pt 3: R-squared and p-value **0:15:25**
    - **\[ \]** Saturated Models and Deviance **0:18:39**
    - **\[ \]** Deviance Residuals **0:06:18**
    - **\[ \]** Regularization Part 1: Ridge (L2) Regression **0:20:26**
    - **\[ \]** Regularization Part 2: Lasso (L1) Regression **0:08:19**
    - **\[ \]** Ridge vs Lasso Regression, Visualized!!! **0:09:05**
    - **\[ \]** Regularization Part 3: Elastic Net Regression **0:05:19**
    - **\[ \]** StatQuest: Principal Component Analysis (PCA), Step-by-Step **0:21:57**
    - **\[ \]** StatQuest: PCA main ideas in only 5 minutes!!! **0:06:04**
    - **\[ \]** StatQuest: PCA — Practical Tips **0:08:19**
    - **\[ \]** StatQuest: PCA in Python **0:11:37**
    - **\[ \]** StatQuest: Linear Discriminant Analysis (LDA) clearly explained. **0:15:12**
    - **\[ \]** StatQuest: MDS and PCoA **0:08:18**
    - **\[ \]** StatQuest: t-SNE, Clearly Explained **0:11:47**
    - **\[ \]** StatQuest: Hierarchical Clustering **0:11:19**
    - **\[ \]** StatQuest: K-means clustering **0:08:57**
    - **\[ \]** StatQuest: K-nearest neighbors, Clearly Explained **0:05:30**
    - **\[ \]** Naive Bayes, Clearly Explained!!! **0:15:12**
    - **\[ \]** Gaussian Naive Bayes, Clearly Explained!!! **0:09:41**
    - **\[ \]** StatQuest: Decision Trees **0:17:22**
    - **\[ \]** StatQuest: Decision Trees, Part 2 — Feature Selection and Missing Data **0:05:16**
    - **\[ \]** Regression Trees, Clearly Explained!!! **0:22:33**
    - **\[ \]** How to Prune Regression Trees, Clearly Explained!!! **0:16:15**
    - **\[ \]** StatQuest: Random Forests Part 1 — Building, Using and Evaluating **0:09:54**
    - **\[ \]** StatQuest: Random Forests Part 2: Missing data and clustering **0:11:53**
    - **\[ \]** The Chain Rule **0:18:23**
    - **\[ \]** Gradient Descent, Step-by-Step **0:23:54**
    - **\[ \]** Stochastic Gradient Descent, Clearly Explained!!! **0:10:53**
    - **\[ \]** AdaBoost, Clearly Explained **0:20:54**
    - **\[⨊ \]** Part 1: Regression Main Ideas **0:15:52**
    - **\[⨊ \]** Part 2: Regression Details **0:26:45**
    - **\[⨊ \]** Part 3: Classification **0:17:02**
    - **\[⨊ \]** Part 4: Classification Details **0:36:59**
    - **\[⨊ \]** Support Vector Machines, Clearly Explained!!! **0:20:32**
    - **\[ \]** Support Vector Machines Part 2: The Polynomial Kernel **0:07:15**
    - **\[ \]** Support Vector Machines Part 3: The Radial (RBF) Kernel **0:15:52**
    - **\[ \]** XGBoost Part 1: Regression **0:25:46**
    - **\[ \]** XGBoost Part 2: Classification **0:25:17**
    - **\[ \]** XGBoost Part 3: Mathematical Details **0:27:24**
    - **\[ \]** XGBoost Part 4: Crazy Cool Optimizations **0:24:27**
    - **\[ \]** StatQuest: Fitting a curve to data, aka lowess, aka loess **0:10:10**
    - **\[ \]** Statistics Fundamentals: Population Parameters **0:14:31**
    - **\[ \]** Principal Component Analysis (PCA) clearly explained (2015) **0:20:16**
    - **\[ \]** Decision Trees in Python from Start to Finish **1:06:23**
- **\[🅤\]ꭏ** Classification Models
- **\[📺 \]** Neural Networks from Scratch in Python
    - **\[ \]** Neural Networks from Scratch — P.1 Intro and Neuron Code **0:16:59**
    - **\[ \]** Neural Networks from Scratch — P.2 Coding a Layer **0:15:06**
    - **\[ \]** Neural Networks from Scratch — P.3 The Dot Product **0:25:17**
    - **\[ \]** Neural Networks from Scratch — P.4 Batches, Layers, and Objects **0:33:46**
    - **\[ \]** Neural Networks from Scratch — P.5 Hidden Layer Activation Functions **0:40:05**
- **\[📺 \]** Applied Machine Learning 2020–03 Supervised learning and model validation **1:12:00**
- **\[📺 \]** Applied Machine Learning 2020–05 — Linear Models for Regression **1:06:54**
- **\[📺 \]** Applied Machine Learning 2020–06 — Linear Models for Classification **1:07:50**
- **\[📺 \]** Applied Machine Learning 2020–07 — Decision Trees and Random Forests **1:07:58**
- **\[📺 \]** Applied Machine Learning 2020–08 — Gradient Boosting **1:02:12**
- **\[📺 \]** Applied Machine Learning 2020–18 — Neural Networks **1:19:36**
- **\[📺 \]** Applied Machine Learning 2020–12 — AutoML (plus some feature selection) **1:25:38**

------------------------------------------------------------------------

*Originally published at* https://dev.to *on November 12, 2020.*

By Bryan Guner on [November 12, 2020](https://medium.com/p/382ee243f23c).

Exported from [Medium](https://medium.com) on September 23, 2021.
