Useful tips and tricks for running on Princeton's computing clusters. (Adapted from https://gist.github.com/smsharma/c579c6563eed954f2283)

feynman.princeton.edu

Feynman-hepheno has 3 nodes: node022 with 16 cores and 128GB memory, and node040 and node041, each with 28 cores and 256GB memory.

- Three areas: home (`~`), group (`/group/hepheno/`) and group storage (`/mnt/hepheno/`)
- Best to work from your user directory in `/group/hepheno`, since `~` has very little storage space. Set a `cd /group/hepheno/` in `~/.bashrc`!
- The contents of `/group/hepheno/group-setup.sh` are automatically sourced on `cd` into the group directory `/group/hepheno/`:
  - Activates a python virtual environment (see below)
  - Initiates MPI and sets a few environment variables
- Different compiler versions (intel/gnu) as well as OpenMPI/Intel MPI versions can be activated using `module load <module>`, and the available modules can be seen using `module avail`.
- Typical env:

- SLURM scheduler. Typical batch file:

```bash
#!/bin/bash
#SBATCH -N 1 # node count
#SBATCH --ntasks-per-node=12
#SBATCH -t 4:00:00
#SBATCH --mail-type=begin
#SBATCH --mail-type=end
#SBATCH --mail-user=<username>@princeton.edu
#SBATCH -p hepheno

cd /hepheno/heptools/test_code
# The number of MPI ranks (-n) should match --ntasks-per-node above
mpiexec.hydra -n 12 python run.py
```

- To submit the job: `sbatch submit.batch`
- Standard output and error are written to the directory the job was submitted from.
- Useful commands: `squeue [-u <username>]` to view jobs; `scancel -u <username>` and `scancel <jobid>` to cancel jobs
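The batch file above hard-codes 12 in two places (`--ntasks-per-node` and `mpiexec.hydra -n`), and the two can easily drift apart. Below is a minimal bash sketch of a hypothetical `write_batch` helper that generates the file from a single rank count. It assumes the same `hepheno` partition and `python <script>` entry point as above. It is not part of the cluster setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the cluster setup): write a SLURM batch
# file in which --ntasks-per-node and the MPI rank count always agree.
# Usage: write_batch <outfile> <ntasks> <walltime> <workdir> <script.py>
write_batch() {
  if [ "$#" -ne 5 ]; then
    echo "usage: write_batch <outfile> <ntasks> <walltime> <workdir> <script.py>" >&2
    return 1
  fi
  local out="$1" ntasks="$2" walltime="$3" workdir="$4" script="$5"
  # Reject non-numeric rank counts before writing anything
  case "$ntasks" in
    ''|*[!0-9]*) echo "ntasks must be a number" >&2; return 1 ;;
  esac
  cat > "$out" <<EOF
#!/bin/bash
#SBATCH -N 1 # node count
#SBATCH --ntasks-per-node=${ntasks}
#SBATCH -t ${walltime}
#SBATCH -p hepheno

cd ${workdir}
mpiexec.hydra -n ${ntasks} python ${script}
EOF
}
```

For example, `write_batch submit.batch 12 4:00:00 "$PWD" run.py && sbatch submit.batch` reproduces the file above without the mail options.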

Data/code:

- The Fermi data is stored in `/mnt/hepheno/CTBCORE/` and `/mnt/hepheno/FermiData`
- `/group/hepheno/heptools/` contains relevant programs (MultiNest, Pythia, etc.) as well as the NPTF code (likely outdated)

[tiger/della/perseus].princeton.edu

Tiger has 16 cores/node and della has 20 cores/node.

- Home dir `~` (negligible storage space), `/scratch/gpfs/` (temp work, short-lived branches, etc.), `/tigress/` (storage area, everything else)
- Both tiger and della use the common storage space `/tigress/`, so it is probably best to work from there
- Fermi data in `/tigress/smsharma/public`
- Software in `/tigress/smsharma/public/heptools` (including NPTF code)
- There is no group setup, so you need to run the following before using it:

```bash
cd /tigress/smsharma/public/heptools/MultiNest/
export LD_LIBRARY_PATH=$(pwd)/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}
cd /tigress/smsharma/public/heptools
source venv/bin/activate
export PYTHONPATH=$PYTHONPATH:/tigress/smsharma/public/heptools/Fermi-NPTF-edep
module load openmpi
```

Most of this is just random stuff that I find useful while running on the cluster.

If you want to use Sublime Text, either use rsub or Sublime FTP

Instructions for rsub:

- Put this script somewhere on the cluster and name the file `rsub`. `chmod +x` the file. Change the `port` setting to the desired one (here, `port=52697`)
- Create an alias `rsub` pointing to that file
- Install `rsub` locally in Sublime via the ST package manager. Open the package settings and set the port appropriately (`"port": 52697`).
- Add the following lines in `~/.ssh/config`, for each cluster being used:

```
Host feynman.princeton.edu
    RemoteForward 52697 127.0.0.1:52697
```
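Repeating that block by hand for every cluster is error-prone. Below is a minimal bash sketch with a hypothetical `add_rsub_forward` function. It appends one `RemoteForward` block per host and skips any host that already has a `Host` line. It does not merge into existing entries, so check those by hand.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an rsub RemoteForward block for each host to an
# ssh config file, skipping hosts that already have a "Host <name>" line.
# Usage: add_rsub_forward <config-file> <port> <host>...
add_rsub_forward() {
  local config="$1" port="$2" host
  shift 2
  mkdir -p "$(dirname "$config")"
  touch "$config"
  for host in "$@"; do
    # -x: whole-line match, -F: treat the host name literally (dots included)
    if grep -qxF "Host $host" "$config"; then
      continue
    fi
    printf 'Host %s\n    RemoteForward %s 127.0.0.1:%s\n\n' "$host" "$port" "$port" >> "$config"
  done
}
```

For example: `add_rsub_forward ~/.ssh/config 52697 feynman.princeton.edu della.princeton.edu tiger.princeton.edu`.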

tmux allows multiple persistent terminal sessions

- Install a local version of `tmux` using this script
- `tmux new-session -s <name>` to start a new session
- `tmux attach -t <name>` to attach to a named session
- `Ctrl-B d` to detach from a session
- `tmux attach` to attach to the most recent session
- `Ctrl-B $` to rename a session
- `tmux kill-session -t <name>` to kill a session
- `tmux ls` to view active sessions
- `set-window-option -g aggressive-resize` (in `~/.tmux.conf`) to properly resize across different sessions

Here's a nice cheatsheet.

To get proper mouse scrolling in iTerm, add the following to `~/.tmux.conf` (might not work; the `mouse` option requires tmux 2.1 or later):

```
set-option -g mouse on
```
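tmux's `new-session -A` combines `new-session` and `attach` from the list above. It attaches to the named session if it already exists and creates it otherwise. Below is a small function for `~/.bashrc`. The name `ta` and the default session name `main` are arbitrary choices.

```bash
#!/usr/bin/env bash
# Attach to the tmux session named $1 (default "main"), creating it if needed.
# -A makes new-session behave like attach-session when a session of that
# name already exists.
ta() {
  tmux new-session -A -s "${1:-main}"
}
```

Running `ta sims` on each login always lands you in the same persistent session.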

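```bash
kill -9 -1          # kill all of your own processes (this will usually log you out too)
pkill -u <user>     # kill all processes owned by <user>
```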

To run a Jupyter notebook on feynman and open it in your local browser:

- Go to the root folder from where you can navigate to your notebooks

- On Feynman, type `jupyter notebook --no-browser --port=7000`. NB: Replace 7000 with something unique (7xxx).
- On your computer, type `ssh -N -f -L localhost:7000:localhost:7000 <username>@feynman.princeton.edu`, using the same port number
- In your web browser, go to http://localhost:7000
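One way to pick the "unique 7xxx" port without guessing is to derive it from your numeric user ID on feynman. Below is a minimal sketch. `jupyter_port` and `jupyter_tunnel_cmd` are hypothetical helpers, and two users can still collide if their UIDs share the last three digits.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the Jupyter-over-ssh recipe.

# Map a numeric user ID (default: your own) into the 7000-7999 range.
jupyter_port() {
  local uid="${1:-$(id -u)}"
  echo $(( 7000 + uid % 1000 ))
}

# Print the local tunnel command for a given port and cluster username.
jupyter_tunnel_cmd() {
  local port="$1" user="$2" host="${3:-feynman.princeton.edu}"
  echo "ssh -N -f -L localhost:${port}:localhost:${port} ${user}@${host}"
}
```

To use them:

- On feynman, run `jupyter notebook --no-browser --port="$(jupyter_port)"` and note the port.
- Your local UID is generally different, so on your laptop pass that number explicitly. For example, `jupyter_tunnel_cmd 7456 <username>` prints the ssh command to run.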

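To follow a job's output file as it is being written:

```bash
tail -f ~/HL_Sim1_NPTF_0-15_np3.batch.o288395
```

To get the number of online processors (cores) on the current machine:

```bash
getconf _NPROCESSORS_ONLN
```

So you don't have to type the ssh command each time you log in, append these to your `~/.bashrc` or `~/.bash_profile`: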

```bash
# Aliases for ssh-ing into clusters
alias feynman="ssh -Y <username>@feynman.princeton.edu"
alias della="ssh -Y <username>@della.princeton.edu"
alias tiger="ssh -Y <username>@tiger.princeton.edu"
alias perseus="ssh -Y <username>@perseus.princeton.edu"
```
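The four aliases differ only in the host name, so they can also be generated in a loop. Below is a sketch for `~/.bashrc`. `CLUSTER_USER` is a hypothetical variable that you would set to your own username.

```bash
#!/usr/bin/env bash
# Generate one ssh alias per cluster instead of writing each by hand.
# CLUSTER_USER is a placeholder variable: set it to your cluster username.
CLUSTER_USER="${CLUSTER_USER:-username}"
for cluster in feynman della tiger perseus; do
  # e.g. defines: alias della='ssh -Y username@della.princeton.edu'
  alias "${cluster}=ssh -Y ${CLUSTER_USER}@${cluster}.princeton.edu"
done
unset cluster
```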

So you don't have to type a password each time you log in. For Linux and Mac OS X (sorry, Windows users).

- Create a private/public keypair on your local machine (note: if you already have an existing keypair, you can skip this step): `ssh-keygen -t rsa`. Enter a passphrase and specify the output file path as prompted.
- Copy the public key to the cluster:
  - Linux: `ssh-copy-id -i ~/.ssh/id_rsa.pub <username>@<cluster>.princeton.edu`
  - OS X: `cat ~/.ssh/id_rsa.pub | ssh <username>@<cluster>.princeton.edu "mkdir -p ~/.ssh; cat >> ~/.ssh/authorized_keys"`
- Enter passwords as prompted, and you should be good to go!
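For reference, here is roughly what the OS X one-liner does on the cluster side, written as a bash function. It adds two safeguards the one-liner lacks:

- It does not append a key that is already present.
- It sets the permissions (`700` on the directory, `600` on `authorized_keys`) that keep sshd's `StrictModes` check satisfied.

`install_pubkey` is a hypothetical name. The optional second argument exists only so the sketch can be tried on a scratch directory.

```bash
#!/usr/bin/env bash
# Hypothetical cluster-side helper: add a public key to authorized_keys once.
# Usage: install_pubkey <pubkey-file> [ssh-dir, default ~/.ssh]
install_pubkey() {
  local pubkey_file="$1" ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$auth" && chmod 600 "$auth" || return 1
  # -x whole-line match, -F literal, -f: use the key line(s) as patterns
  if ! grep -qxF -f "$pubkey_file" "$auth"; then
    cat "$pubkey_file" >> "$auth"
  fi
}
```

To use it, copy the key over with `scp ~/.ssh/id_rsa.pub <username>@<cluster>.princeton.edu:`, then run `install_pubkey ~/id_rsa.pub` on the cluster. On Linux, `ssh-copy-id` already handles most of this.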

- Download the appropriate version with `wget` from here.
- Simply do e.g. `bash Anaconda2-4.3.0-Linux-x86_64.sh`. Make sure to choose an install location (prefix) somewhere nice and spacious rather than your home dir.
- Conda is now installed, and it should also have added `conda` to your `PATH` (otherwise do something like `export PATH="/group/hepheno/heptools/anaconda3/bin:$PATH"`). This installs the usual stuff like `[num/sci/astro]py`, etc.
- Create a new venv with `conda create -n venv_py27 python=2.7 anaconda`; you can see the available Python versions with `conda search "^python$"`.
- Activate your beautiful venv with `source activate venv_py27` (on conda 4.4 and later, `conda activate venv_py27`). You can now `pip install` into it and do whatever you please.
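Several setups here add a directory to a colon-separated variable:

- the `export PATH=...:$PATH` line above;
- the `LD_LIBRARY_PATH` exports in the tiger/della and MultiNest setups;
- the `PYTHONPATH` export.

Re-sourcing these from `~/.bashrc` keeps adding duplicates. Below is a minimal bash sketch of a hypothetical `path_prepend` function that skips duplicates. Like the `${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}` idiom, it leaves no stray `:` when the variable starts out empty (in `PATH`, an empty entry means the current directory).

```bash
#!/usr/bin/env bash
# Hypothetical helper: prepend DIR to the colon-separated variable VAR
# (PATH, LD_LIBRARY_PATH, PYTHONPATH, ...) unless it is already there.
# Usage: path_prepend VAR DIR
path_prepend() {
  local var="$1" dir="$2"
  local current="${!var}"            # indirect expansion: value of $VAR
  case ":$current:" in
    *":$dir:"*) return 0 ;;          # already present, nothing to do
  esac
  # ${current:+:$current} adds ":<old value>" only if the old value is non-empty
  printf -v "$var" '%s' "$dir${current:+:$current}"
  export "$var"
}
```

For example: `path_prepend PATH /group/hepheno/heptools/anaconda3/bin` or `path_prepend LD_LIBRARY_PATH /tigress/smsharma/public/heptools/MultiNest/lib`.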

TODO: working with multiple Python versions in venvs for development. TODO: pipenv

```bash
curl -LO https://pypi.python.org/packages/source/v/virtualenv/virtualenv-1.9.tar.gz # Check for latest version
tar xzf virtualenv-1.9.tar.gz
python virtualenv-1.9/virtualenv.py venv
source venv/bin/activate
```

You can use a different Python executable with `virtualenv -p /usr/bin/python2.6 <path/to/new/virtualenv/>` (in case the default one is old, for example).

autoenv automatically sources a directory's `.env` file when you `cd` into it (e.g. to activate a virtual environment) -- useful!
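autoenv's core idea is to source a setup file when you `cd` into a directory. The feynman group directory behaves the same way: `/group/hepheno/group-setup.sh` is sourced on `cd`. The exact mechanism used there isn't described here, so treat the following as a hypothetical bash sketch of the idea, not the cluster's actual implementation. `GROUP_DIR` and `SETUP_FILE` are assumed names.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: wrap `cd` so that entering the group directory
# sources its setup script. The real mechanism on feynman may differ.
GROUP_DIR="${GROUP_DIR:-/group/hepheno}"
SETUP_FILE="group-setup.sh"

cd() {
  builtin cd "$@" || return          # call the real cd, keep its exit status
  # Only trigger when landing exactly in the group directory
  if [ "$PWD" = "$GROUP_DIR" ] && [ -f "$GROUP_DIR/$SETUP_FILE" ]; then
    source "$GROUP_DIR/$SETUP_FILE"
  fi
}

# ~/.bashrc part: start each login in the group directory, if it exists
if [ -d "$GROUP_DIR" ]; then
  cd "$GROUP_DIR"
fi
```

Defining a function named `cd` shadows the builtin, which is why the function calls `builtin cd` rather than `cd` (which would recurse).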

- Download and untar PolSpice:

```bash
wget ftp://ftp.iap.fr/pub/from_users/hivon/PolSpice/PolSpice_v03-01-06.tar.gz
tar -zxvf PolSpice_v03-01-06.tar.gz
cd PolSpice[..]
```

- Make sure `$HEALPIX` is set to your root Healpix directory and edit the `Makefile` in `src`. My header:

```make
#
# Makefile to customize for your system
#
# system variable HEALPIX must be defined for compilation
# and at run time
#
FC = gfortran # F90 compiler
FCFLAGS = -fopenmp -lgomp # compiler options
FITSLIB = /group/hepheno/heptools/cfitsio/ # cfitsio library location (directory)
SUFF = F90 # Healpix f90 include and lib suffix
HPXINC = $(HEALPIX)/include$(SUFF) # Healpix f90 include files location
HPXLIB = $(HEALPIX)/lib$(SUFF) # Healpix f90 library location
#
INC = -I. -I$(HPXINC)
LIBS = -L$(HPXLIB) -lhealpix -L$(FITSLIB) -lcfitsio
#
```

- Add `/PolSpice/src` to your `$PATH` so that `spice` is available
- See the example in `CAPS.ipynb` in the `Rockstar` directory. The other file you'll need is `spicepy.py` from the same directory.
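The Makefile header above only compiles if `$HEALPIX` is set and the Healpix include/lib directories and the cfitsio directory exist. Below is a small bash pre-flight check along those lines. `check_polspice_env` is a hypothetical name. The `include$SUFF`/`lib$SUFF` layout and the default cfitsio path simply mirror the header shown above.

```bash
#!/usr/bin/env bash
# Hypothetical pre-build check for the PolSpice Makefile header above.
# Usage: check_polspice_env [suffix, default F90] [cfitsio dir]
check_polspice_env() {
  local suff="${1:-F90}"
  local fitslib="${2:-/group/hepheno/heptools/cfitsio}"
  local status=0 dir
  if [ -z "${HEALPIX:-}" ]; then
    echo "HEALPIX is not set" >&2
    return 1
  fi
  # Report every missing directory, not just the first one
  for dir in "$HEALPIX/include$suff" "$HEALPIX/lib$suff" "$fitslib"; do
    if [ ! -d "$dir" ]; then
      echo "missing directory: $dir" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run it from the PolSpice directory before building, e.g. `check_polspice_env && (cd src && make)`.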

```bash
cd /group/hepheno/smsharma/ #cd /group/hepheno/heptools/
git clone https://github.com/JohannesBuchner/MultiNest
source /opt/intel/impi/5.0.3.048/bin64/mpivars.sh
mkdir -p MultiNest/build && cd MultiNest/build
cmake -DCMAKE_Fortran_COMPILER=gfortran ..
make
make install #optional, probably don't need it / might not work
cd ..
# Need to do this every time -- replace $(pwd) with the install dir and add to group-setup.sh or ~/.bashrc.
export LD_LIBRARY_PATH=$(pwd)/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}
```

```bash
pip install numpy matplotlib scipy healpy pandas Cython mpmath pymultinest mpi4py numexpr astropy ipython jupyter ipyparallel joblib tqdm iminuit
pip install scikit-learn keras tensorflow
```

If astropy and numpy happen not to play nice, the following definitely work:

```bash
pip install numpy==1.9.1
pip install astropy==1.0.3
```

Try installing OpenBLAS:

```bash
git clone https://github.com/xianyi/OpenBLAS
cd OpenBLAS
make FC=gfortran
```

- Install `nodejs`: `conda install -c conda-forge nodejs`
- Install the JupyterLab extension with widgets as in here.

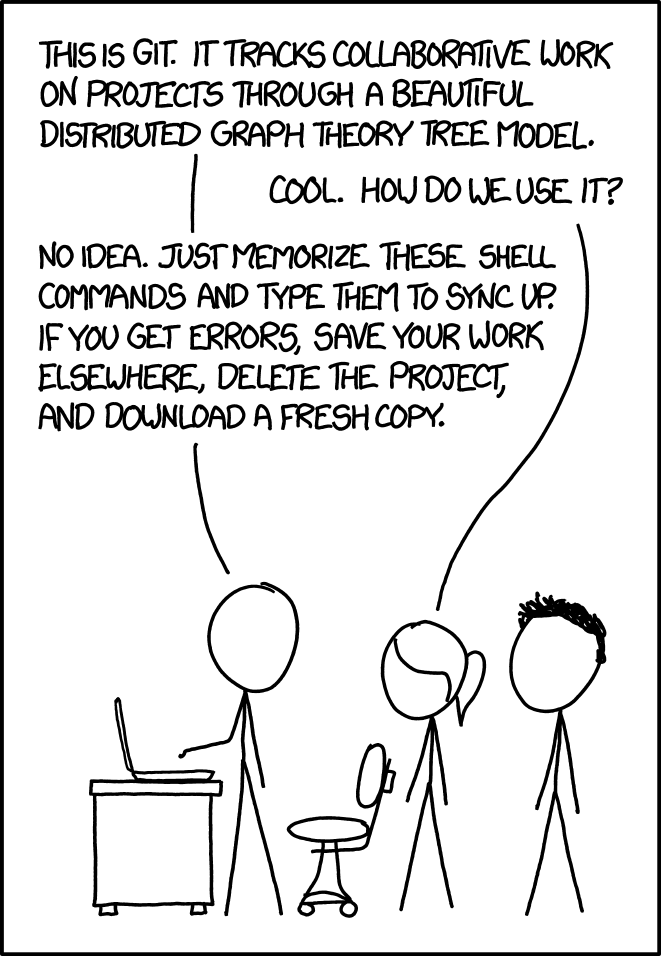

- To clone a repository: `git clone <remote link>`
- To pull updates from the remote: `git pull`
- To stage all local changes (from the folder you're in): `git add -A .`
- To delete files and stage their removal: `git rm <files>`
- To commit staged changes: `git commit -m "<Descriptive commit message>"`
- To push commits to the remote: `git push`
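The commands above can be tried end to end without touching a real remote. This bash sketch uses a throwaway bare repository in a temporary directory as a stand-in for `<remote link>` (an assumption for illustration). It plays both sides:

- a "laptop" checkout that commits and pushes;
- a "cluster" checkout that clones and later pulls.

The author identity is a placeholder, set through git's standard `GIT_AUTHOR_*`/`GIT_COMMITTER_*` environment variables.

```bash
#!/usr/bin/env bash
# Walk through clone/add/rm/commit/push/pull against a local bare "remote".
set -euo pipefail

work="$(mktemp -d)"
git init --quiet --bare "$work/remote.git"
# Pin the branch name so the sketch does not depend on init.defaultBranch
git --git-dir="$work/remote.git" symbolic-ref HEAD refs/heads/main

# Placeholder identity so `git commit` works on a fresh machine
export GIT_AUTHOR_NAME="Example User" GIT_AUTHOR_EMAIL="user@example.com"
export GIT_COMMITTER_NAME="$GIT_AUTHOR_NAME" GIT_COMMITTER_EMAIL="$GIT_AUTHOR_EMAIL"

# Laptop: clone, add, commit, remove, push
git clone --quiet "$work/remote.git" "$work/laptop" 2>/dev/null  # git clone <remote link>
cd "$work/laptop"
git symbolic-ref HEAD refs/heads/main
echo "print('hello')" > run.py
echo "scratch" > notes.txt
git add -A .                              # stage every change under this folder
git commit --quiet -m "Add run.py and notes"
git rm --quiet notes.txt                  # delete a file and stage the deletion
git commit --quiet -m "Remove notes"
git push --quiet origin main              # publish commits to the remote

# Cluster: clone, then pull a later update made on the laptop
git clone --quiet "$work/remote.git" "$work/cluster"
echo "print('hello again')" > "$work/laptop/run.py"
git -C "$work/laptop" commit --quiet -am "Update run.py"
git -C "$work/laptop" push --quiet origin main
git -C "$work/cluster" pull --quiet --ff-only   # fetch and fast-forward
```

The cluster checkout now has three commits and the updated `run.py`, and `notes.txt` is gone.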