-

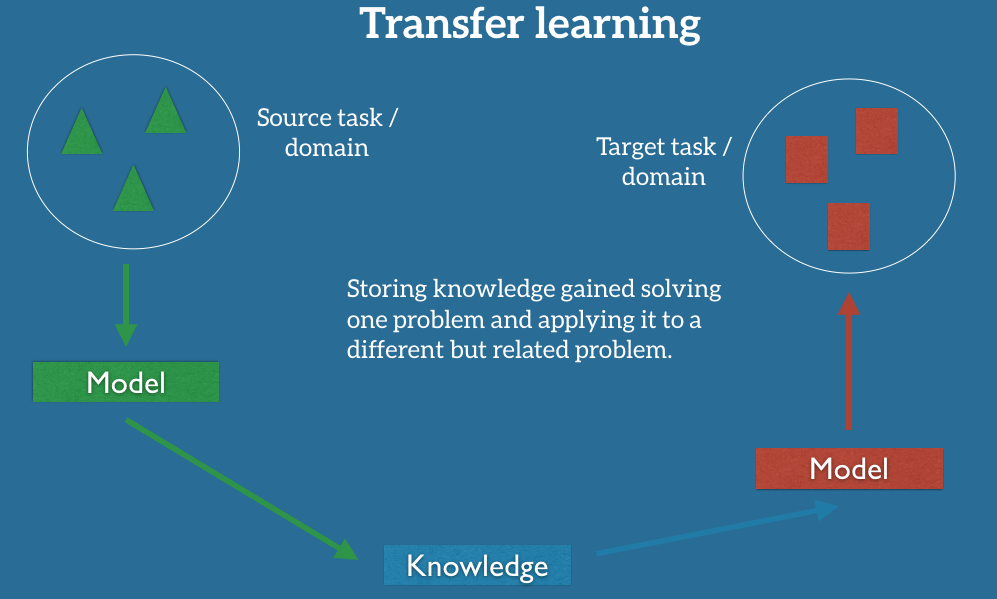

Transfer learning is the process of training a model on a large-scale dataset and then using that pretrained model as the starting point for learning another downstream task (i.e., the target task).

- Deep Learning (Adaptive Computation and Machine Learning series)

Transfer learning and domain adaptation refer to the situation where what has been learned in one setting … is exploited to improve generalization in another setting

- Handbook of Research on Machine Learning Applications, 2009.

Transfer learning is the improvement of learning in a new task through the transfer of knowledge from a related task that has already been learned.

- Develop Model Approach

- Pre-trained Model Approach

Develop Model Approach

- Select Source Task. You must select a related predictive modeling problem with an abundance of data where there is some relationship in the input data, output data, and/or concepts learned during the mapping from input to output data.

- Develop Source Model. Next, you must develop a skillful model for this first task. The model must be better than a naive model to ensure that some feature learning has been performed.

- Reuse Model. The model fit on the source task can then be used as the starting point for a model on the second task of interest. This may involve using all or parts of the model, depending on the modeling technique used.

- Tune Model. Optionally, the model may need to be adapted or refined on the input-output pair data available for the task of interest.
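The four steps above can be sketched in plain Python with logistic regression standing in for "the model". This is a toy sketch under assumptions I made up for illustration: the source rule (`x0 + x1 > 0`), the related target rule (`x0 + 0.8*x1 > 0.1`), the dataset sizes, and helper names such as `train_logreg` and `make_data` are all hypothetical. "Reuse" here means initializing the target model with the source weights, and "tune" means a few extra epochs of gradient descent on the small target set.

```python
import math
import random

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, x):
    # w holds one weight per feature, followed by a bias term
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])

def train_logreg(data, init=None, epochs=200, lr=0.5):
    """Batch gradient descent on the logistic loss.
    `init` lets training start from existing weights (the Reuse step)."""
    n = len(data[0][0])
    w = list(init) if init is not None else [0.0] * (n + 1)
    for _ in range(epochs):
        grad = [0.0] * (n + 1)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += err * xi
            grad[-1] += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def accuracy(w, data):
    return sum((predict(w, x) >= 0.5) == (y == 1) for x, y in data) / len(data)

def make_data(rule, n, rng):
    # hypothetical 2-D points labeled by `rule`
    points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    return [(p, 1 if rule(p) else 0) for p in points]

rng = random.Random(0)
# 1. Select Source Task: plenty of data, related to the (small) target task
source = make_data(lambda p: p[0] + p[1] > 0, 500, rng)
target = make_data(lambda p: p[0] + 0.8 * p[1] > 0.1, 40, rng)

# 2. Develop Source Model, and check it beats a naive majority-class baseline
source_w = train_logreg(source)
naive = max(sum(y for _, y in source), sum(1 - y for _, y in source)) / len(source)
print("source accuracy:", accuracy(source_w, source), "naive:", naive)

# 3. Reuse Model + 4. Tune Model: start from the source weights, train briefly on target data
target_w = train_logreg(target, init=source_w, epochs=20)
print("target accuracy:", accuracy(target_w, target))
```

Comparing against the naive baseline mirrors the "better than a naive model" requirement of the Develop Source Model step.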

Pre-trained Model Approach

- Select Source Model. A pre-trained source model is chosen from available models. Many research institutions release models trained on large and challenging datasets, which may be included in the pool of candidate models to choose from.

- Reuse Model. The pre-trained model can then be used as the starting point for a model on the second task of interest. This may involve using all or parts of the model, depending on the modeling technique used.

- Tune Model. Optionally, the model may need to be adapted or refined on the input-output pair data available for the task of interest.

This second type of transfer learning is common in the field of deep learning.
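A minimal sketch of the Reuse and Tune steps for the pre-trained approach, assuming a tiny hypothetical pre-trained layer: the weights in `PRETRAINED_HIDDEN` are made up and only stand in for a released checkpoint. The pre-trained layer is kept frozen and used as a feature extractor, and only a new output head is trained on the target task's input-output pairs. The helper names and the toy target task (label 1 when `x0 > x1`) are also hypothetical.

```python
import math

# Hypothetical "pre-trained" layer (2 inputs -> 3 ReLU units); values are illustrative only
PRETRAINED_HIDDEN = {
    "W": [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0]],
    "b": [0.0, 0.0, 0.0],
}

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def extract_features(x, layer=PRETRAINED_HIDDEN):
    """Reuse: forward pass through the frozen pre-trained layer (its weights are never updated)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(layer["W"], layer["b"])]

def head_predict(head, feats):
    return sigmoid(sum(w * f for w, f in zip(head, feats)) + head[-1])

def train_head(data, epochs=100, lr=0.5):
    """Tune: fit a new logistic-regression head on top of the frozen features."""
    feats = [(extract_features(x), y) for x, y in data]  # computed once; the layer stays frozen
    head = [0.0] * (len(feats[0][0]) + 1)  # last entry is the bias
    for _ in range(epochs):
        grad = [0.0] * len(head)
        for f, y in feats:
            err = head_predict(head, f) - y
            for i, fi in enumerate(f):
                grad[i] += err * fi
            grad[-1] += err
        head = [h - lr * g / len(feats) for h, g in zip(head, grad)]
    return head

# Small hypothetical target task: label 1 when x0 > x1
target = [((1.0, 0.0), 1), ((0.5, -0.5), 1), ((2.0, 1.0), 1),
          ((0.0, 1.0), 0), ((-0.5, 0.5), 0), ((1.0, 2.0), 0)]
head = train_head(target)
preds = [int(head_predict(head, extract_features(x)) >= 0.5) for x, _ in target]
print(preds)
```

Freezing the reused layer keeps the number of trained parameters small, which matters when the target dataset is small; unfreezing it as well would turn this into full fine-tuning.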

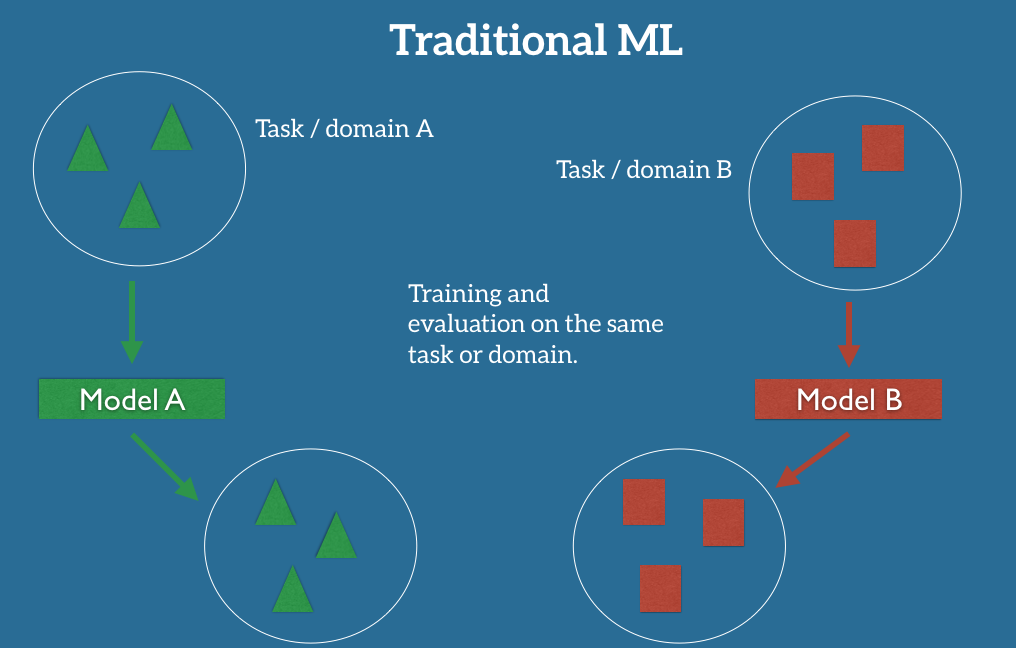

Traditional ML (src)

Transfer learning (src)

Types of transfer learning (src)

- Neural Transfer Learning for NLP

The current generation of neural network-based natural language processing models excels at learning from large amounts of labelled data. Given these capabilities, natural language processing is increasingly applied to new tasks, new domains, and new languages. Current models, however, are sensitive to noise and adversarial examples and prone to overfitting [....] We make several contributions to transfer learning for natural language processing: Firstly, we propose new methods to automatically select relevant data for supervised and unsupervised domain adaptation. Secondly, we propose two novel architectures that improve sharing in multi-task learning and outperform single-task learning as well as the state-of-the-art. Thirdly, we analyze the limitations of current models for unsupervised cross-lingual transfer and propose a method to mitigate them as well as a novel latent-variable cross-lingual word embedding model. Finally, we propose a framework based on fine-tuning language models for sequential transfer learning and analyze the adaptation phase.
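The abstract mentions fine-tuning language models for sequential transfer learning. One ingredient of that line of work is the slanted triangular learning rate from ULMFiT (Howard & Ruder, 2018): during fine-tuning the learning rate rises linearly over a short warm-up and then decays linearly over the remaining steps. Below is a sketch of that schedule; the defaults (`lr_max=0.01`, `cut_frac=0.1`, `ratio=32`) are the values suggested in the ULMFiT paper, while `slanted_triangular_lr` is a hypothetical helper name and the `max(1, ...)` guard for very short runs is my own addition.

```python
import math

def slanted_triangular_lr(t, total_steps, lr_max=0.01, cut_frac=0.1, ratio=32):
    """Learning rate at step t (0 <= t <= total_steps) under a slanted triangular schedule."""
    cut = max(1, math.floor(total_steps * cut_frac))  # step at which the peak lr is reached
    if t < cut:
        p = t / cut  # short linear warm-up
    else:
        p = 1 - (t - cut) / (cut * (1 / cut_frac - 1))  # long linear decay
    # p = 0 gives lr_max / ratio, p = 1 gives lr_max
    return lr_max * (1 + p * (ratio - 1)) / ratio

for step in (0, 50, 100, 550, 1000):
    print(step, slanted_triangular_lr(step, total_steps=1000))
```

With 1000 steps the peak of 0.01 is reached at step 100, and the rate starts and ends at 0.01 / 32.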

- Parameter-Efficient Transfer Learning for NLP

Uses adapters instead of full fine-tuning, because fine-tuning is parameter-inefficient: every new task requires a whole new fine-tuned copy of the model. Note: fine-tuning here means continuing to train the pre-trained model's weights on the new task's data; it is not the same as changing hyperparameters.
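A minimal sketch of an adapter module in the style described by that paper: a bottleneck that projects the d-dimensional hidden state down to m dimensions, applies a nonlinearity, projects back up to d, and adds a skip connection. Only the adapter weights are trained per task; the pre-trained network stays frozen. The choice of ReLU, the plain-list matrices, and the function names are my assumptions for illustration. With a zero up-projection the adapter is an exact identity map, which is why a near-zero initialization keeps the pre-trained model's behavior at the start of training.

```python
def adapter_forward(h, W_down, b_down, W_up, b_up):
    """h: hidden state of size d. W_down: m x d, W_up: d x m (lists of rows)."""
    # down-projection d -> m, followed by a ReLU nonlinearity (assumed choice)
    z = [max(0.0, sum(w * hi for w, hi in zip(row, h)) + b) for row, b in zip(W_down, b_down)]
    # up-projection m -> d
    up = [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(W_up, b_up)]
    # skip connection: output = h + adapter(h)
    return [hi + ui for hi, ui in zip(h, up)]

def adapter_param_count(d, m):
    """Weights and biases of one adapter: W_down (m*d) + b_down (m) + W_up (d*m) + b_up (d)."""
    return 2 * m * d + m + d

d, m = 4, 2
h = [0.5, -1.0, 2.0, 0.0]
W_down = [[0.1] * d for _ in range(m)]
b_down = [0.0] * m
W_up = [[0.0] * m for _ in range(d)]  # zero up-projection -> identity at initialization
b_up = [0.0] * d
print(adapter_forward(h, W_down, b_down, W_up, b_up))  # [0.5, -1.0, 2.0, 0.0]
print(adapter_param_count(768, 64), "vs", 768 * 768 + 768, "for one full 768x768 dense layer")
```

For d = 768 and a bottleneck of m = 64, one adapter adds 99,136 parameters, roughly a sixth of a single 768×768 dense layer; that gap is where the parameter efficiency comes from.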

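- Semi-Supervised Sequence Modeling with Cross-View Training

Semi-supervised learning combines supervised and unsupervised learning: part of the dataset is unlabeled, and the model being trained makes predictions on those unlabeled examples, which are then used as an additional learning signal. The model is trained with Cross-View Training (CVT), in which auxiliary prediction modules that see only a restricted view of the input are trained to match the full model's predictions on unlabeled data.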
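A sketch of the CVT consistency loss on unlabeled data, assuming the formulation from the CVT paper: for each unlabeled example, the full (primary) model's predicted distribution acts as a fixed target, and each auxiliary module's prediction is pushed toward it with a KL divergence. The probability values below are made up, the function names are hypothetical, and in a real framework the primary prediction would be detached so that no gradient flows through it.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)

def cvt_loss(unlabeled_batch):
    """unlabeled_batch: list of (primary_probs, [aux_probs, ...]) pairs, one per unlabeled example.
    The primary prediction is the target; only the auxiliary predictions should be updated."""
    total = 0.0
    for primary, auxiliaries in unlabeled_batch:
        total += sum(kl_divergence(primary, aux) for aux in auxiliaries)
    return total / len(unlabeled_batch)

batch = [
    ([0.7, 0.2, 0.1], [[0.6, 0.3, 0.1], [0.7, 0.2, 0.1]]),  # second auxiliary view already agrees
    ([0.1, 0.8, 0.1], [[0.3, 0.4, 0.3]]),
]
print(cvt_loss(batch))
```

The loss is zero only when every auxiliary view agrees with the full model, so minimizing it forces the restricted views to learn representations that are useful on their own.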

Transfer learning outside NLP

- Transfer learning for time series classification

In this paper, we fill this gap by investigating how to transfer deep CNNs for the Time Series Classification task.

- A Survey on Deep Learning of Small Sample in Biomedical Image Analysis

Uses transfer learning to "work around" the problem of learning from small samples in image classification.
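The time series paper picks the source dataset to transfer from by measuring inter-dataset similarity with Dynamic Time Warping (DTW). Below is a plain DTW distance plus a deliberately simplified selector, `pick_source` (a hypothetical helper), that compares every target series with every candidate source series and picks the source with the smallest average distance. The paper's actual selection procedure is more refined, so treat this only as an illustration of the idea; the toy series are made up.

```python
def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic-programming DTW with absolute-difference cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # extend the cheapest alignment: step in a only, step in b only, or step in both
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def pick_source(target_series, sources):
    """Hypothetical selector: name of the source dataset with the lowest mean DTW distance."""
    def mean_dist(source_series):
        dists = [dtw_distance(t, s) for t in target_series for s in source_series]
        return sum(dists) / len(dists)
    return min(sources, key=lambda name: mean_dist(sources[name]))

target = [[0.0, 1.0, 2.0, 1.0]]
sources = {
    "similar_shape": [[0.0, 0.0, 1.0, 2.0, 1.0]],  # same shape, shifted in time
    "flat": [[5.0, 5.0, 5.0, 5.0]],
}
print(dtw_distance([0.0, 1.0, 2.0], [0.0, 0.0, 1.0, 2.0]))  # 0.0: warping absorbs the shift
print(pick_source(target, sources))
```

Unlike a point-by-point Euclidean distance, DTW lets one series be stretched in time, so two datasets with the same shapes at different phases still count as similar.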

I found a few ways to search "blindly" (i.e., by automated trial and error) for the right model and hyperparameters.

- TPOT (uses genetic programming, a kind of evolutionary algorithm, to search for the model pipeline that scores best on a chosen metric, e.g. the lowest error)

- HyperparameterHunter (uses a GridSearchCV-style algorithm; roughly, it brute-forces every combination of values from the given parameter ranges, one by one)
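The grid-search idea in the list above (try every combination of candidate values and keep the best) fits in a few lines. This is a generic sketch, not the API of TPOT, HyperparameterHunter, or scikit-learn's `GridSearchCV`; `grid_search` and the toy `score` function are hypothetical, and higher scores are treated as better. TPOT's genetic-programming search is not reproduced here.

```python
import itertools

def grid_search(score_fn, param_grid):
    """Evaluate score_fn on every combination in param_grid; return (best_params, best_score, n_evals)."""
    names = sorted(param_grid)
    best_params, best_score, n_evals = None, float("-inf"), 0
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        n_evals += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_evals

def score(learning_rate, max_depth):
    # toy objective standing in for e.g. validation accuracy; peaks at lr=0.1, depth=3
    return -(learning_rate - 0.1) ** 2 - (max_depth - 3) ** 2

grid = {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [2, 3, 4]}
best, best_score, n = grid_search(score, grid)
print(best, best_score, n)
```

The number of evaluations is the product of the list lengths (3 × 3 = 9 here), which is why exhaustive grid search becomes expensive quickly and why evolutionary or random search is often used instead.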