"""
Python 3.6 Lambda function that reads the latest estimated-charges metric from CloudWatch and posts a cost summary to a Slack channel.
"""
from __future__ import division, print_function

import calendar
import copy
import datetime
import json
import logging
import os

import boto3
import requests

# https://docs.aws.amazon.com/lambda/latest/dg/python-logging.html
log = logging.getLogger()
log.setLevel(logging.INFO)

# urllib3 is noisy
logging.getLogger('botocore.vendored.requests.packages.urllib3.connectionpool').setLevel(logging.WARNING)

## From env vars
# Required
MONTHLY_BUDGET = float(os.environ["MONTHLY_BUDGET"])  # dollars
SLACK_WEBHOOK_URL = os.environ["SLACK_WEBHOOK_URL"]
SLACK_CHANNEL = os.environ["SLACK_CHANNEL"]
# Optional
COST_DASHBOARD = os.environ.get("COST_DASHBOARD", None)
AWS_ENVIRONMENT_NAME = os.environ.get("AWS_ENVIRONMENT_NAME", "default")
DEBUG = os.environ.get("DEBUG", "false").lower() == "true"

# Constants
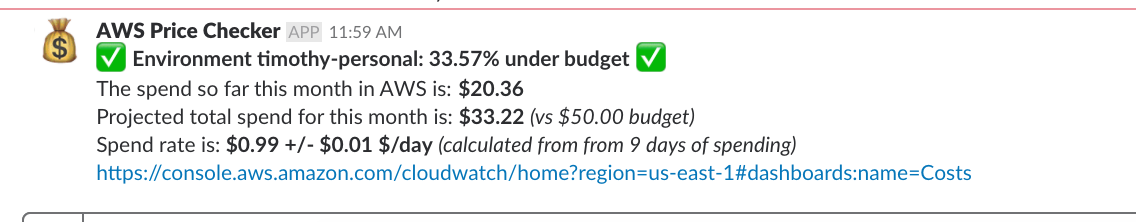

OK_STATUS = ":white_check_mark: *Environment {}: {:.2%} under budget* :white_check_mark:"
BAD_STATUS = ":warning: *Environment {}: {:.2%} over budget* :warning:"
UNKNOWN_STATUS = (
    ":question: *Environment {}: unknown cost status "
    "(no data points, or not enough points to calculate rate)* :question:"
)
ISO_8601_DT = "%Y-%m-%dT00:00:00Z"  # truncates to midnight UTC
METRIC_QUERY_PERIOD_DAYS = int(os.environ.get("METRIC_QUERY_PERIOD_DAYS", "10"))


def get_current_spend_rate():
    """
    Get the current spend and daily spend rate for this account by querying CloudWatch.

    Returns a tuple (latest_value, rate_mean, rate_stddev, n_samples, latest_timestamp).
    n_samples is 0 when there were too few data points to calculate a rate.
    """
    # https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/billing-metricscollected.html
    cloudwatch = boto3.resource('cloudwatch')
    metric = cloudwatch.Metric('AWS/Billing', 'EstimatedCharges')

    # Get metrics for the last METRIC_QUERY_PERIOD_DAYS days
    # https://boto3.readthedocs.io/en/latest/reference/services/cloudwatch.html#CloudWatch.Metric.get_statistics
    r = metric.get_statistics(
        Dimensions=[
            {
                "Name": "Currency",
                "Value": "USD",
            }
        ],
        StartTime=(datetime.datetime.utcnow() - datetime.timedelta(days=METRIC_QUERY_PERIOD_DAYS)).strftime(ISO_8601_DT),
        EndTime=datetime.datetime.utcnow().strftime(ISO_8601_DT),
        Statistics=["Maximum"],  # EstimatedCharges is a running month-to-date total, so take the daily maximum
        Period=3600*24  # daily
    )

    if DEBUG:
        from pprint import pprint
        pprint(r)

    # Sort datapoints by time and calculate the daily rate between each consecutive pair (n-1 rates)
    dp_diffs = []
    sdps = sorted(r['Datapoints'], key=lambda dp: dp['Timestamp'])
    for i in range(1, len(sdps)):
        dp_diff = copy.deepcopy(sdps[i])
        dp_diff["dTimestamp"] = sdps[i]['Timestamp'] - sdps[i-1]['Timestamp']
        dp_diff["dVal"] = sdps[i]['Maximum'] - sdps[i-1]['Maximum']
        dp_diff["dDays"] = dp_diff["dTimestamp"].total_seconds() / (60*60*24)
        dp_diff["dVal/dDays"] = dp_diff["dVal"] / dp_diff["dDays"]

        # Throw out the value when the month rolls over (the running total resets, so the rate goes negative)
        # This isn't a perfect way to capture this, but it should work most of the time
        if dp_diff["dVal/dDays"] < 0:
            continue

        dp_diffs.append(dp_diff)

    if not dp_diffs:
        log.info("Not enough datapoints received from CloudWatch to calculate a rate. Returning $0 as estimate.")
        return 0, 0, 0, 0, datetime.datetime.now(tz=datetime.timezone.utc)

    latest_value = sdps[-1]['Maximum']
    mean = sum(dp_diff["dVal/dDays"] for dp_diff in dp_diffs)/len(dp_diffs)
    # Sample standard deviation; the max() guards against dividing by zero when there is a single rate
    stddev = (sum((dp_diff["dVal/dDays"] - mean)**2 for dp_diff in dp_diffs)/max(len(dp_diffs)-1, 1))**0.5
    log.info("Calculated cost stats: current-spend=%5.4f rate-mean=%5.4f rate-stdev=%5.4f nsamples=%d" % (
        latest_value, mean, stddev, len(dp_diffs)
    ))

    return latest_value, mean, stddev, len(dp_diffs), sdps[-1]['Timestamp']


def handler(event, context):
    """
    Lambda function handler.
    Intended to be invoked by a scheduled event.
    """
    execution_start = datetime.datetime.utcnow()

    # Message formatting: https://api.slack.com/docs/messages/builder
    slack_message = ""
    slack_data = {
        "icon_emoji": ":moneybag:",
        "username": "AWS Price Checker",
        "channel": SLACK_CHANNEL
    }

    # We use the timestamp of the newest returned record as our current date for calculations
    latest_value, rate_avg, rate_stddev, n_data_points, start_date = get_current_spend_rate()

    days_in_month = calendar.monthrange(start_date.year, start_date.month)[1]
    days_remaining_in_month = days_in_month - start_date.day

    # Linear projection: spend so far plus the mean daily rate over the remaining days
    projected_spend = latest_value + days_remaining_in_month * rate_avg
    fraction_used = projected_spend/MONTHLY_BUDGET
    if not n_data_points:
        slack_message += UNKNOWN_STATUS.format(AWS_ENVIRONMENT_NAME)
    elif fraction_used < 1:
        slack_message += OK_STATUS.format(AWS_ENVIRONMENT_NAME, 1-fraction_used)
    else:
        slack_message += BAD_STATUS.format(AWS_ENVIRONMENT_NAME, fraction_used-1)

    slack_message += "\nThe spend this month in AWS as of {} is: *${:,.2f}* with *{:d}* days remaining".format(
        start_date.strftime("%Y/%m/%d"),
        latest_value,
        days_remaining_in_month
    )
    slack_message += "\nProjected total spend for this month is: *${:,.2f}* _(vs ${:,.2f} budget)_".format(
        projected_spend,
        MONTHLY_BUDGET
    )
    slack_message += "\nSpend rate is: *${:,.2f} +/- ${:,.2f} $/day* _(calculated from {} days of spending)_".format(
        rate_avg,
        rate_stddev,
        n_data_points
    )

    if COST_DASHBOARD is not None:
        slack_message += "\n%s" % (COST_DASHBOARD)

    slack_data["text"] = slack_message

    # Send to Slack
    if DEBUG:
        log.info("Skipping send to Slack. Message=%s" % (slack_data))
    else:
        r = requests.post(
            SLACK_WEBHOOK_URL,
            data=json.dumps(slack_data),
            headers={'Content-Type': 'application/json'}
        )
        log.info("Response from Slack: %s" % r.content)

        # Fail if we don't get back the expected response
        if r.content != b'ok':
            raise RuntimeError('Problem with response from Slack: "%s"' % (r.content))

    # Lambda's maximum timeout is 900 s (15 min)
    # https://docs.aws.amazon.com/lambda/latest/dg/limits.html
    log.info("Time elapsed (ms): %s" % ((datetime.datetime.utcnow() - execution_start).total_seconds()*1000))
    if context:
        # https://docs.aws.amazon.com/lambda/latest/dg/python-context-object.html#python-context-object-methods
        log.info("Time remaining (ms): %s" % context.get_remaining_time_in_millis())


if __name__ == "__main__":
    # More logging
    logging.basicConfig()

    # Just call it
    handler(None, None)
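
The projection arithmetic in the listing can be checked without AWS access. The following is a minimal sketch, not part of the Lambda itself: `project_month_end_spend` and `sample` are hypothetical names introduced for illustration, and the input is assumed to be a list of `(timestamp, cumulative_spend)` pairs rather than raw CloudWatch datapoints. It mirrors the approach above: compute a daily rate between consecutive points, drop negative rates (month rollover), and extend the latest value by the mean rate over the days left in the month.

```python
import calendar
import datetime


def project_month_end_spend(datapoints):
    """Project month-end spend from (timestamp, cumulative_spend) pairs.

    Hypothetical helper for illustration; returns None when no rate can be computed.
    """
    points = sorted(datapoints, key=lambda p: p[0])
    rates = []
    for (t0, v0), (t1, v1) in zip(points, points[1:]):
        days = (t1 - t0).total_seconds() / 86400
        rate = (v1 - v0) / days
        if rate < 0:
            # The running total reset, i.e. the month rolled over between these points
            continue
        rates.append(rate)
    if not rates:
        return None

    mean_rate = sum(rates) / len(rates)
    latest_ts, latest_value = points[-1]
    days_in_month = calendar.monthrange(latest_ts.year, latest_ts.month)[1]
    days_remaining = days_in_month - latest_ts.day
    return latest_value + days_remaining * mean_rate


# Made-up sample data: the total resets between June 30 and July 1
sample = [
    (datetime.datetime(2018, 6, 30), 290.0),
    (datetime.datetime(2018, 7, 1), 10.0),
    (datetime.datetime(2018, 7, 2), 20.0),
    (datetime.datetime(2018, 7, 3), 30.0),
]
print("Projected month-end spend: ${:,.2f}".format(project_month_end_spend(sample)))
```

With this sample the June-to-July pair is discarded, the two remaining rates are both $10/day, and 28 days remain after July 3, so the projection is $30 + 28 x $10 = $310.00.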

To build: