- AWS

- Kubernetes

- Minikube

- Kubectl, manage Pods, Nodes

- Helm

- Pods

- Pod Ports

- Service, Service Types

- Deployment

- StatefulSet

- DaemonSet

- Networking Model

- DNS, Services, Pods domains

- Ingress

- Storage, Persistent Volumes, Storage Classes, Reclaim Policy

- Volumes Types

- Finalizers

- Configuration, ConfigMaps

- Secrets

- Namespaces

- Multi Tenancy

- Images, imagePullPolicy, Private registries

- Helm

- Kustomize

- Liveness, Readiness and Startup Probes

- Operators Pattern

- Sidecars

- Endpoints

- Logging Architecture

- System components

- Metrics

- Applications

- Examples

- Admission Controllers

- Kubernetes Secrets Management

- Terraform

-

-

Save ulexxander/357b4d45344068d232b3ae7a96963660 to your computer and use it in GitHub Desktop.

AWS Cheat Sheet - Amazon Web Services Quick Guide [2023]

Accept invite via URL with token -> Set password -> Set MFA

- AWS Apps

- US West (N. California) AWS Management Console

- Tag Editor - Resource Groups Management Console - allows to search all resources by particular tag

An AMI contains the software configuration (operating system (OS), application server, and applications) required to launch your instance.

- Instance lifecycle - Amazon Elastic Compute Cloud

- aws_instance | Resources | hashicorp/aws | Terraform Registry

pending -> running -> stopping -> stopped -> shutting-down -> terminated

Only running is billed.

As soon as the status of an instance changes to shutting-down or terminated, you stop incurring charges for that instance.

After you terminate an instance, it remains visible in the console for a short while, and then the entry is automatically deleted.

You can't connect to or recover a terminated instance.

- Virtual private clouds (VPC) - Amazon Virtual Private Cloud

- Default VPCs - Amazon Virtual Private Cloud

- What is AWS VPC & Peering - AWS VPC Tutorial - Intellipaat

- ipv4 - Usage of 192.168.xxx, 172.xxx and 10.xxx in private networks - Network Engineering Stack Exchange

- CIDR.xyz - An interactive IP address and CIDR range visualizer

A virtual private cloud (VPC) is a virtual network dedicated to your AWS account. It is logically isolated from other virtual networks in the AWS Cloud. You can launch your AWS resources, such as Amazon EC2 instances, into your VPC.

- When you create a VPC, you must specify a range of IPv4 addresses for the VPC in the form of a CIDR block. For example,

10.0.0.0/16. - A VPC spans all of the Availability Zones in the Region.

- The allowed block size is between a

/16netmask (65,536 IP addresses) and/28netmask (16 IP addresses).

enable_dns_hostnames- Whether or not the VPC has DNS hostname support (enabled on default VPC)

Subnets for your VPC - Amazon Virtual Private Cloud

- Range of IP addresses in your VPC. You can launch AWS resources into your subnets.

- When you create a subnet, you specify the IPv4 CIDR block for the subnet, which is a subset of the VPC CIDR block.

- Each subnet must reside entirely within one Availability Zone and cannot span zones.

- By launching instances in separate Availability Zones, you can protect your applications from the failure of a single zone.

- The first four IP addresses and the last IP address in each subnet CIDR block are not available for your use, and they cannot be assigned to a resource

- Public subnet: The subnet has a direct route to an internet gateway. Resources in a public subnet can access the public internet.

- Private subnet: The subnet does not have a direct route to an internet gateway. Resources in a private subnet require a NAT device to access the public internet.

- VPN-only subnet: The subnet has a route to a Site-to-Site VPN connection through a virtual private gateway. The subnet does not have a route to an internet gateway.

Route tables - Amazon Virtual Private Cloud

- Contains a set of rules, called routes, that determine where network traffic from your subnet or gateway is directed.

- A subnet can only be associated with one route table at a time, but you can associate multiple subnets with the same subnet route table.

- Each route in a table specifies a destination and a target.

0.0.0.0/0 (destination) -> igw-id (target) - CIDR blocks for IPv4 and IPv6 are treated separately. Route with a destination CIDR of

0.0.0.0/0does not automatically include all IPv6 addresses. - When you create a VPC, it automatically has a main route table. When a subnet does not have an explicit routing table associated with it, the main routing table is used by default.

Internet Gateway - Amazon Virtual Private Cloud

- Enables resources in your public subnets (such as EC2 instances) to connect to the internet if the resource has a public IPv4 address or an IPv6 address.

- Similarly, resources on the internet can initiate a connection to resources in your subnet using the public IPv4 address or IPv6 address.

- Virtual Private Gateway is alternative for VPN solutions.

NAT gateways - Amazon Virtual Private Cloud

A NAT gateway is a Network Address Translation (NAT) service. You can use a NAT gateway so that instances in a private subnet can connect to services outside your VPC but external services cannot initiate a connection with those instances.

- The NAT gateway replaces the source IP address of the instances with the IP address of the NAT gateway.

- For a public NAT gateway, this is the elastic IP address of the NAT gateway.

- For a private NAT gateway, this is the private IPv4 address of the NAT gateway.

- When sending response traffic to the instances, the NAT device translates the addresses back to the original source IP address.

- Public – (Default) Instances in private subnets can connect to the internet through a public NAT gateway, but cannot receive unsolicited inbound connections from the internet.

- Private – Instances in private subnets can connect to other VPCs or your on-premises network through a private NAT gateway. You can route traffic from the NAT gateway through a transit gateway or a virtual private gateway.

Elastic network interfaces - Amazon Elastic Compute Cloud

- An elastic network interface is a logical networking component in a VPC that represents a virtual network card.

- You can create a network interface, attach it to an instance, detach it from an instance, and attach it to another instance.

- It can include: primary private IPv4 address from the IPv4 address range of your VPC, one or more secondary private IPv4 addresses from the IPv4 address range of your VPC, and so on...

- Control traffic to resources using security groups - Amazon Virtual Private Cloud

- aws_security_group | Resources | hashicorp/aws | Terraform Registry

A security group controls the traffic that is allowed to reach and leave the resources that it is associated with. For example, after you associate a security group with an EC2 instance, it controls the inbound and outbound traffic for the instance.

Connect to an EKS Private Endpoint with AWS ClientVPN | by Awani Alero | AWS Tip

A Remote Access VPN allows individual users to securely connect to a private network remotely, such as at home or while traveling. Remote access VPNs typically use software on a user’s device, such as a laptop or smartphone, to establish a secure connection with the private network.

A Site-to-Site VPN, on the other hand, connects entire networks to each other, allowing them to communicate as if they were on the same local network. Site-to-site VPNs typically use specialized hardware, such as a VPN gateway, to establish a secure connection between the networks. This type of VPN is commonly used by organizations that have multiple office locations or want to connect their cloud infrastructure to their on-premises data centers.

AWS Client VPN is a managed client-based OpenVPN Service that enables you to securely access your private AWS resources remotely using their Private IPs and hostnames.

Difference Between Virtual Private Gateway and Transit Gateway | Difference Between

A Virtual Private Gateway (VGW) is nothing but a VPN connector on the AWS side of the Site-to-Site VPN connection. It is a managed gateway endpoint for your VPC responsible for hybrid IT connectivity using VPN and AWS Direct Connect. The VGW is a logical network device that allows you to create an IPSec VPN tunnel from your VPC to your on-premises environment.

AWS transit gateway is a network transit hub that connects multiple VPCs and on-premise networks via virtual private networks or Direct Connect links.

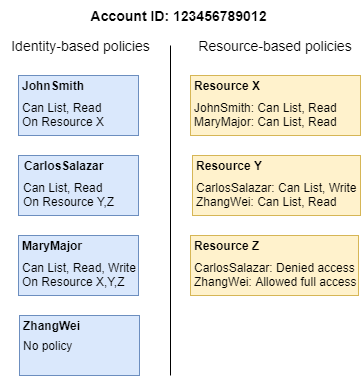

- Identity-based policies and resource-based policies - AWS Identity and Access Management

- Policy evaluation logic - AWS Identity and Access Management

Identity-based policies are attached to an IAM user, group, or role. These policies let you specify what that identity can do (its permissions). For example, you can attach the policy to the IAM user named John, stating that he is allowed to perform the Amazon EC2 RunInstances action.

Resource-based policies are attached to a resource. For example, you can attach resource-based policies to Amazon S3 buckets, Amazon SQS queues, VPC endpoints, and AWS Key Management Service encryption keys.

Resource-level permissions refer to the ability to use ARNs to specify individual resources in a policy. Resource-based policies are supported only by some AWS services.

aws sts get-caller-identity

aws iam get-role --role-name my-role

aws iam list-attached-role-policies --role-name my-role

aws iam get-policy --policy-arn - Certificate Manager – AWS Certificate Manager – Amazon Web Services

- aws_acm_certificate | Resources | hashicorp/aws | Terraform Registry

Use AWS Certificate Manager (ACM) to provision, manage, and deploy public and private SSL/TLS certificates for use with AWS services and your internal connected resources.

- Amazon Route 53 | DNS Service | AWS

- aws_route53_zone | Resources | hashicorp/aws | Terraform Registry

- aws_route53_record | Resources | hashicorp/aws | Terraform Registry

- Routing traffic to an ELB load balancer - Amazon Route 53

Amazon Route 53 is a highly available and scalable Domain Name System (DNS) web service. Route 53 connects user requests to internet applications running on AWS or on-premises.

- shared file storage for use with compute instances in the AWS cloud and on premise servers.

- high aggregate throughput to thousands of clients simultaneously.

- ReadWriteMany access mode.

- use when it is difficult to estimate the amount of storage the application.

- more costly

- won’t support some applications such as databases that require block storage

- doesn’t support any backup mechanism we need to setup backup manually

- doesn’t support snapshots.

- cloud block storage service that provides direct access from a single EC2 instance to a dedicated storage volume.

- high available and low-latency block storage solution.

- ReadWriteOnce access mode.

- automatic scaling is not available, but can scaled up down based on the need

- cost-efficient

- support all type of application.

- provides point-in-time snapshots of EBS volumes, which are backed up to Amazon S3 for long-term durability.

- provides the ability to copy snapshots across AWS regions, enabling geographical expansion, data center migration, and disaster recovery

- What is an Application Load Balancer? - Elastic Load Balancing

- Network Traffic Distribution – Elastic Load Balancing – Amazon Web Services - comparisons

- Create an HTTPS listener for your Application Load Balancer - Elastic Load Balancing

Automatically distributes your incoming traffic across multiple targets, such as EC2 instances, containers, and IP addresses, in one or more Availability Zones. It monitors the health of its registered targets, and routes traffic only to the healthy targets. Serves as the single point of contact for clients.

What is Amazon Elastic Container Registry? - Amazon ECR

AWS managed container image registry service.

- Registry - provided to each AWS account; you can create one or more repositories in your registry and store images in them.

- Authorization token

- Repository - contains your Docker images, Open Container Initiative (OCI) images, and OCI compatible artifacts.

- Repository policy -control access to your repositories and the images within them with repository policies.

- Image - push and pull container images to your repositories.

[ECR] [request]: Repository create on push · Issue #853 · aws/containers-roadmap

By design, ECR has not had create on push as a number of features and configuration options exist for repositories.

- CloudWatch Logs Insights query syntax - Amazon CloudWatch Logs

- Set up Fluent Bit as a DaemonSet to send logs to CloudWatch Logs - Amazon CloudWatch

With either method, the IAM role that is attached to the cluster nodes must have sufficient permissions. For more information about the permissions required to run an Amazon EKS cluster, see Amazon EKS IAM Policies, Roles, and Permissions in the Amazon EKS User Guide.

- Network load balancing on Amazon EKS - Amazon EKS

- How to create EKS Cluster using Terraform MODULES? - DevOps by Example

- Introduction - EKS Best Practices Guides

- Creating or updating a kubeconfig file for an Amazon EKS cluster - Amazon EKS

aws eks update-kubeconfig --region region-code --name my-cluster - Organizing Cluster Access Using kubeconfig Files | Kubernetes

- kubernetes-sigs/aws-iam-authenticator: A tool to use AWS IAM credentials to authenticate to a Kubernetes cluster

- Resolve the Kubernetes object access error in Amazon EKS

- How to add a user to an AWS EKS cluster

- EKS: Your current user or role does not have access to Kubernetes objects on this EKS cluster. | Diego DevOps Blog

Failed to resolve Your current user or role does not have access to Kubernetes objects on this EKS cluster. :(((

Two different authorization systems are in use. The AWS Management Console uses IAM. The EKS cluster uses the Kubernetes RBAC system (from the Kubernetes website). The cluster’s aws-auth ConfigMap associates IAM identities (users or roles) with Kubernetes cluster RBAC identities.

IAM roles for Amazon EC2 - Amazon Elastic Compute Cloud

Applications can securely make API requests from your instances, without requiring you to manage the security credentials that the applications use. Instead of creating and distributing your AWS credentials, you can delegate permission to make API requests using IAM roles as follows:

- Create an IAM role.

- Define which accounts or AWS services can assume the role.

- Define which API actions and resources the application can use after assuming the role.

- Specify the role when you launch your instance, or attach the role to an existing instance.

- Have the application retrieve a set of temporary credentials and use them.

Amazon EC2 uses an instance profile as a container for an IAM role. When you create an IAM role using the IAM console, the console creates an instance profile automatically and gives it the same name as the role to which it corresponds. If you use the Amazon EC2 console to launch an instance with an IAM role or to attach an IAM role to an instance, you choose the role based on a list of instance profile names.

-

Configuring a Kubernetes service account to assume an IAM role - Amazon EKS

-

Amazon EKS supports IAM Roles for Service Accounts (IRSA) that allows cluster operators to map AWS IAM Roles to Kubernetes Service Accounts.

-

This provides fine-grained permission management for apps that run on EKS and use other AWS services.

-

It works via IAM OpenID Connect Provider (OIDC) that EKS exposes, and IAM Roles must be constructed with reference to the IAM OIDC Provider (specific to a given EKS cluster), and a reference to the Kubernetes Service Account it will be bound to.

-

Once an IAM Role is created, a service account should include the ARN of that role as an annotation (

eks.amazonaws.com/role-arn).

AWS IRSA Role:

module "load_balancer_controller_irsa_role" {

source = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"

role_name = "load_balancer_controller"

attach_load_balancer_controller_policy = true

oidc_providers = {

ex = {

provider_arn = module.eks.oidc_provider_arn

namespace_service_accounts = ["kube-system:aws-load-balancer-controller"]

}

}

tags = local.tags

}

Kubernetes Service Account:

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/name: aws-load-balancer-controller

name: aws-load-balancer-controller

namespace: kube-system

annotations:

eks.amazonaws.com/role-arn: arn:aws:iam::814556414566:role/load_balancer_controller

Configuring pods to use a Kubernetes service account - Amazon EKS

kubectl describe pod my-app-6f4dfff6cb-76cv9 | grep AWS_WEB_IDENTITY_TOKEN_FILE:

AWS_WEB_IDENTITY_TOKEN_FILE: /var/run/secrets/eks.amazonaws.com/serviceaccount/token

The kubelet requests and stores the token on behalf of the pod.

- cert-manager adds certificates and certificate issuers as resource types in Kubernetes clusters, and simplifies the process of obtaining, renewing and using those certificates.

- It can issue certificates from a variety of supported sources, including Let's Encrypt, HashiCorp Vault, and Venafi as well as private PKI.

- It will ensure certificates are valid and up to date, and attempt to renew certificates at a configured time before expiry.

DNS01 - cert-manager Documentation

Cert manager does not find the record using default nameserver.

E0424 12:02:04.047456 1 sync.go:190] cert-manager/challenges "msg"="propagation check failed" "error"="NS ns-1536.awsdns-00.co.uk.:53 returned REFUSED for _acme-challenge.eks.limitlex.io." "dnsName"="eks.limitlex.io" "resource_kind"="Challenge" "resource_name"="eks-limitlex-io-648rj-197652701-1058046404" "resource_namespace"="ingress" "resource_version"="v1" "type"="DNS-01"

Solution: explicitly set nameservers that have record already propagated:

extraArgs = ["--dns01-recursive-nameservers=1.1.1.1:53,8.8.8.8:53"]

- Welcome - AWS Load Balancer Controller

- Ingress annotations - AWS Load Balancer Controller

alb.ingress.kubernetes.io/schemealb.ingress.kubernetes.io/target-typealb.ingress.kubernetes.io/load-balancer-namealb.ingress.kubernetes.io/inbound-cidrs

- Service annotations - AWS LoadBalancer Controller

AWS Load Balancer Controller is a controller to help manage Elastic Load Balancers for a Kubernetes cluster.

- It satisfies Kubernetes Ingress resources by provisioning Application Load Balancers.

- It satisfies Kubernetes Service resources by provisioning Network Load Balancers.

- The controller watches for ingress events from the API server.

- An ALB (ELBv2) is created in AWS for the new ingress resource. This ALB can be internet-facing or internal.

- Target Groups are created in AWS for each unique Kubernetes service described in the ingress resource.

- Listeners are created for every port detailed in your ingress resource annotations. When no port is specified, sensible defaults (

80or443) are used. - Rules are created for each path specified in your ingress resource.

- Instance mode (default) - traffic starts at the ALB and reaches the Kubernetes nodes through each service's NodePort. This means that services referenced from ingress resources must be exposed by

type:NodePortin order to be reached by the ALB. - IP mode - Ingress traffic starts at the ALB and reaches the Kubernetes pods directly. CNIs must support directly accessible POD ip via secondary IP addresses on ENI.

- Amazon VPC CNI plugin - Amazon EKS - is deployed on each Amazon EC2 node in your Amazon EKS cluster. The add-on creates elastic network interfaces and attaches them to your Amazon EC2 nodes. The add-on also assigns a private IPv4 or IPv6 address from your VPC to each pod and service.

AWS manual way guide using combinations of CLIs: eksctl / aws-cli+kubectl and helm / kubectl:

Installing the AWS Load Balancer Controller add-on - Amazon EKS

Alternative flow using Terraform and kubectl:

- Install

cert-managerusingkubectl - Create IRSA role for the controller using

terraform-aws-iam/iam-role-for-service-accounts-eksmodule, example: https://github.com/terraform-aws-modules/terraform-aws-iam/blob/v5.13.0/examples/iam-role-for-service-accounts-eks/main.tf#L184- NOTE: replace source in the example with

terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks

- NOTE: replace source in the example with

- Obtain IAM module output

iam_role_arn - Create

ServiceAccount/aws-load-balancer-controllerKubernetes manifest with annotationeks.amazonaws.com/role-arnset toiam_role_arnfrom Terraform. - Download controller manifest

- Important: comment out

ServiceAccountin the manifest, we already have it with correct role ARN! - Important:

your-cluster-namewith your cluster name in the manifest, otherwise controller won't discover subnets! - Download and apply IngressClass.

Better automated flow using only Terraform:

- Create IRSA role for the controller using

terraform-aws-iam/iam-role-for-service-accounts-eksmodule - Deploy AWS Load Balancer Controller as a

helm_releaseresource in Terraform usinghelmprovider:

resource "helm_release" "aws_load_balancer_controller" {

name = "aws-load-balancer-controller"

repository = "https://aws.github.io/eks-charts"

chart = "aws-load-balancer-controller"

version = "1.4.8"

namespace = "kube-system"

# ...

- Certificate Discovery - AWS LoadBalancer Controller

- Setting up Ingress on EKS with TLS Certificate from ACM

- AWS EKS Kubernetes ALB Ingress Service Enable SSL - STACKSIMPLIFY

- TLS certificates for ALB Listeners can be automatically discovered with hostnames from Ingress resources if the alb.ingress.kubernetes.io/certificate-arn annotation is not specified.

- The controller will attempt to discover TLS certificates from the tls field in Ingress and host field in Ingress rules.

- Ensure subnects have tags configured:

- Subnet Discovery - AWS Load Balancer Controller

kubernetes.io/role/internal-elborkubernetes.io/role/elbkubernetes.io/cluster/${cluster-name}

- Ensure configured cluster name in the controller manifest is correct!

Replace

your-cluster-namein theDeploymentspecsection of the file with the name of your cluster by replacing`my-cluster`with the name of your cluster.

Log message: InvalidParameter: 1 validation error(s) found.\n- minimum field value of 1, CreateTargetGroupInput.Port.\n

Issue #1695 · kubernetes-sigs/aws-load-balancer-controller

- Instance mode (default) will require at least NodePort service type. With NodePort service type, kube-proxy will open a port on your worker node instances to which the ALB can route traffic.

- Use IP target type instead:

alb.ingress.kubernetes.io/target-type: ip

- Amazon EBS CSI driver - Amazon EKS

- kubernetes-sigs/aws-ebs-csi-driver: CSI driver for Amazon EBS

- Persistent storage for Kubernetes | AWS Storage Blog <- very great explanation!

Allows Amazon EKS clusters to manage the lifecycle of Amazon EBS volumes for persistent volumes.

- EKS add-on

eksctl create addonaws eks create-addon- `resource "aws_eks_addon" "csi_driver" { addon_name = "aws-ebs-csi-driver" }

- Helm Chart: https://kubernetes-sigs.github.io/aws-ebs-csi-driver

Auto Scaling groups - Amazon EC2 Auto Scaling

An Auto Scaling group contains a collection of EC2 instances that are treated as a logical grouping for the purposes of automatic scaling and management. An Auto Scaling group also lets you use Amazon EC2 Auto Scaling features such as health check replacements and scaling policies.

Karpenter is an open-source node provisioning project built for Kubernetes.

-

Watching for pods that the Kubernetes scheduler has marked as unschedulable

-

Evaluating scheduling constraints (resource requests, nodeselectors, affinities, tolerations, and topology spread constraints) requested by the pods

-

Provisioning nodes that meet the requirements of the pods

-

Removing the nodes when the nodes are no longer needed

-

The Provisioner sets constraints on the nodes that can be created by Karpenter and the pods that can run on those nodes.

-

Node Templates enable configuration of AWS specific settings. Each provisioner must reference an AWSNodeTemplate using

spec.providerRef. Multiple provisioners may point to the same AWSNodeTemplate.

Constraints you can request include:

- Resource requests: Request that certain amount of memory or CPU be available.

- Node selection: Choose to run on a node that is has a particular label (

nodeSelector). - Node affinity: Draws a pod to run on nodes with particular attributes (affinity).

- Topology spread: Use topology spread to help ensure availability of the application.

- Pod affinity/anti-affinity: Draws pods towards or away from topology domains based on the scheduling of other pods.

Reason: module terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks requires Karpenter to provide whitelisted resource tag when managing EC2 instances.

# Default karpenter_tag_key is "karpenter.sh/discovery"

condition {

test = "StringEquals"

variable = "ec2:ResourceTag/${var.karpenter_tag_key}"

values = [var.karpenter_controller_cluster_id]

}

Solution: add to AWSNodeTemplate:

spec:

tags:

karpenter.sh/discovery: ${module.eks.cluster_name}Failed to resolve, probably all pods need to be covered by PDB.

2023-03-27T10:11:04.410Z ERROR controller.consolidation consolidating cluster, tracking PodDisruptionBudgets, no matches for kind "PodDisruptionBudget" in version "policy/v1beta1" {"commit": "5d4ae35-dirty"}

- Data Encryption and Secrets Management - EKS Best Practices Guides

- How to use AWS Secrets Configuration Provider with Kubernetes Secret Store CSI Driver

- Integrating Secrets Manager secrets with Kubernetes Secrets Store CSI Driver

Kubernetes secrets are used to store sensitive information, such as user certificates, passwords, or API keys. They are persisted in etcd as base64 encoded strings. On EKS, the EBS volumes for etcd nodes are encrypted with EBS encryption. A pod can retrieve a Kubernetes secrets objects by referencing the secret in the podSpec.

- EKS Pricing

- $0.10 per hour for each cluster

- EC2 Pricing

- On-Demand

- t3.small $0.0248 2 2 GiB

- t3.medium $0.0496 2 4 GiB

- t3.large $0.0992 2 8 GiB

- On-Demand

- EBS

- Volumes

- Free Tier includes 30 GB of storage, 2 million I/Os, and 1 GB of snapshot storage

- General Purpose SSD (gp3) - Storage $0.096/GB-month

- General Purpose SSD (gp2) Volumes $0.12 per GB-month of provisioned storage

- Snapshots (storage, restore)

- Standard: $0.05/GB-month, Free

- Archive: $0.0125/GB-month, $0.03 per GB of data retrieved

- Volumes

- S3

- Standard (General purpose storage for any type of data) - First 50 TB / Month $0.023 per GB

- Standard Infrequent Access (For long lived but infrequently accessed data) - $0.0125 per GB

- Glacier Instant Retrieval (For long-lived archive data accessed once a quarter) - $0.004 per GB

- Glacier Flexible Retrieval (Formerly S3 Glacier, long-term backups and archives with retrieval option from 1 minute to 12 hours) - $0.0036 per GB

- Glacier Deep Archive (long-term data archiving that is accessed once or twice in a year and can be restored within 12 hours) - $0.00099 per GB

- ELB Pricing

- Application Load Balancer

- $0.0252 per hour (or partial hour)

- $0.008 per LCU-hour (or partial hour)

- Free Tier receives 750 hours per month shared between Classic and ALB; 15 GB of data processing for Classic; and 15 LCUs for ALB.

- LCU

- New connections: newly established connections per second.

- Active connections: active connections per minute.

- Processed bytes: bytes processed in GBs for HTTP(S) requests and responses.

- Rule evaluations: The product of the number of rules processed by your load balancer and the request rate. The first 10 processed rules are free (Rule evaluations = Request rate * (Number of rules processed - 10 free rules).

- Network Load Balancer

- $0.0252 per hour (or partial hour)

- $0.006 per LCU-hour (or partial hour)

- No Free Tier.

- LCU

- New connections or flows: newly established connections/flows per second. Many technologies (HTTP, WebSockets, etc.) reuse TCP connections for efficiency.

- Active connections or flows: Peak concurrent connections/flows, sampled minutely.

- Processed bytes: bytes processed in GBs.

- LCU are charged only on the dimension with the highest usage.

- Application Load Balancer

- RDS Pricing

- PostgreSQL

- Single AZ db.t3.medium $0.095 per hour

- Multi AZ standby db.t3.medium $0.19 per hour

- General Purpose SSD (gp2) $0.138 per GB-month

- MySQL

- Single AZ db.t3.medium $0.088 per hour

- Multi AZ standby db.t3.medium $0.176 per hour

- General Purpose SSD (gp2) $0.138 per GB-month

- PostgreSQL

- ECR Pricing

- Free tier 500 MB per month of storage for private repositories for one year.

- $0.10 per GB / month

- CloudWatch Pricing

- Free Tier 5 GB Data (ingestion, archive storage, and data scanned by Logs Insights queries)

- Logs

- Collect (Data Ingestion) $0.67 per GB

- Store (Archival) $0.033 per GB

- Analyze (Logs Insights queries) $0.0067 per GB of data scanned

- Detect and Mask (Data Protection) $0.12 per GB of data scanned

- KMS Pricing

- Each costs $1/month (prorated hourly)

- If you enable automatic key rotation, each newly generated backing key costs an additional $1/month (prorated hourly).

- AWS Secrets Manager Pricing

- $0.40 per secret per month.

- Logging for Amazon EKS - AWS Prescriptive Guidance

- Introduction | Data on EKS

- AWS Observability Best Practices

- Add optional monitoring with Grafana

- Cloudcraft – Draw AWS diagrams

helm repo add grafana-charts https://grafana.github.io/helm-charts

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

Which tech stack(s) is AWS built on? : aws

I think the actual interesting thing is how much of AWS is built on AWS. AWS relies a lot on their "primitives" to build higher level services Consider RDS, which is really just EC2 + EBS + S3 + Route53 Or that one of the largest single users of EC2 is ELB (Every ELB is an EC2 instance under the hood)

- Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications.

- Kubernetes coordinates a highly available cluster of computers that are connected to work as a single unit.

- Kubernetes automates the distribution and scheduling of application containers across a cluster in a more efficient way.

A Kubernetes cluster consists of two types of resources:

- The Control Plane coordinates the cluster

- Nodes are VM or a physical computer that serves as a worker machine in a Kubernetes cluster. Each node has a Kubelet, which is an agent for managing the node and communicating with the Kubernetes control plane using API.

A Kubernetes cluster that handles production traffic should have a minimum of three nodes.

A Pod is a Kubernetes abstraction that represents a group of one or more application containers, and some shared resources for those containers: Volumes, unique cluster IP address, container image version or specific ports to use. Nodes are always co-located and co-scheduled, and run in a shared context on the same Node.

A Node is a worker machine in Kubernetes and may be either a virtual or a physical machine. Each Node is managed by the control plane. A Node can have multiple pods, and the Kubernetes control plane automatically handles scheduling the pods across the Nodes in the cluster.

A Service in Kubernetes is an abstraction which defines a logical set of Pods and a policy by which to access them. Services enable a loose coupling between dependent Pods. The set of Pods targeted by a Service is usually determined by a LabelSelector. Routes traffic across a set of Pods, handles discovery and routing.

Rolling updates allow Deployments update to take place with zero downtime by incrementally updating Pods instances with new ones.

- Which Kubernetes apiVersion Should I Use?

- How to have multiple object types in a single openshift yaml template?

- kubernetes/minikube: Run Kubernetes locally

- Getting Minikube on WSL2 Ubuntu working

- minikube start | minikube

- Basic controls | minikube

minikube start

minikube dashboard

minikube service hello-minikube

minikube stop

minikube delete- kubectl Cheat Sheet | Kubernetes

- Use Port Forwarding to Access Applications in a Cluster | Kubernetes (TCP ports only)

kubectl apply -f nginx.yml

kubectl delete -f nginx.yml

# Creates a proxy server or application-level gateway between localhost and the Kubernetes API server.

kubectl proxy

# List all resources.

kubectl list allHow to restart Kubernetes Pods with kubectl

- Restarting Kubernetes Pods by changing the number of replicas with

kubectl scalecommand - Downtimeless restarts with

kubectl rollout restartcommand - Automatic restarts by updating the Pod’s environment variable

- Restarting Pods by deleting them

kubectl rollout restart deploy <name>kubectl delete pods <pod> --grace-period=0 --forceBulk deletion of Kubernetes resources - Octopus Deploy

kubectl delete pods --all

Safely Drain a Node | Kubernetes

kubectl drain --ignore-daemonsets --delete-emptydir-data <node>

kubectl delete node <node>How to remove broken nodes in Kubernetes - Stack Overflow

Finally I understood that nodes stuck at deletion due to finalizer

# Remove finalizer

kc edit node ip-10-0-20-124.us-west-1.compute.internal

kc delete node ip-10-0-20-124.us-west-1.compute.internalAccessing logs from Init Containers | Kubernetes

kubectl logs <pod-name> -c <init-container-2>

- Helm | Quickstart Guide

- Artifact Hub

- A Chart is a Helm package. It contains all of the resource definitions necessary to run an application, tool, or service inside of a Kubernetes cluster.

- A Repository is the place where charts can be collected and shared.

- A Release is an instance of a chart running in a Kubernetes cluster. One chart can often be installed many times into the same cluster. And each time it is installed, a new release is created.

- Helm installs charts into Kubernetes, creating a new release for each installation. And to find new charts, you can search Helm chart repositories.

Sprig Function Documentation | sprig

.bashrc

alias hm="helm"

. ~/scripts/helm-autocomplete.sh

complete -o default -F __start_helm hmhelm repo add bitnami https://charts.bitnami.com/bitnami

helm search repo bitnami

helm repo update

helm install bitnami/mysql --generate-name

helm show chart bitnami/mysql

helm show all bitnami/mysql- Pods are the smallest deployable units of computing that you can create and manage in Kubernetes. Group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. A Pod's contents are always co-located and co-scheduled, and run in a shared context.

- Pods that run a single container. The "one-container-per-Pod" model is the most common Kubernetes use case; in this case, you can think of a Pod as a wrapper around a single container; Kubernetes manages Pods rather than managing the containers directly.

- Pods that run multiple containers that need to work together. A Pod can encapsulate an application composed of multiple co-located containers that are tightly coupled and need to share resources. These co-located containers form a single cohesive unit of service—for example, one container serving data stored in a shared volume to the public, while a separate sidecar container refreshes or updates those files. The Pod wraps these containers, storage resources, and an ephemeral network identity together as a single unit.

- As well as application containers, a Pod can contain init containers that run during Pod startup. You can also inject ephemeral containers for debugging if your cluster offers this.

- Pod Ports Reference | Kubernetes

- kubernetes - Container port pods vs container port service - Stack Overflow

containerPortas part of the pod definition is only informational purposes. Ultimately if you want to expose this as a service within the cluster or node then you have to create a service.- Defining a Service | Kubernetes

- Port definitions in Pods have names, and you can reference these names in the

targetPortattribute of a Service.

# Pod

ports:

- containerPort: 80

name: http-web-svc

# Service

ports:

- name: name-of-service-port

protocol: TCP

port: 80

targetPort: http-web-svc- Service is a method for exposing a network application that is running as one or more Pods in your cluster.

- You don't need to modify your existing application to use an unfamiliar service discovery mechanism. You can run code in Pods, whether this is a code designed for a cloud-native world, or an older app you've containerized. You use a Service to make that set of Pods available on the network so that clients can interact with it.

- The set of Pods targeted by a Service is usually determined by a selector that you define.

Publishing Services (ServiceTypes) | Kubernetes

ClusterIP: Exposes the Service on a cluster-internal IP.NodePort: Exposes the Service on each Node's IP at a static port (the NodePort).LoadBalancer: Exposes the Service externally using a cloud provider's load balancer.ExternalName: Maps the Service to the contents of the externalName field (e.g.foo.bar.example.com), by returning a CNAME record with its value. No proxying of any kind is set up.

Deployments | Kubernetes A Deployment provides declarative updates for Pods and ReplicaSets.

.spec.strategy specifies the strategy used to replace old Pods by new ones. .spec.strategy.type can be "Recreate" or "RollingUpdate". "RollingUpdate" is the default value.

Recreate- all existing Pods are killed before new ones are created.RollingUpdate- rolling update fashion, specifymaxUnavailable(maximum number of Pods that can be unavailable) andmaxSurge(maximum number of Pods that can be created over the desired number) to control the rolling update process.

StatefulSets | Kubernetes Workload API object used to manage stateful applications. Manages the deployment and scaling of a set of Pods, and provides guarantees about the ordering and uniqueness of these Pods. Unlike a Deployment, a StatefulSet maintains a sticky identity for each of their Pods. Each has a persistent identifier that it maintains across any rescheduling.

- Stable, unique network identifiers.

- Stable, persistent storage.

- Ordered, graceful deployment and scaling.

- Ordered, automated rolling updates.

DaemonSet | Kubernetes A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. As nodes are added to the cluster, Pods are added to them. As nodes are removed from the cluster, those Pods are garbage collected. Deleting a DaemonSet will clean up the Pods it created.

- Every Pod in a cluster gets its own unique cluster-wide IP address.

- Pods can communicate with all other pods on any other node without NAT.

- Agents on a node (e.g. system daemons, kubelet) can communicate with all pods on that node.

Kubernetes 1.26 supports Container Network Interface (CNI) plugins for cluster networking. You must use a CNI plugin that is compatible with your cluster and that suits your needs.

DNS for Services and Pods | Kubernetes

- Kubernetes creates DNS records for Services and Pods. You can contact Services with consistent DNS names instead of IP addresses.

- A DNS query may return different results based on the namespace of the Pod making it.

- kubernetes/dns specification.md

- Kubernetes assigns Service an IP address - the cluster IP, that is used by the virtual IP address mechanism.

- "Normal" (not headless) Services are assigned DNS A and/or AAAA records, depending on the IP family or families of the Service, with a name of the form

my-svc.my-namespace.svc.cluster-domain.example. This resolves to the cluster IP of the Service. - Headless Services (without a cluster IP) Services are also assigned DNS A and/or AAAA records, with a name of the form

my-svc.my-namespace.svc.cluster-domain.example. Unlike normal Services, this resolves to the set of IPs of all of the Pods selected by the Service. Clients are expected to consume the set or else use standard round-robin selection from the set. - Sometimes you don't need load-balancing and a single Service IP. In this case, you can create what are termed "headless" Services, by explicitly specifying "None" for the cluster IP (

.spec.clusterIP).

- Form:

pod-ip-address.my-namespace.pod.cluster-domain.example - Example:

172-17-0-3.default.pod.cluster.local - When a Pod is created, its hostname (as observed from within the Pod) is the Pod's

metadata.namevalue. Can be changed withhostnameandsubdomainfields.

kubectl get services kube-dns --namespace=kube-system# apt install dnsutils

root@postgresql-58d5489454-4kvmm:/ nslookup mysql

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: mysql.pay.svc.cluster.local

Address: 10.244.0.31-

Set up Ingress on Minikube with the NGINX Ingress Controller | Kubernetes

-

Provides load balancing, SSL termination and name-based virtual hosting.

-

Exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

-

An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional frontends to help handle the traffic.

-

An Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a service of type

Service.Type=NodePortorService.Type=LoadBalancer. -

You must have an Ingress controller to satisfy an Ingress. Only creating an Ingress resource has no effect.

Each HTTP rule contains the following information:

- An optional host.

- A list of paths, each of which has an associated backend defined with a

service.nameand aservice.port.nameorservice.port.number. - A backend is a combination of Service and port names.

ImplementationSpecific: With this path type, matching is up to theIngressClass.Exact: Matches the URL path exactly and with case sensitivity.Prefix: Matches based on a URL path prefix split by/. Matching is case sensitive and done on a path element by element basis.

Hosts can be precise matches (for example foo.bar.com) or a wildcard (for example *.foo.com).

- Single Service - default backend with no rules.

- Simple fanout - route traffic from a single IP address to more than one Service, based on the HTTP URI being requested (

pathType: Prefix). - Name based virtual hosting - route HTTP traffic to multiple host names at the same IP address (

host: foo.bar.com). - TLS - secure an Ingress by specifying a Secret that contains a TLS private key and certificate. Only supports a single TLS port, 443, and assumes TLS termination at the ingress point (traffic to the Service and its Pods is in plaintext).

kubernetes-dashboard 6.0.6 · helm/k8s-dashboard

Limitations:

- Non-HTTPS is tricky to setup

- Insecure access detected. Sign in will not be available. Access Dashboard securely over HTTPS or using localhost. Read more here .

- Can not be served under sub path, without rewriting target on proxy level

Attemps to setup HTTP ingress:

protocolHttp = true

service = {

externalPort = 9090

}

extraArgs = ["--insecure-bind-address=0.0.0.0", "--enable-insecure-login"]

ingress = {

enabled = true

className = "alb"

annotations = {

# TODO: ALB config to variables.

"alb.ingress.kubernetes.io/scheme" = "internet-facing"

"alb.ingress.kubernetes.io/target-type" = "ip"

"alb.ingress.kubernetes.io/load-balancer-name" = "forumpay-internal"

"alb.ingress.kubernetes.io/group.name" = "forumpay-internal"

"alb.ingress.kubernetes.io/group.order" = "-1"

# IP of Limitlex Office.

"alb.ingress.kubernetes.io/inbound-cidrs" = "1.2.3.4/32"

}

paths = ["/*"]

}

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: my-nginx-ingress

spec:

ingressClassName: nginx

defaultBackend:

service:

name: nginx-service

port:

number: 80# Rule

host: payment-gateway.local.limitlex.io

http:

paths:

- pathType: Prefix

path: /

backend:

service:

name: webhost-gui

port:

number: 80# Ingress

metadata:

name: webhost-gui

namespace: pay

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /$2

nginx.ingress.kubernetes.io/use-regex: "true"

# Rule

http:

paths:

- path: /htdocs_gui(/|$)(.*)

pathType: Prefix

backend:

service:

name: webhost-gui

port:

number: 80Persistent Volumes | Kubernetes

PVs are resources in the cluster. PVCs are requests for those resources and also act as claim checks to the resource. Lifecycle:

- Provisioning

- Static: cluster administrator creates a number of PVs. They carry the details of the real storage, which is available for use by cluster users.

- Dynamic: When none of the static PVs match PersistentVolumeClaim, the cluster may try to dynamically provision a volume specially for the PVC.

This provisioning is based on StorageClasses: the PVC must request a storage class and the administrator must have created and configured that class for dynamic provisioning to occur.

Claims that request the class

""effectively disable dynamic provisioning for themselves.

- Binding

- Master watches for new PVCs, finds a matching PV (if possible), and binds them together. If a PV was dynamically provisioned for a new PVC, the loop will always bind that PV to the PVC.

- Once bound, PersistentVolumeClaim binds are exclusive, regardless of how they were bound.

- Claims will remain unbound indefinitely if a matching volume does not exist and will be bound as matching volumes become available.

- Using

- Pods use claims as volumes. The cluster inspects the claim to find the bound volume and mounts that volume for a Pod. For volumes that support multiple access modes, the user specifies which mode is desired when using their claim as a volume in a Pod.

- Retain - manual reclamation

- kubernetes - What to do with Released persistent volume? - Stack Overflow

Delete the claimRef entry from PV specs, so as new PVC can bind to it. This should make the PV Available:

kubectl patch pv <your-pv> -p '{"spec":{"claimRef": null}}' - Delete all released volumes:

kubectl get pv | grep Released | awk '$1 {print$1}' | while read vol; do kubectl delete pv/${vol}; done

- kubernetes - What to do with Released persistent volume? - Stack Overflow

Delete the claimRef entry from PV specs, so as new PVC can bind to it. This should make the PV Available:

- Recycle - basic scrub (

rm -rf /thevolume/*). Deprecated, instead, the recommended approach is to use dynamic provisioning. - Delete - associated storage asset such as AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume is deleted

Storage Classes | Kubernetes A StorageClass provides a way for administrators to describe the "classes" of storage they offer. Different classes might map to quality-of-service levels, or to backup policies, or to arbitrary policies determined by the cluster administrators. Kubernetes itself is unopinionated about what classes represent.

Persistent Volumes | minikube

On Minikube there is pre-defined standard StorageClass and storage-provisioner Pod in kube-system namespace.

Provisioner will dynamically create {ersistent volumes, if persistent volume claims are introduced.

Provisioned persistent volumes will have Reclaim Policy set to Delete, which means volumes will be deleted once claims are deleted.

To use static provisioning, set storageClassName: "" on both PV and PVC:

apiVersion: v1

kind: PersistentVolume

metadata:

name: grafana-data

labels:

app: grafana

spec:

storageClassName: ""

accessModes:

- ReadWriteOnce

capacity:

storage: 1Gi

hostPath:

path: /data/grafana

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: grafana-data

labels:

app: grafana

spec:

storageClassName: ""

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1GiVolumes | Kubernetes

To use a volume, specify the volumes to provide for the Pod in .spec.volumes and declare where to mount those volumes into containers in .spec.containers[*].volumeMounts.

Persistent Volumes | Kubernetes Types:

cephfs- CephFS volumecsi- Container Storage Interface (CSI)fc- Fibre Channel (FC) storagehostPath- HostPath volume (for single node testing only; WILL NOT WORK in a multi-node cluster; consider usinglocalvolume instead)iscsi- iSCSI (SCSI over IP) storagelocal- local storage devices such as a disk, partition or directory mounted on nodes.nfs- Network File System (NFS) storagerbd- Rados Block Device (RBD) volume

Projected Volumes | Kubernetes Types:

Ephemeral Volumes | Kubernetes Types:

- emptyDir: empty at Pod startup, with storage coming locally from the kubelet base directory (usually the root disk) or RAM

- configMap, downwardAPI, secret: inject different kinds of Kubernetes data into a Pod

- CSI ephemeral volumes: similar to the previous volume kinds, but provided by special CSI drivers which specifically support this feature

- generic ephemeral volumes, which can be provided by all storage drivers that also support persistent volumes

Container Storage Interface (CSI) defines a standard interface for container orchestration systems (like Kubernetes) to expose arbitrary storage systems to their container workloads. Replaces provider-specific implementations (awsElasticBlockStore, azureDisk, gcePersistentDisk).

Stop Messing with Kubernetes Finalizers | Martin Heinz | Personal Website & Blog

Configuration Best Practices | Kubernetes

- Configuration files should be stored in version control before being pushed to the cluster.

- Group related objects into a single file whenever it makes sense.

- Put object descriptions in annotations, to allow better introspection.

- Don't use naked Pods (that is, Pods not bound to a ReplicaSet or Deployment) if you can avoid it. Naked Pods will not be rescheduled in the event of a node failure. Deployment creates a ReplicaSet and specifies a strategy to replace Pods (such as RollingUpdate). A Job may also be appropriate.

- Create a Service before its corresponding backend workloads (Deployments or ReplicaSets), and before any workloads that need to access it. When Kubernetes starts a container, it provides environment variables pointing to all the Services which were running when the container was started.

- An optional (though strongly recommended) cluster add-on is a DNS server, like CoreDNS.

- Don't specify a

hostPortfor aPodunless it is absolutely necessary, it limits the number of places thePodcan be scheduled, because each<hostIP, hostPort, protocol>combination must be unique.- For debugging purposes: apiserver proxy or

kubectl port-forward. - Explicitly exposing a Pod's port on the node: NodePort Service.

- For debugging purposes: apiserver proxy or

- Avoid using

hostNetwork, for the same reasons ashostPort. - Use headless Services (which have a

ClusterIPofNone) for service discovery when you don't needkube-proxyload balancing. - Define and use labels that identify semantic attributes of your application or Deployment, such as

{ app.kubernetes.io/name: MyApp, tier: frontend, phase: test, deployment: v3 }. - Use the Kubernetes common labels. These standardized labels enrich the metadata so tools, including

kubectland dashboard work in an interoperable way.

ConfigMaps | Kubernetes A ConfigMap is an API object used to store non-confidential data in key-value pairs. Consumed by pods:

- Inside a container command and args

- Environment variables for a container

- Add a file in read-only volume, for the application to read

- Write code to run inside the Pod that uses the Kubernetes API to read a ConfigMap

Mounted ConfigMaps are updated automatically. ConfigMaps consumed as environment variables are not updated automatically and require a pod restart.

apiVersion: v1

kind: ConfigMap

metadata:

name: game-demo

data:

# property-like keys; each key maps to a simple value

player_initial_lives: "3"

ui_properties_file_name: "user-interface.properties"

# file-like keys

game.properties: |

enemy.types=aliens,monsters

player.maximum-lives=5 Expose Pod Information to Containers Through Environment Variables | Kubernetes

env:

- name: MY_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeNameSecrets | Kubernetes A Secret is an object that contains a small amount of sensitive data such as a password, a token, or a key. Such information might otherwise be put in a Pod specification or in a container image. Using a Secret means that you don't need to include confidential data in your application code.

Kubernetes Secrets are, by default, stored unencrypted in the API server's underlying data store (etcd). Anyone with API access can retrieve or modify a Secret, and so can anyone with access to etcd.

data- base64-encoded.stringData- arbitrary strings.

- As files in a volume mounted on one or more of its containers.

- As container environment variable.

- By the kubelet when pulling images for the Pod.

- Service Account Tokens

- Secrets Store Providers | CSI Driver

- Custom signer for X.509 certificates and CertificateSigningRequests

- device plugin - expose node-local encryption hardware to a specific Pod

You can use one of the following type values to create a Secret to store the credentials for accessing a container image registry:

kubernetes.io/dockercfg- store a serialized~/.dockercfgwhich is the legacy format for configuring Docker command line.datafield contains a.dockercfgkey whose value is content of a~/.dockercfgfile encoded in the base64 format.kubernetes.io/dockerconfigjson- store a serialized JSON that follows the same format rules as the~/.docker/config.jsonfile which is a new format for~/.dockercfg.datafield of the Secret object must contain a.dockerconfigjsonkey, in which the content for the~/.docker/config.jsonfile is provided as a base64 encoded string.

# Create registry secret

kubectl create secret docker-registry regcred --docker-server=gitlab-registry.dev.limitlex.io --docker-username=... --docker-password=... --docker-email=alexander@limitlex.io

# Dump secret

kubectl get secret regcred -o jsonpath='{.data.*}' | base64 -d- Namespaces provide a mechanism for isolating groups of resources within a single cluster.

- Names of resources need to be unique within a namespace, but not across namespaces.

- Namespace-based scoping is applicable only for namespaced objects (e.g. Deployments, Services, etc) and not for cluster-wide objects (e.g. StorageClass, Nodes, PersistentVolumes, etc).

kubectl config view

kubectl config set-context --current --namespace=<insert-namespace-name-here>kubernetes - Service located in another namespace - Stack Overflow

<service.name>.<namespace name>.svc.cluster.local or <service.name>.<namespace name>

- Multi-tenant design considerations for Amazon EKS clusters | Containers

- Network Policies | Kubernetes

- Alternate compatible CNI plugins - Amazon EKS

IfNotPresent- the image is pulled only if it is not already present locally.Always- every time the kubelet launches a container, the kubelet queries the container image registry to resolve the name to an image digest.Never- the kubelet does not try fetching the image. If the image is somehow already present locally, the kubelet attempts to start the container; otherwise, startup fails.

Options:

- Configuring Nodes to Authenticate to a Private Registry (requires node configuration by cluster administrator)

- Kubelet Credential Provider to dynamically fetch credentials for private registries

- Pre-pulled Images (requires root access to all nodes to set up)

- Specifying

ImagePullSecretson a Pod (only pods which provide own keys can access the private registry) - recommended approach to run containers based on images in private registries. - Vendor-specific or local extensions (your cloud provider)

Helm Is Helm used just for templating? Helm is used for templating, sharing charts and managing releases. A collection of templated resources in Helm is called a chart. It uses the Go templating engine and the helpers from the Sprig library.

helm template . -x templates/pod.yaml --set env_name=production

helm search mysql- Declarative Management of Kubernetes Objects Using Kustomize | Kubernetes

- Kubernetes Kustomize Tutorial - DevOps by Example

- kustomize/combineConfigs.md at master · kubernetes-sigs/kustomize

Prune old configmaps:

kc apply --prune --prune-allowlist=core/v1/ConfigMap --all -k external-exchanges/aggregator/Configure Liveness, Readiness and Startup Probes | Kubernetes

livenessProbe- when to restart a container. For cases when applications running long periods of time eventually transition to broken states, and cannot recover except by being restarted.readinessProbe- know when a container is ready to start accepting traffic. Caution: liveness probes do not wait for readiness probes to succeed.startupProbe- know when a container application has started. Disables liveness and readiness checks until it succeeds.

Probes options: initialDelaySeconds, periodSeconds, timeoutSeconds, successThreshold, failureThreshold, terminationGracePeriodSeconds.

# Pod container.

livenessProbe:

exec:

command:

- cat

- /tmp/healthy

initialDelaySeconds: 5

periodSeconds: 5 # Pod container.

livenessProbe:

httpGet:

path: /healthz

port: 8080

httpHeaders:

- name: Custom-Header

value: Awesome

initialDelaySeconds: 3

periodSeconds: 3- Operator pattern | Kubernetes

- OperatorHub.io | The registry for Kubernetes Operators

- cncf/tag-app-delivery Operator-WhitePaper_v1-0.md

- Operators are software extensions to Kubernetes that make use of custom resources to manage applications and their components. Operators follow Kubernetes principles, notably the control loop.

- The operator pattern aims to capture the key aim of a human operator who is managing a service or set of services.

- Operators are clients of the Kubernetes API that act as controllers for a Custom Resource.

- Operators were introduced by CoreOS as a class of software that operates other software, putting operational knowledge collected by humans into software.

- Expand Kubernetes to support other workflows and functionalities specific to an application.

- Enable Kubernetes users to describe the desired state of AWS resources using the Kubernetes API and configuration language.

- No need to define resources outside of the cluster or run services that provide supporting capabilities like databases or message queues within the cluster.

grafana-operator/grafana-operator: An operator for Grafana that installs and manages Grafana instances, Dashboards and Datasources through Kubernetes/OpenShift CRs grafana-operator/deploy_grafana.md

- The ability to configure and manage your entire Grafana with the use Kubernetes resources such as CRDs, configMaps, Secrets etc.

- Automation of: Ingresses, Grafana product versions, Grafana dashboard plugins, Grafana datasources, Grafana notification channel provisioning, Oauth proxy and others.

- Efficient dashboard management through jsonnet, plugins, organizations and folder assignment, which can all be done through

.yamls! - Resources: Grafana, GrafanaDashboard, GrafanaDatasource, GrafanaNotificationChannel.

Prometheus Operator - Running Prometheus on Kubernetes

- Kubernetes Custom Resources: Use Kubernetes custom resources to deploy and manage Prometheus, Alertmanager, and related components.

- Simplified Deployment Configuration: Configure the fundamentals of Prometheus like versions, persistence, retention policies, and replicas from a native Kubernetes resource.

- Prometheus Target Configuration: Automatically generate monitoring target configurations based on familiar Kubernetes label queries; no need to learn a Prometheus specific configuration language.

Alternative setup without operator:

- How To Setup Prometheus Monitoring On Kubernetes [Tutorial]

- How To Setup Prometheus Node Exporter On Kubernetes

- Allows to define a common output destination for all your metrics (like InfluxDB output), and configure Sidecar monitoring on your application pods using labels.

Annotations for workload monitoring:

metadata:

annotations:

telegraf.influxdata.com/class: "default"

telegraf.influxdata.com/ports: "8080"Alternative DaemonSet example without operator: influxdata/tick-charts/daemonset.yaml

- Fluent Bit is a good choice as a logging agent because of its lightweight and efficiency, while Fluentd is more powerful to perform advanced processing on logs because of its rich plugins.

- Fluent Bit only mode: If you just need to collect logs and send logs to the final destinations, all you need is Fluent Bit.

- Fluent Bit + Fluentd mode: If you also need to perform some advanced processing on the logs collected or send to more sinks, then you also need Fluentd.

- Fluentd only mode: If you need to receive logs through networks like HTTP or Syslog and then process and send the log to the final sinks, you only need Fluentd.

- Fluent Bit will be deployed as a DaemonSet while Fluentd will be deployed as a StatefulSet.

Kubernetes Sidecar Container | Best Practices and Examples

- Sidecar containers are containers that are needed to run alongside the main container. The two containers share resources like pod storage and network interfaces. The sidecar containers can also share storage volumes with the main containers, allowing the main containers to access the data in the sidecars. The main and sidecar containers also share the pod network, and the pod containers can communicate with each other on the same network using localhost or the pod’s IP, reducing latency between them.

- Sidecar containers allow you to enhance and extend the functionalities of the main container without having to modify its codebase. Additionally, the sidecar container application can be developed in a different language than that of the main container application, offering increased flexibility.

Use cases:

- Applications Designed to Share Storage or Networks

- Main Application and Logging Application

- Keeping Application Configuration Up to Date

What is an 'endpoint' in Kubernetes? - Stack Overflow EndpointSlices | Kubernetes

- In the Kubernetes API, an Endpoints (the resource kind is plural) defines a list of network endpoints, typically referenced by a Service to define which Pods the traffic can be sent to.

- The EndpointSlice API is the recommended replacement for Endpoints.

Logging Architecture | Kubernetes

- Easiest and most adopted logging method for containerized applications is writing to standard output and standard error streams.

- Native functionality provided by a container engine or runtime is usually not enough for a complete logging solution. For example, you may want to access your application's logs if a container crashes, a pod gets evicted, or a node dies.

- In a cluster, logs should have a separate storage and lifecycle independent of nodes, pods, or containers. This concept is called cluster-level logging.

- A container runtime handles and redirects any output generated to a containerized application's stdout and stderr streams. Different container runtimes implement this in different ways; however, the integration with the kubelet is standardized as the CRI logging format.

- By default, if a container restarts, the kubelet keeps one terminated container with its logs. If a pod is evicted from the node, all corresponding containers are also evicted, along with their logs.

- You can configure the kubelet to rotate logs automatically. Kubelet is responsible for rotating container logs and managing the logging directory structure. The kubelet sends this information to the container runtime (using CRI), and the runtime writes the container logs to the given location.

- Only the contents of the latest log file are available through

kubectl logs.

- Use a node-level logging agent that runs on every node.

- Because the logging agent must run on every node, it is recommended to run the agent as a

DaemonSet.

- Because the logging agent must run on every node, it is recommended to run the agent as a

- Include a dedicated sidecar container for logging in an application pod.

- The sidecar container streams application logs to its own stdout.

- The sidecar container runs a logging agent, which is configured to pick up logs from an application container. Note: Using a logging agent in a sidecar container can lead to significant resource consumption. Moreover, you won't be able to access those logs using

kubectl logsbecause they are not controlled by the kubelet.

- Push logs directly to a backend from within an application.

- Cluster-logging that exposes or pushes logs directly from every application is outside the scope of Kubernetes.

- Kubernetes Fluentd - Fluentd

- fluent/fluentd-kubernetes-daemonset: Fluentd daemonset for Kubernetes and it Docker image

- Cluster-level Logging in Kubernetes with Fluentd | Medium

- How To Set Up an Elasticsearch, Fluentd and Kibana (EFK) Logging Stack on Kubernetes | DigitalOcean

- logging - FluentD log unreadable. it is excluded and would be examined next time - Stack Overflow

-

fluent/fluent-bit-kubernetes-logging: Fluent Bit Kubernetes Daemonset

-

Exporting Kubernetes Logs to Elasticsearch Using Fluent Bit | Medium

-

Process Kubernetes containers logs from the file system or Systemd/Journald, enrich with Kubernetes Metadata (Pod Name/ID, Container Name/ID, Labels, Annotations), centralize in third party storage services like Elasticsearch, InfluxDB.

-

Kubernetes manages a cluster of nodes, so our log agent tool will need to run on every node to collect logs from every POD, hence Fluent Bit is deployed as a DaemonSet.

fluent/helm-charts: Helm Charts for Fluentd and Fluent Bit

- aws/aws-for-fluent-bit: The source of the amazon/aws-for-fluent-bit container image

- eks-charts/stable/aws-for-fluent-bit at master · aws/eks-charts

- fluent-bit-docs/aws-credentials.md at 43c4fe134611da471e706b0edb2f9acd7cdfdbc3 · fluent/fluent-bit-docs

Standalone MongoDB Elasticsearch Versions

- The kubelet and container runtime do not run in containers. The kubelet runs your containers (grouped together in pods)

- The Kubernetes scheduler, controller manager, and API server run within pods (usually static Pods). The etcd component runs in the control plane, and most commonly also as a static pod. If your cluster uses kube-proxy, you typically run this as a

DaemonSet.

Resource metrics pipeline | Kubernetes Resource usage for node and pod, including metrics for CPU and memory. The HorizontalPodAutoscaler (HPA) and VerticalPodAutoscaler (VPA) use data from the metrics API to adjust workload replicas and resources to meet customer demand.

Components:

- cAdvisor: Daemon for collecting, aggregating and exposing container metrics included in Kubelet.

- kubelet: Node agent for managing container resources. Resource metrics are accessible using the

/metrics/resourceand/statskubelet API endpoints. - Summary API: API provided by the kubelet for discovering and retrieving per-node summarized stats available through the

/statsendpoint. - metrics-server: Cluster addon component that collects and aggregates resource metrics pulled from each kubelet.

- Metrics API: Kubernetes API supporting access to CPU and memory used for workload autoscaling.

Kubernetes - Collect Kubernetes metrics (Minikube's addon: 'metrics-server')

minikube addons enable metrics-server

kubectl get pods --namespace kube-system | grep metrics-server

kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes/minikube | jq

kubectl top node minikube

kubectl top pods- MySQL - Run a Single-Instance Stateful Application | Kubernetes

- How to Deploy Postgres on Kubernetes | Tutorial

- Deploy Grafana on Kubernetes | Grafana documentation

- pires/kubernetes-elasticsearch-cluster: Elasticsearch cluster on top of Kubernetes made easy.

- telegraf/plugins/inputs/prometheus at master · influxdata/telegraf

You must or you must not. There is no "should" :D

grafana-operator/deploy/manifests/v4.9.0 at v4.9.0 · grafana-operator/grafana-operator

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespaceAdmission Controllers Reference | Kubernetes

- Piece of code that intercepts requests to the Kubernetes API server prior to persistence of the object, but after the request is authenticated and authorized.

- May be validating, mutating, or both. Mutating controllers may modify related objects to the requests they admit; validating controllers may not.

- https://pkg.go.dev/k8s.io/client-go@v0.26.3/rest#InClusterConfig

- https://pkg.go.dev/k8s.io/client-go@v0.26.3/rest#Config

- https://pkg.go.dev/k8s.io/client-go/kubernetes#NewForConfig

- https://trstringer.com/connect-to-kubernetes-from-go/

Connect from inside the cluster The only different when connecting from within the cluster is how the config is retrieved. You will use

k8s.io/client-go/restand make a call torest.InClusterConfig(). After error checking, pass that config output tokubernetes.NewForConfig()and the rest of the code is the same.

- Taints and Tolerations | Kubernetes

- K9s - Manage Your Kubernetes Clusters In Style

- kind is a tool for running local Kubernetes clusters using Docker container “nodes”. kind was primarily designed for testing Kubernetes itself, but may be used for local development or CI.

Comparing different approaches.

Goals:

- Separate secrets from configuration

- Configuration can be then stored inside Git repository and versioned or in CI.

- Secrets access is restricted and given only to specific audience and described with policies.

- Physical storage - Kubernetes control plane ETCD key-value store (in case of EKS it is managed and encrypted by default)

- Representation - Kubernetes Secrets objects

- User Identities - Kubernetes Users

- Applications Identities - Kubernetes Service Accounts

- Policies language - Kubernetes RBAC

- Additional components - backup service, for example Velero

- Admin has complete access to the cluster.

- Developer has access only to specific resources:

v1- configmaps, namespaces, pods, persistentvolumeclaims... Butpods/execis not allowed.apps/v1- deployments, statefulsets...networking.k8s.io/v1- ingresses

- Admin creates secrets using

kubectl create secretCLI or Kubernetes Dashboard - Developer references secret and mounts it into the pod as volume.

- Application loads secrets from file.

Secrets Management - EKS Best Practices Guides

- On EKS, the EBS volumes for etcd nodes are encrypted with EBS encryption.

- Caution: Secrets in a particular namespace can be referenced by all pods in the secret's namespace.

- Caution: The node authorizer allows the Kubelet to read all of the secrets mounted to the node.

- Recommendations:

- Audit the use of Kubernetes Secrets

- Use separate namespaces as a way to isolate secrets from different applications

- Physical storage - Vault Integrated Storage (it supports other options as well) mounted to Persistent Volume (on EKS will be backed by EBS)

- Representation - Vault Secrets

- User Identities - Vault Entities coming from Username & Password, LDAP, OIDC authentication methods.

- Application Identities - Vault Entities coming from Kubernetes authentication methods.

- Policies language - Vault Policies

- Additional components - Vault and Vault Agent Injector instances inside cluster, Vault Agent automatically injected inside every pod.

- Additional configuration - Vault physical storage, secrets engine, authentication methods, roles.

- Admin has complete access to the Kubernetes cluster and Vault instance.

- Developer does not have

pods/execaccess in Kubernetes. - Admin creates secrets.

- Admin creates policy for accessing secrets.

- Admin creates role under Kubernetes auth method, attaches policy to it, defines which Kubernetes services accounts from which namespaces can assume it.

- Developer attaches Vault Agent Sidecar Injector Annotations to his pod. He specify which role application assumes, which secrets is being loaded and optionaly tempalte to render them into file.

- Application loads secrets from file.

Vault on Kubernetes Deployment Guide

Differences from Vault and Vault Agent approach:

- Admin sets up Secrets Store CSI Driver

- Admin sets up Vault CSI Provider instead of Vault Agent Injector.

Differences from Plain Kubernetes Secrets approach:

- Developer creates SecretProviderClass (with role attached) instead of defining annotations (which result in additional init container and sidecar container).

- Developer mounts to the pod volume of type

csiinstead ofsecret.

SecretProviderClass configured by admin.

apiVersion: secrets-store.csi.x-k8s.io/v1

kind: SecretProviderClass

metadata:

name: vault-foo

spec:

provider: vault

parameters:

roleName: "csi"

vaultAddress: "http://vault.vault:8200"

objects: |

- secretPath: "secret/data/foo"

objectName: "bar"

secretKey: "bar"

- secretPath: "secret/data/foo1"

objectName: "bar1"

secretKey: "bar1"Deployment volume requested by developer:

containers:

- volumeMounts:

- name: secrets-store-inline

mountPath: "/mnt/secrets-store"

readOnly: true

volumes:

- name: secrets-store-inline

csi:

driver: secrets-store.csi.k8s.io

readOnly: true

volumeAttributes:

secretProviderClass: "vault-foo"- Introduction - Secrets Store CSI Driver

- hashicorp/vault-csi-provider: HashiCorp Vault Provider for Secret Store CSI Driver

- Mount Vault Secrets through Container Storage Interface (CSI) Volume

- External Secrets Operator - can sync Vault secrets to Kubernetes secrets. Currently in beta.

- Vault Secrets Operator - also in beta.

- AWS Secrets Manager - $0.40 per secret per month. Can be connected using Secrets Store CSI Driver in a way similar to Vault. aws/secrets-store-csi-driver-provider-aws

- Bitnami Sealed Secrets - encrypting and storing secrets in repository.

- Terraform Best Practices

- Terraform Cheat Sheet - 21 Terraform CLI Commands & Examples

- hashicorp/aws | Terraform Registry

- Install | Terraform | HashiCorp Developer

- Terraform Tutorial - Getting Started With Terraform on AWS

Ubuntu/Debian:

wget -O- https://apt.releases.hashicorp.com/gpg | gpg --dearmor | sudo tee /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install terraform

terraform -install-autocomplete- Installing or updating the latest version of the AWS CLI - AWS Command Line Interface

- How to Configure AWS CLI Credentials on Windows, Linux and Mac

- Command completion - AWS Command Line Interface

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip