PGBouncer is a lightweight connection pooler for PostgreSQL. CockroachDB is a cloud-native SQL database for building global, scalable cloud services that survive disasters.

CockroachDB is PostgreSQL wire compatible database, which means it aims to have tight compatibility with the PG ecosystem. Today, we're going to wire PGBouncer to work with CockroachDB. This article is meant to scratch the surface of possibilities unblocked by PGBouncer with CockroachDB and not meant to be an in-depth overview. We're currently researching this topic and will follow up with official docs on proper architecture and sizing of PGBouncer and CockroachDB.

- Using PGBouncer with CockroachDB

- Using PGBouncer with Cockroach Cloud Free Tier

- Exploring PGBouncer auth_type(s) with CockroachDB

For starters, lets review the reasons someone would leverage a connection pool. A database connection is costly, every connection pays penalty during user authentication. When you have many connections, they all take up prescious memory resources from the cluster. Exceeding connection limits leads to context switching and long waits. There are several approaches to working around a finite resource pool. On the client side, you have connection pooling mechanisms, most popular languages support connection pooling. Now what if you're working with a serverless architectures? There is no way to define a client side connection pool with a serverless application. To make matters worse, there could be hundreds and thousands of serverless functions connecting to the database. This is where middleware like PGBouncer comes in.

CockroachDB scales by increasing virtual CPU cores. Internal tests have shown that 4 active connections per vCPU is a sweet spot for maintaining quality of service and avoiding resource starvation. So say we need to size our cluster for 100 concurrent active connections. In CockroachDB, SHOW QUERIES command can show how many active connections are executing concurrently. I should mention that there is no penalty or hard limit to how many idle connections CockroachDB can support. So given 100 active connections, we need a cluster of 3 8 vCPU nodes to support our 1:4 ratio of vCPU to active connections. Now if we needed to support thousands of concurrent connections, we'd be talking about a very large cluster. This is not cost effective by any means. Embedding a PGBouncer in this scenario eases the 1:4 ratio quite a bit. I don't have empirical data yet and further research is necessary to prove the point, however.

I created a sample application that spins up a three node CockroachDB cluster, a load balancer (HAProxy), a client container and a single instance of PGBouncer. The last part is the key, you can scale your connection pool by spinning up multiple instances of PGBouncer and increase your connection pool independently of CockroachDB vCPU.

PGBouncer requires a few configuration files to get started. First is the pgbouncer.ini file.

#pgbouncer.ini

[databases]

* = host = lb port=26257 dbname=tpcc

[pgbouncer]

listen_addr = *

listen_port = 27000

auth_file = /etc/pgbouncer/userlist.txt

auth_type = cert

pool_mode = session

max_client_conn = 500

default_pool_size = 50

min_pool_size = 10

reserve_pool_size = 10

reserve_pool_timeout = 10

max_db_connections = 50

max_user_connections = 50

server_round_robin = 1

ignore_startup_parameters = extra_float_digits

application_name_add_host = 1

# Log settings

log_connections = 1

log_disconnections = 1

log_pooler_errors = 1

verbose = 0

admin_users = root

# Connection sanity checks, timeouts

server_reset_query = false

server_lifetime = 3600

# TLS settings

client_tls_sslmode = verify-full

client_tls_key_file = /home/postgres/node_certs/node.key

client_tls_cert_file = /home/postgres/node_certs/node.crt

client_tls_ca_file = /home/postgres/certs/ca.crt

client_tls_protocols = secure

client_tls_ciphers = secure

server_tls_sslmode = verify-full

server_tls_ca_file = /shared/node/certs/ca.crt

server_tls_key_file = /shared/node/certs/node.key

server_tls_cert_file = /shared/node/certs/node.crt

# Dangerous timeouts

query_wait_timeout = 300The database associated with the bounced connections is going to be tpcc. We will accept connections from the load balancer host lb on port 26257. This has some advantages as having PGBouncer connections load balanced through the HAProxy will prevent connection issues with dead nodes. HAProxy will take care of that. The flowchart below summarizes the architecture.

[databases]

* = host = lb port=26257 dbname=tpccI am considering whether adding a load balancer in front of multiple PGBouncer instances will eliminate a single point of failure for PGBouncer but that's a topic for another day.

Going further, we're going to accept connections on the PGBouncer port 27000 just to make the concept easier to understand and distinguish between a pooled and standard connection.

[pgbouncer]

listen_addr = *

listen_port = 27000One particular issue with setting up PGBouncer with CockroachDB is the security. There's a requirement for clients authenticating to PGBouncer, to use the PGBouncer originated certificate authority. In CockroachDB, you can use cockroach cert create-ca to generate a CA cert. Unfortunately in PGBouncer, you need to use the equivalent openssl command. We have steps documented in the following section. Other than that, PGBouncer is configured with CockroachDB similarly to PG. If you get hung up, feel free to inspect the Dockerfile I'd used to create the sample application.

Now back to the configuration, the other file necessary for PGBouncer to work is the userlist.txt. This list includes all of the users authorized to access the database through PGBouncer. These users must be added to CockroachDB as well.

"root" ""

"roach" "roach"There's a way to hash the passwords which I need to still add but for the time being the field is in plain text.

In CockroachDB, adding a user roach which is going to be used to access the database via PGBouncer can be created with the following command:

CREATE USER IF NOT EXISTS roach WITH PASSWORD 'roach';The last step is to launch the application so feel free to git clone the repo, navigate to the cockroach-secure-pgbouncer directory and start the docker compose appalication using the helper up.sh script.

Once the compose app starts up, we can launch a workload and inspect the behavior in the CockroachDB DBConsole.

First, execute the following command to load workload data.

docker exec -it client cockroach workload fixtures import tpcc \

--warehouses=10 'postgresql://roach@pgbouncer:27000/tpcc?sslcert=/shared/client/certs%2Fclient.roach.crt&sslkey=/shared/client/certs%2Fclient.roach.key&sslmode=verify-full&sslrootcert=/shared/client/certs%2Fca.crt'Notice the connection string, we're using the user roach, with hostname pgbouncer at port 27000. I'm using the custom certs in the mounted volumed /shared. These certs were generated using PGBouncer CA. This is a critical step in getting TLS work with CockroachDB and PGBouncer.

Once the workload load completes, we can execute the workload.

docker exec -it client cockroach workload run tpcc \

--warehouses=10 \

--conns 50 \

--active-warehouses=10 \

--ramp=3m \

--duration=10m \

--workers=100 \

--tolerate-errors \

'postgresql://roach@pgbouncer:27000/tpcc?sslcert=/shared/client/certs%2Fclient.roach.crt&sslkey=/shared/client/certs%2Fclient.roach.key&sslmode=verify-full&sslrootcert=/shared/client/certs%2Fca.crt'At this point we can go to the DBConsole and inspect the metrics. Firstly, navigating to the statements page and toggling the App: All drop down to view all of the open connections.

Notice the large number of connections prefixed with tpcc, that's by design to emphasize connections are pooled through the PGBouncer. It is toggled via pgbouncer.ini config application_name_add_host = 1. It is not recommended to use it in production because that will throw the console off with thousands of instances of connections, I'm using that for demo purposes only.

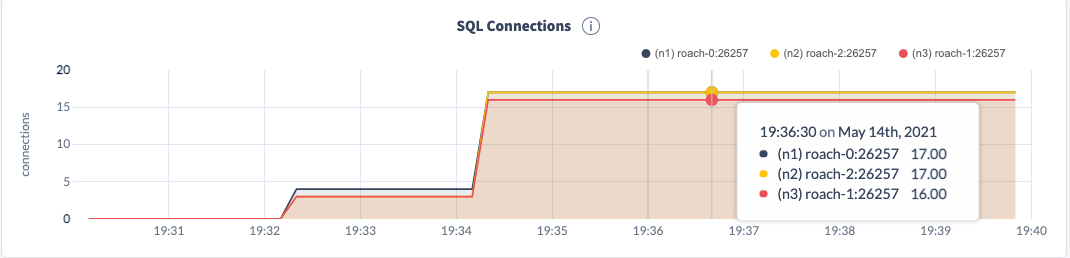

The next step is to navigate to the metrics page, and specifically the SQL page. The two graphs we want to observe are SQL Connections and SQL Queries.

These are the only active connections on the cluster and we know they're created via PGBouncer.

Lastly, let's view the PGBouncer logs, we can do that simply with:

docker logs -f pgbouncerStarting pgbouncer.

2021-05-14 19:46:35.768 UTC [1] LOG kernel file descriptor limit: 1048576 (hard: 1048576); max_client_conn: 500, max expected fd use: 512

2021-05-14 19:46:35.769 UTC [1] LOG listening on 0.0.0.0:27000

2021-05-14 19:46:35.769 UTC [1] LOG listening on [::]:27000

2021-05-14 19:46:35.769 UTC [1] LOG listening on unix:/tmp/.s.PGSQL.27000

2021-05-14 19:46:35.769 UTC [1] LOG process up: PgBouncer 1.15.0, libevent 2.1.8-stable (epoll), adns: c-ares 1.15.0, tls: LibreSSL 2.7.5Once we start connecting, we will see many authentication attempts

2021-05-14 19:47:50.855 UTC [1] LOG C-0x558a07bf66d0: tpcc/[email protected]:43920 login attempt: db=tpcc user=roach tls=TLSv1.2/ECDHE-RSA-AES256-GCM-SHA384

2021-05-14 19:47:50.985 UTC [1] LOG C-0x558a07bf66d0: tpcc/[email protected]:43920 closing because: client close request (age=0s)

2021-05-14 19:47:53.392 UTC [1] LOG C-0x558a07bf71c0: tpcc/[email protected]:43834 closing because: client unexpected eof (age=16s)

2021-05-14 19:47:53.393 UTC [1] LOG C-0x558a07bf6f90: tpcc/[email protected]:43784 closing because: client unexpected eof (age=16s)

2021-05-14 19:48:35.630 UTC [1] LOG stats: 1 xacts/s, 1 queries/s, in 477 B/s, out 257 B/s, xact 917435 us, query 744086 us, wait 19202 usWe can see that PGBouncer terminates finished connections and reuses them in the following attempts

2021-05-14 19:44:13.167 UTC [1] LOG C-0x55da4e7d94e0: tpcc/[email protected]:50112 login attempt: db=tpcc user=roach tls=TLSv1.2/ECDHE-RSA-AES256-GCM-SHA384

2021-05-14 19:44:13.167 UTC [1] LOG C-0x55da4e7d8c20: tpcc/[email protected]:50110 login attempt: db=tpcc user=roach tls=TLSv1.2/ECDHE-RSA-AES256-GCM-SHA384

2021-05-14 19:44:13.373 UTC [1] LOG C-0x55da4e7d9940: tpcc/[email protected]:50278 login attempt: db=tpcc user=roach tls=TLSv1.2/ECDHE-RSA-AES256-GCM-SHA384

2021-05-14 19:44:14.697 UTC [1] LOG C-0

...

2021-05-14 19:49:06.421 UTC [1] LOG S-0x558a07c5ec60: tpcc/[email protected]:26257 SSL established: TLSv1.2/ECDHE-RSA-AES128-GCM-SHA256/ECDH=X25519

2021-05-14 19:49:06.438 UTC [1] LOG S-0x558a07c5ee90: tpcc/[email protected]:26257 new connection to server (from 172.23.0.7:48364)

2021-05-14 19:49:06.446 UTC [1] LOG S-0x558a07c5ee90: tpcc/[email protected]:26257 SSL established: TLSv1.2/ECDHE-RSA-AES128-GCM-SHA256/ECDH=X25519

2021-05-14 19:49:06.456 UTC [1] LOG S-0x558a07c5f0c0: tpcc/[email protected]:26257 new connection to server (from 172.23.0.7:48368)

2021-05-14 19:49:06.464 UTC [1] LOG S-0x558a07c5f0c0: tpcc/[email protected]:26257 SSL established: TLSv1.2/ECDHE-RSA-AES128-GCM-SHA256/ECDH=X25519We can observe that the login attempt is made to the tpcc database by user roach using TLS. PGBouncer creates an arbitrary port on which the bounced connection is created.

Last thing I should mention is that PGBouncer operates in three different pool modes. So far, I've been able to only evaluate session mode. The prepared statements do not work in this mode and this limits the usability to only explicit queries. That said, if you want to carve a pool of users that will work with the database without taking prescious resources from the cluster at least for the authentication, PGBouncer makes a viable option.